In this tutorial, we will show you how to install Apache Hadoop on Ubuntu 20.04 LTS. Apache Hadoop is an open-source framework for distributed processing of large datasets across clusters of computers. It is widely used for big data workloads, letting organizations store, manage, and analyze large volumes of structured and unstructured data.

This article assumes you have at least basic knowledge of Linux and know how to use the shell. The commands below use sudo to obtain root privileges; if you are already logged in as root, you can omit it. I will show you the step-by-step installation of Apache Hadoop on Ubuntu 20.04 (Focal Fossa). You can follow the same instructions for Ubuntu 18.04, 16.04, and other Debian-based distributions such as Linux Mint.

Prerequisites

- A server running one of the following operating systems: Ubuntu 20.04, 18.04, 16.04, or another Debian-based distribution such as Linux Mint.

- It’s recommended that you use a fresh OS install to prevent any potential issues.

- SSH access to the server (or just open Terminal if you’re on a desktop).

- A non-root sudo user or access to the root user. We recommend acting as a non-root sudo user, however, as you can harm your system if you’re not careful when acting as root.

Install Apache Hadoop on Ubuntu 20.04 LTS Focal Fossa

Step 1. Update Your System.

Make sure your Ubuntu system is up-to-date with the latest security patches and updates. Open a terminal and run the following commands:

sudo apt update

sudo apt upgrade

Step 2. Installing Java.

Hadoop 3.x runs on Java 8 or Java 11. On Ubuntu 20.04, the default-jdk package installs OpenJDK 11. To install it, use the following command:

sudo apt install default-jdk default-jre

Once installed, you can verify the installed version of Java with the following command:

java -version
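If you want to confirm the major version in a script rather than by eye, the version string can be parsed. The following is a minimal sketch; java_major_version is a hypothetical helper name (it is not part of Java or Hadoop), and it assumes the usual version "x.y.z" format on the first line of the output:

```shell
# java_major_version LINE
# Prints the major Java version found in the first line of `java -version`
# output (hypothetical helper, shown for illustration only).
java_major_version() {
    line=$1
    # Pull out the quoted version string, e.g. 11.0.22 or 1.8.0_392.
    ver=$(printf '%s\n' "$line" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
    case "$ver" in
        "")  return 1 ;;                                   # no version string found
        1.*) ver=${ver#1.}; printf '%s\n' "${ver%%.*}" ;;  # legacy 1.x scheme (1.8 is Java 8)
        *)   printf '%s\n' "${ver%%[._-]*}" ;;             # modern scheme (11.0.22 is Java 11)
    esac
}

# Example (not run here):
#   java_major_version "$(java -version 2>&1 | head -n 1)"
```

Note that java -version writes to stderr, which is why the example redirects it with 2>&1.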

Step 3. Create Hadoop User.

First, create a new user named hadoop. The later steps run sudo as this user, so also add it to the sudo group:

sudo adduser hadoop

sudo usermod -aG sudo hadoop

Next, log in as the hadoop user, generate an SSH key pair, and authorize it for logins to this machine:

su - hadoop

ssh-keygen -t rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

After that, verify that passwordless SSH works with the following command:

ssh localhost

Once you log in without a password, you can proceed to the next step.

Step 4. Installing Apache Hadoop on Ubuntu 20.04.

Now download the latest stable version of Apache Hadoop. At the time of writing, it is version 3.4.0. Use the binary release (hadoop-3.4.0.tar.gz) rather than the -src source archive, which would have to be compiled first:

su - hadoop

wget https://archive.apache.org/dist/hadoop/common/hadoop-3.4.0/hadoop-3.4.0.tar.gz

tar -xvzf hadoop-3.4.0.tar.gz

Next, move the extracted directory to /usr/local/hadoop and create a logs directory inside it:

sudo mv hadoop-3.4.0 /usr/local/hadoop

sudo mkdir /usr/local/hadoop/logs

Change the ownership of the Hadoop directory to the hadoop user:

sudo chown -R hadoop:hadoop /usr/local/hadoop
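Apache publishes a .sha512 file next to each release archive, so the download in this step can be checked for corruption or tampering. The sketch below is one way to do that, under the assumption that you copy the expected hex digest from that file by hand; verify_sha512 is a hypothetical helper name, and it relies on the sha512sum tool from coreutils:

```shell
# verify_sha512 FILE EXPECTED_HEX
# Succeeds only if the SHA-512 digest of FILE equals EXPECTED_HEX.
# The comparison ignores the letter case of the expected digest.
verify_sha512() {
    actual=$(sha512sum "$1" | awk '{print $1}')
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    [ -n "$actual" ] && [ "$actual" = "$expected" ]
}

# Example (not run here; replace <digest> with the published value):
#   verify_sha512 hadoop-3.4.0.tar.gz "<digest>" && echo "checksum OK"
```

Ideally, run the check right after wget and before extracting the archive.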

Step 5. Configure Apache Hadoop.

Set up the environment variables. Edit the ~/.bashrc file and append the following values at the end of the file:

nano ~/.bashrc

Add the following lines:

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

Apply the environment variables to the currently running session:

source ~/.bashrc
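Once HADOOP_HOME is set, a quick sanity check can catch a wrong path or a source archive unpacked by mistake. This is only a sketch: is_hadoop_home is a hypothetical helper name, and the three paths it tests are simply the ones this tutorial relies on (bin/hadoop, sbin, and etc/hadoop):

```shell
# is_hadoop_home DIR
# Succeeds if DIR contains the pieces of a binary Hadoop release that the
# rest of this tutorial uses: bin/hadoop, sbin/ and etc/hadoop/.
is_hadoop_home() {
    [ -x "$1/bin/hadoop" ] && [ -d "$1/sbin" ] && [ -d "$1/etc/hadoop" ]
}

# Example (not run here):
#   is_hadoop_home "$HADOOP_HOME" || echo "HADOOP_HOME does not look right" >&2
```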

Next, define the Java environment variables in hadoop-env.sh, which holds settings for YARN, HDFS, MapReduce, and related Hadoop components:

sudo nano $HADOOP_HOME/etc/hadoop/hadoop-env.sh

Add the following lines:

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

export HADOOP_CLASSPATH+=" $HADOOP_HOME/lib/*.jar"
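The JAVA_HOME path above is where Ubuntu 20.04 places OpenJDK 11 on amd64; on other releases or architectures it may differ. One way to find the right value is to resolve the java binary and strip the trailing bin/java. The helper below is a sketch with a hypothetical name, java_home_from_binary, and it only handles the two common layouts shown in its comments:

```shell
# java_home_from_binary PATH_TO_JAVA
# Prints the JDK/JRE home for a fully resolved java binary path by removing
# the trailing /bin/java (or /jre/bin/java, used by some Java 8 packages).
java_home_from_binary() {
    path=$1
    case "$path" in
        */jre/bin/java) printf '%s\n' "${path%/jre/bin/java}" ;;
        */bin/java)     printf '%s\n' "${path%/bin/java}" ;;
        *)              return 1 ;;   # not a recognizable java path
    esac
}

# Example (not run here):
#   java_home_from_binary "$(readlink -f "$(command -v java)")"
```

On Ubuntu, readlink -f follows the /usr/bin/java symlink chain through /etc/alternatives to the real installation directory.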

You can now verify the Hadoop version using the following command:

hadoop version

Step 6. Configure core-site.xml file.

Open the core-site.xml file in a text editor:

sudo nano $HADOOP_HOME/etc/hadoop/core-site.xml

Add the following lines:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://0.0.0.0:9000</value>

<description>The default file system URI</description>

</property>

</configuration>

Step 7. Configure hdfs-site.xml File.

Use the following command to open the hdfs-site.xml file for editing:

sudo nano $HADOOP_HOME/etc/hadoop/hdfs-site.xml

Add the following lines:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/hadoop/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///home/hadoop/hdfs/datanode</value>

</property>

</configuration>

Step 8. Configure mapred-site.xml File.

Use the following command to access the mapred-site.xml file:

sudo nano $HADOOP_HOME/etc/hadoop/mapred-site.xml

Add the following lines:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Step 9. Configure yarn-site.xml File.

Open the yarn-site.xml file in a text editor:

sudo nano $HADOOP_HOME/etc/hadoop/yarn-site.xml

Add the following lines:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
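After editing the configuration files, it can help to read a value back and confirm it was saved as intended. The following is a rough sketch rather than a real XML parser: get_property is a hypothetical helper name, and it assumes each name and value element sits on a single line, as in the snippets above:

```shell
# get_property FILE NAME
# Prints the <value> that follows <name>NAME</name> in a Hadoop-style
# configuration file. Prints nothing if the property is not found.
get_property() {
    awk -v want="$2" '
        /<name>/ {
            n = $0
            sub(/.*<name>/, "", n)
            sub(/<\/name>.*/, "", n)
            hit = (n == want)          # remember whether this is the property we want
        }
        /<value>/ && hit {
            v = $0
            sub(/.*<value>/, "", v)
            sub(/<\/value>.*/, "", v)
            print v
            exit
        }
    ' "$1"
}

# Example (not run here):
#   get_property "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.replication
```

Hadoop itself can also report the effective value of a key with hdfs getconf -confKey followed by the key name, which additionally takes defaults into account.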

Step 10. Format HDFS NameNode.

Now log in as the hadoop user and format the HDFS NameNode with the following commands:

su - hadoop

hdfs namenode -format

Step 11. Start the Hadoop Cluster.

Now start the NameNode and DataNode with the following command:

start-dfs.sh

Then, start the YARN ResourceManager and NodeManager:

start-yarn.sh

Watch the output to confirm that each daemon starts. To check that all services are running, use the jps command; on a single-node setup it should list NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager:

jps

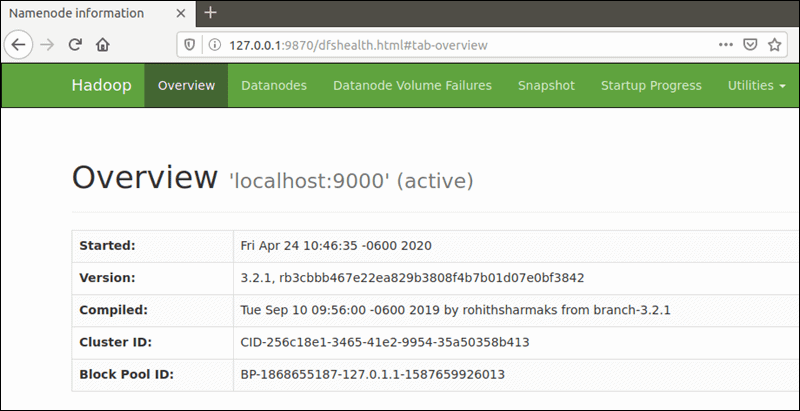
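Checking the jps listing by eye is easy to get wrong, so the comparison can be scripted. This is a minimal sketch: missing_daemons is a hypothetical helper name, and the list of expected daemons is an assumption that fits the single-node setup in this tutorial:

```shell
# missing_daemons
# Reads `jps` output ("<pid> <name>" per line) on stdin and prints the name
# of every expected Hadoop daemon that does not appear in it.
missing_daemons() {
    seen=$(awk '{print $2}')
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -x matches whole lines, so NameNode does not match SecondaryNameNode.
        printf '%s\n' "$seen" | grep -qx "$d" || printf '%s\n' "$d"
    done
}

# Example (not run here):
#   jps | missing_daemons
```

No output means all five daemons were found; any name printed points to a daemon whose log under $HADOOP_HOME/logs is worth reading.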

Step 12. Accessing Apache Hadoop.

The default port number 9870 gives you access to the Hadoop NameNode UI:

http://your-server-ip:9870

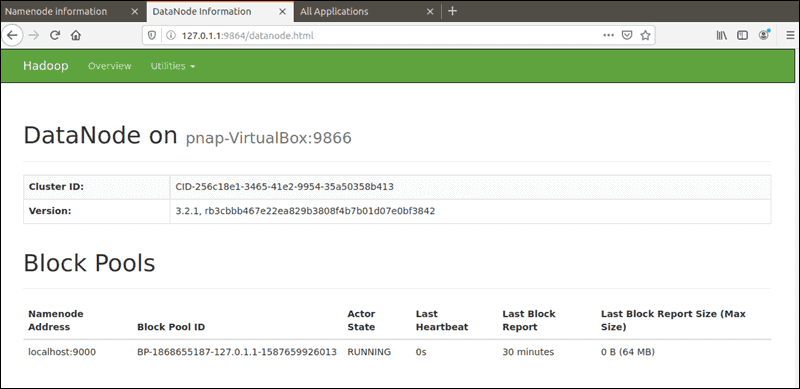

The default port 9864 is used to access individual DataNodes directly from your browser:

http://your-server-ip:9864

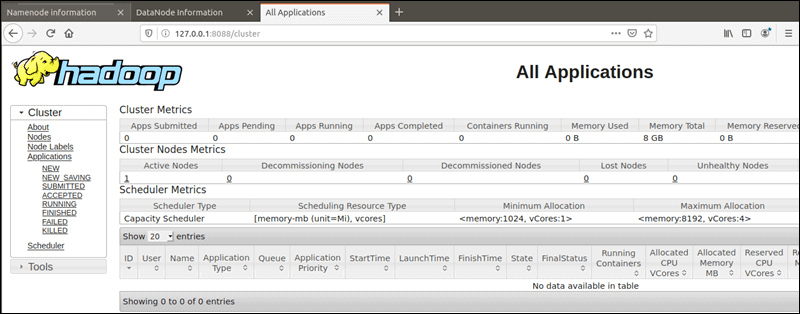

The YARN Resource Manager is accessible on port 8088:

http://your-server-ip:8088

Congratulations! You have successfully installed Hadoop. Thanks for using this tutorial for installing Apache Hadoop on your Ubuntu 20.04 LTS Focal Fossa system. For additional help or useful information, we recommend checking the official Apache Hadoop website.