How To Install Apache Spark on Fedora 38

In this tutorial, we will show you how to install Apache Spark on Fedora 38. Apache Spark is an open-source, distributed computing system for large-scale data processing and analytics. It keeps data in memory where possible, which makes it fast and a common choice for data engineers and data scientists.

This article assumes you have at least basic knowledge of Linux, know how to use the shell, and have a server or VPS of your own to work on. The installation is quite simple and assumes you are running as the root account; if not, you may need to add ‘sudo‘ to the commands to get root privileges. I will show you the step-by-step installation of Apache Spark on Fedora 38.

Prerequisites

- A server running Fedora 38.

- It’s recommended that you use a fresh OS install to prevent any potential issues.

- SSH access to the server (or just open Terminal if you’re on a desktop).

- An active internet connection. You’ll need an internet connection to download the necessary packages and dependencies for Apache Spark.

- A non-root sudo user or access to the root user. We recommend acting as a non-root sudo user, however, as you can harm your system if you’re not careful when acting as root.

Install Apache Spark on Fedora 38

Step 1. Before we can install Apache Spark on Fedora 38, it’s important to ensure that our system is up-to-date with the latest packages. This will ensure that we have access to the latest features and bug fixes and that we can install Apache Spark without any issues:

sudo dnf update

Step 2. Installing Java.

Apache Spark runs on the Java Virtual Machine (JVM), so Java must be installed first. To install OpenJDK 11, execute the following command:

sudo dnf install java-11-openjdk

Now, verify the installation by checking the Java version:

java -version
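Spark 3.5 is documented to run on Java 8, 11, and 17, so in a provisioning script it can be worth checking the major version rather than reading the output by eye. The following is a minimal sketch; the helper name java_major is hypothetical, and it only handles the common `version "x.y.z"` output format.

```shell
#!/usr/bin/env bash
# java_major VERSION_OUTPUT
# Extracts the major Java version from text such as
#   openjdk version "11.0.20" 2023-07-18   -> 11
#   java version "1.8.0_382"               -> 8
java_major() {
  local ver
  # Pull the quoted version string out of the first line that contains one.
  ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$ver" in
    1.*) printf '%s\n' "$ver" | cut -d. -f2 ;;  # legacy scheme: 1.8.0 means Java 8
    *)   printf '%s\n' "$ver" | cut -d. -f1 ;;  # modern scheme: 11.0.20 means Java 11
  esac
}

# java -version writes to stderr, hence the 2>&1.
if command -v java >/dev/null 2>&1; then
  echo "Detected Java major version: $(java_major "$(java -version 2>&1)")"
else
  echo "java not found on PATH"
fi
```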

Step 3. Installing Apache Spark on Fedora 38.

Visit the official Apache Spark website and choose the Spark version that best suits your requirements. For most users, the package pre-built for Apache Hadoop 3 is suitable:

wget https://archive.apache.org/dist/spark/spark-3.5.0/spark-3.5.0-bin-hadoop3.tgz
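Before extracting, it is worth confirming that the download is intact. Apache publishes a SHA-512 checksum alongside each release archive. A minimal sketch follows; verify_sha512 is a hypothetical helper, and the expected hash has to be copied by hand from the official download page.

```shell
#!/usr/bin/env bash
# verify_sha512 FILE EXPECTED_HEX
# Returns 0 when the SHA-512 digest of FILE equals EXPECTED_HEX.
# The comparison ignores letter case and embedded spaces or newlines.
verify_sha512() {
  local file="$1" expected actual
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f' | tr -d ' \n')
  actual=$(sha512sum "$file" | awk '{print $1}')
  [ "$actual" = "$expected" ]
}
```

It could be used as `verify_sha512 spark-3.5.0-bin-hadoop3.tgz "<hash from the download page>" && echo "checksum OK"`.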

After downloading Spark, extract the archive using the following command:

tar -xvf spark-3.5.0-bin-hadoop3.tgz

Next, move the extracted directory to the /opt directory:

mv spark-3.5.0-bin-hadoop3 /opt/spark

Then, add a user to run Spark and set the ownership of the Spark directory:

useradd spark
chown -R spark:spark /opt/spark
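Optionally, the Spark launch scripts can be put on the PATH so that commands such as spark-shell and spark-submit work from any directory. This is a sketch that assumes the /opt/spark location used above; the file name /etc/profile.d/spark.sh is only a suggestion.

```shell
# Candidate contents for a profile script such as /etc/profile.d/spark.sh
export SPARK_HOME=/opt/spark
export PATH="$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin"
```

After logging in again, `spark-submit --version` should print the Spark version banner.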

Step 4. Create Systemd Service.

Now we create a systemd service file to manage the Spark master service:

nano /etc/systemd/system/spark-master.service

Add the following content:

[Unit]
Description=Apache Spark Master
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/opt/spark/sbin/start-master.sh
ExecStop=/opt/spark/sbin/stop-master.sh

[Install]
WantedBy=multi-user.target

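Save and close the file, then create a service file for the Spark worker (called a “slave” in older releases; Spark 3.5 still ships start-slave.sh, but it is deprecated in favor of start-worker.sh):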

nano /etc/systemd/system/spark-slave.service

Add the following configuration, replacing your-IP-server with the IP address of the Spark master:

[Unit]
Description=Apache Spark Slave
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/opt/spark/sbin/start-slave.sh spark://your-IP-server:7077
ExecStop=/opt/spark/sbin/stop-slave.sh

[Install]
WantedBy=multi-user.target
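When the same unit has to be created on several machines, typing the master address into an editor each time is error-prone. As an alternative, the unit can be generated with the address filled in. This is only a sketch: render_worker_unit is a hypothetical helper, and it reproduces the unit shown above with the master URL substituted.

```shell
#!/usr/bin/env bash
# render_worker_unit MASTER_HOST [MASTER_PORT]
# Prints the worker unit file to stdout with the master URL filled in.
# MASTER_PORT defaults to 7077, the port used in this tutorial.
render_worker_unit() {
  local host="$1" port="${2:-7077}"
  cat <<EOF
[Unit]
Description=Apache Spark Slave
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/opt/spark/sbin/start-slave.sh spark://${host}:${port}
ExecStop=/opt/spark/sbin/stop-slave.sh

[Install]
WantedBy=multi-user.target
EOF
}
```

For example, `render_worker_unit 192.0.2.10 | sudo tee /etc/systemd/system/spark-slave.service` would write the unit for a master at the documentation address 192.0.2.10.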

Save and close the file, then reload the systemd daemon, start the master service, and enable it at boot:

sudo systemctl daemon-reload
sudo systemctl start spark-master
sudo systemctl enable spark-master
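The commands above only start the master. Before starting the worker service, it can help to wait until the master is accepting connections on port 7077. The sketch below relies on bash's /dev/tcp redirection, so it requires bash; wait_for_port is a hypothetical helper.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Polls once per second until a TCP connection to HOST:PORT succeeds.
# Returns 0 on success, 1 if the timeout (default 30 seconds) expires first.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-30}" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connect (bash feature).
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}
```

For example: `wait_for_port your-IP-server 7077 30 && sudo systemctl start spark-slave && sudo systemctl enable spark-slave`.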

Step 5. Configure Firewall.

First, you need to identify the ports that Apache Spark uses for its various components. Typically, the essential ports you should open are:

- Spark Master Web UI: Port 8080 (or the port you’ve configured)

- Spark Master Port: 7077 (or the port you’ve configured)

- Spark Worker Ports: Random ports within a specified range (default is 1024-65535)

To open the Web UI and Spark Master ports (8080 and 7077 by default), you can use the firewall-cmd command as follows:

sudo firewall-cmd --zone=public --add-port=8080/tcp --permanent
sudo firewall-cmd --zone=public --add-port=7077/tcp --permanent

After adding the necessary rules, you should reload the firewall for the changes to take effect:

sudo firewall-cmd --reload
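Because the worker picks a random port by default, a firewall rule for it cannot be written in advance. One option is to pin the worker ports in /opt/spark/conf/spark-env.sh, which the standalone launch scripts source at startup. The sketch below uses the SPARK_WORKER_PORT and SPARK_WORKER_WEBUI_PORT variables from the Spark standalone documentation; the value 7078 is an arbitrary choice for illustration, while 8081 is the worker web UI default.

```shell
# Candidate contents for /opt/spark/conf/spark-env.sh
export SPARK_WORKER_PORT=7078        # arbitrary fixed port chosen for this example
export SPARK_WORKER_WEBUI_PORT=8081  # default worker web UI port, stated explicitly
```

After restarting the worker, the pinned port can be opened with the same firewall-cmd pattern used for 8080 and 7077. Driver and block manager ports are separate settings (spark.driver.port and spark.blockManager.port) and are not covered by this sketch.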

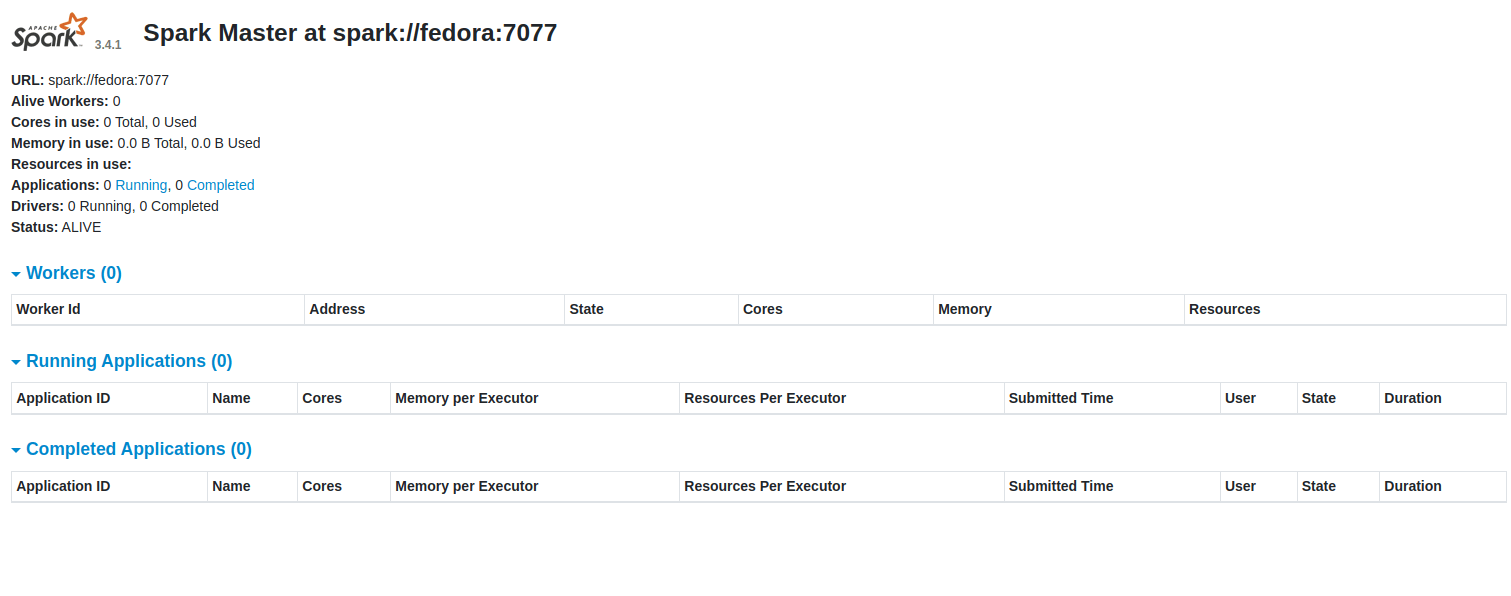

Step 6. Accessing Apache Spark Web Interface.

To verify that Spark is correctly installed and the cluster is running, open a web browser and go to the Spark web UI at the following URL:

http://your-IP-address:8080

You should see the Spark master dashboard, which shows the master URL and any registered workers.
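Beyond the dashboard, a further check is to submit one of the example jobs bundled with Spark to the cluster. This is a sketch that assumes the /opt/spark install path from this tutorial; MASTER_URL is a placeholder variable and must be set to the URL shown at the top of the master web UI.

```shell
#!/usr/bin/env bash
# Install location and master URL; both can be overridden from the environment.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"
MASTER_URL="${MASTER_URL:-spark://your-IP-server:7077}"  # placeholder, replace with the real URL

if [ -x "$SPARK_HOME/bin/run-example" ]; then
  # SparkPi estimates pi; the argument 10 is the number of partitions to use.
  "$SPARK_HOME/bin/run-example" --master "$MASTER_URL" SparkPi 10
else
  echo "run-example not found under $SPARK_HOME" >&2
fi
```

If the job runs, it should appear under the applications listed in the master web UI.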

Congratulations! You have successfully installed Apache Spark. Thanks for using this tutorial for installing Apache Spark on your Fedora 38 system. For additional help or useful information, we recommend you check the official Spark website.