How To Install ELK Stack on AlmaLinux 10

The ELK Stack has become the cornerstone of modern log management and data analytics infrastructure. This powerful combination of Elasticsearch, Logstash, and Kibana transforms raw log data into actionable insights, enabling system administrators to monitor applications, troubleshoot issues, and maintain security across enterprise environments. AlmaLinux 10, with its enterprise-grade stability and Red Hat compatibility, provides an ideal foundation for deploying a robust ELK Stack implementation that can handle demanding production workloads.

Centralized logging solutions have evolved from nice-to-have tools into mission-critical infrastructure components. Organizations generate massive volumes of log data from servers, applications, network devices, and security systems. Without proper aggregation and analysis capabilities, this information remains scattered and underused. The ELK Stack addresses this challenge with a unified platform where logs flow from collection to visualization, helping teams detect anomalies, optimize performance, and respond to incidents faster.

Understanding the ELK Stack Architecture

The ELK Stack operates as an integrated ecosystem where each component plays a specialized role in the log management pipeline. Elasticsearch is the distributed search and analytics engine: it stores indexed log data as shards spread across one or more nodes, and replica shards provide high availability and scalability. Because it is built on Apache Lucene's inverted indexes, this document store handles full-text search well, so queries over large log volumes typically return quickly, and it supports complex aggregations and near real-time analytics.

Logstash functions as the data processing pipeline, ingesting logs from various sources including files, databases, message queues, and network protocols. It transforms raw log entries through parsing, filtering, and enrichment operations before forwarding processed data to Elasticsearch. Logstash’s plugin architecture supports hundreds of input, filter, and output plugins, making it incredibly versatile for diverse logging requirements.

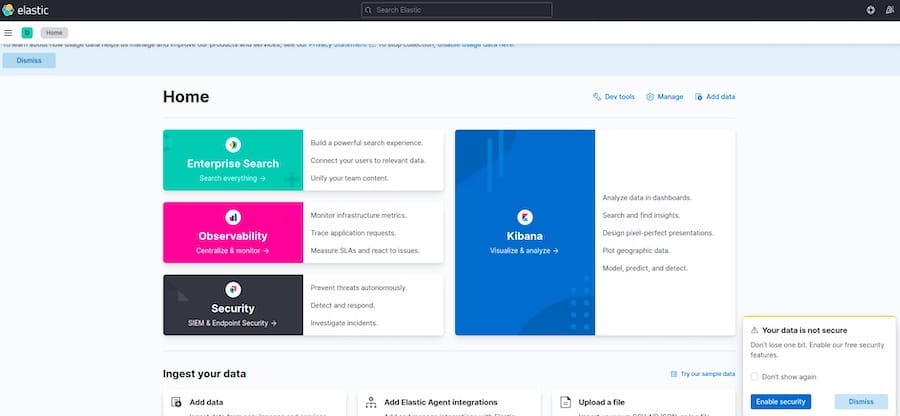

Kibana provides the web-based visualization and management interface that turns stored data into dashboards, charts, and reports. Users can create custom visualizations, build operational dashboards, and explore data interactively without writing queries by hand. Kibana also provides the interface for managing users, roles, and other stack settings, while authentication and authorization themselves are enforced by Elasticsearch.

The data flow begins when log sources generate events that are collected by lightweight shippers like Filebeat. These events travel through Logstash for processing and enrichment before being stored in Elasticsearch indices. Kibana then queries Elasticsearch to present data through intuitive visualizations, completing the journey from raw logs to actionable intelligence.

Prerequisites and System Requirements

Successful ELK Stack deployment requires careful attention to hardware and software prerequisites. The minimum hardware configuration includes 8GB of RAM, though 16GB or more is strongly recommended for production environments. Memory allocation directly impacts Elasticsearch performance, as the search engine relies heavily on heap memory and file system caching for optimal query response times.

CPU requirements scale with data volume and query complexity. A minimum of 4 CPU cores provides adequate performance for small to medium deployments, while high-throughput environments may require 8 or more cores per node. Multi-core processors enable Elasticsearch to parallelize search operations and handle concurrent queries efficiently.

Storage considerations are equally critical. Allocate at least 50GB of free disk space for initial deployment, with SSD storage preferred for production systems. Elasticsearch benefits significantly from fast I/O operations, particularly during indexing and search activities. Plan for additional storage based on log retention requirements and expected daily ingestion volumes.
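The sizing figures above can be checked with a small shell function before installing anything. This is a sketch rather than an official tool: the function name check_host_sizing is hypothetical, and the thresholds are the ones stated in this section (8 GB RAM minimum with 16 GB recommended, 4 CPU cores, 50 GB free disk).

```bash
# Hypothetical helper: compare host resources with the sizing guidance in this guide.
# Args: total RAM in GB, CPU core count, free disk in GB (whole numbers).
# Prints one line per check; returns 1 if any hard minimum is missed.
check_host_sizing() {
  local mem_gb=$1 cores=$2 disk_gb=$3 status=0

  if (( mem_gb < 8 )); then
    echo "FAIL memory: ${mem_gb}GB is below the 8GB minimum"; status=1
  elif (( mem_gb < 16 )); then
    echo "WARN memory: ${mem_gb}GB meets the minimum; 16GB or more is recommended for production"
  else
    echo "OK memory: ${mem_gb}GB"
  fi

  if (( cores < 4 )); then
    echo "FAIL cpu: ${cores} cores is below the 4-core minimum"; status=1
  else
    echo "OK cpu: ${cores} cores"
  fi

  if (( disk_gb < 50 )); then
    echo "FAIL disk: ${disk_gb}GB free is below the 50GB minimum"; status=1
  else
    echo "OK disk: ${disk_gb}GB free"
  fi

  return "$status"
}

# Example on a live host (not run here). MemTotal is in kB and slightly below installed RAM, hence the rounding:
#   check_host_sizing "$(awk '/^MemTotal/ {print int($2 / 1048576 + 0.5)}' /proc/meminfo)" \
#     "$(nproc)" "$(df --output=avail -BG /var/lib | tail -n 1 | tr -dc '0-9')"
```

Passing the numbers in as arguments keeps the checks separate from how they are measured, so the same function works for planning a host you have not built yet.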

Network connectivity requirements include dedicated ports for inter-component communication. Elasticsearch uses port 9200 for HTTP API access and 9300 for internal cluster communication. Kibana listens on port 5601 by default, Logstash receives Beats traffic on port 5044, and the Logstash monitoring API listens on port 9600 (bound to localhost by default). Configure firewall rules to allow traffic on these ports while restricting access to trusted networks only.

Software prerequisites begin with a fresh AlmaLinux 10 installation with root or sudo administrative privileges. The system should have current package repositories configured and basic network connectivity established. A separate Java Development Kit (JDK) is optional: the Elasticsearch and Logstash 8.x packages bundle their own JDK and use it by default, and Kibana runs on Node.js. Install OpenJDK 21 only if you need a system-wide Java runtime for other tooling.

Preparing AlmaLinux 10 Environment

Environment preparation establishes the foundation for a successful ELK Stack deployment. Begin by updating the system to ensure all packages reflect the latest security patches and bug fixes:

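```bash
sudo dnf update -y
sudo dnf install -y wget curl vim
```

Optionally, install OpenJDK 21. The Elasticsearch and Logstash packages ship with a bundled JDK that they use by default, and Kibana runs on Node.js, so a system JDK is only needed for other Java tooling on the host:

```bash
sudo dnf install -y java-21-openjdk java-21-openjdk-devel
```

Verify the Java installation and set the JAVA_HOME environment variable. The file /etc/environment expects plain KEY=value lines and is writable only by root, so append to it with sudo tee:

```bash
java -version
echo 'JAVA_HOME=/usr/lib/jvm/java-21-openjdk' | sudo tee -a /etc/environment
# /etc/environment is read at login; export the variable for the current shell too
export JAVA_HOME=/usr/lib/jvm/java-21-openjdk
```

Configure system limits to accommodate Elasticsearch’s resource requirements. Append file descriptor and memory-lock limits for the elasticsearch user to /etc/security/limits.conf: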

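```bash
# limits.conf is writable only by root, so append through sudo tee
echo 'elasticsearch soft nofile 65536' | sudo tee -a /etc/security/limits.conf
echo 'elasticsearch hard nofile 65536' | sudo tee -a /etc/security/limits.conf
echo 'elasticsearch soft memlock unlimited' | sudo tee -a /etc/security/limits.conf
echo 'elasticsearch hard memlock unlimited' | sudo tee -a /etc/security/limits.conf
# Services started by systemd ignore limits.conf; raise the memlock limit for the unit as well
sudo mkdir -p /etc/systemd/system/elasticsearch.service.d
printf '[Service]\nLimitMEMLOCK=infinity\n' | sudo tee /etc/systemd/system/elasticsearch.service.d/override.conf
```

The packaged Elasticsearch unit already sets its own open-file limit (LimitNOFILE=65535). The memlock override only matters if you enable bootstrap.memory_lock: true, and it takes effect after the systemctl daemon-reload in the Elasticsearch section.

Disable swap to prevent Elasticsearch performance degradation:

```bash
sudo swapoff -a
sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
```

Open the ELK Stack ports in the firewall. These rules accept connections from any source, so narrow them to trusted networks before exposing the host:

```bash
sudo firewall-cmd --permanent --add-port=9200/tcp
sudo firewall-cmd --permanent --add-port=5601/tcp
sudo firewall-cmd --permanent --add-port=5044/tcp
sudo firewall-cmd --reload
```

Installing and Configuring Elasticsearch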

Elasticsearch installation begins with adding the official Elastic repository to the system. Import the GPG signing key to verify package authenticity:

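```bash
sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch
```

Create the repository configuration file:

```bash
cat << 'EOF' | sudo tee /etc/yum.repos.d/elasticsearch.repo
[elasticsearch]
name=Elasticsearch repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=0
autorefresh=1
type=rpm-md
EOF
```

The repository is disabled by default (enabled=0), so each install command enables it explicitly. Install Elasticsearch using the DNF package manager:

```bash
sudo dnf install -y --enablerepo=elasticsearch elasticsearch
```

The installation process creates system users, directories, and initial configuration files. On 8.x it also auto-configures security: it enables authentication, generates TLS certificates for the HTTP and transport layers, and prints a generated password for the elastic superuser in the installer output. Post-installation, the Elasticsearch directory structure includes /etc/elasticsearch for configuration files (with the generated certificates in /etc/elasticsearch/certs), /var/lib/elasticsearch for data storage, and /var/log/elasticsearch for log files.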

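Configure Elasticsearch by editing /etc/elasticsearch/elasticsearch.yml. Set the cluster name and node name for identification, and run as a single node. The packaged file already defines path.data and path.logs, so change those lines in place rather than adding duplicates:

```yaml
cluster.name: elk-cluster
node.name: elk-node-1
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: localhost
http.port: 9200
discovery.type: single-node
```

The security auto-configuration section at the end of the file also contains a cluster.initial_master_nodes line; comment it out, because Elasticsearch refuses to start when that setting is combined with discovery.type: single-node. The same section sets http.host: 0.0.0.0, so the HTTP API listens on all interfaces despite network.host: localhost; change it to localhost if only local clients need access.

Configure Java heap memory allocation based on available system RAM. Instead of editing /etc/elasticsearch/jvm.options itself, create /etc/elasticsearch/jvm.options.d/heap.options with equal minimum and maximum heap sizes:

```
-Xms4g
-Xmx4g
```

Enable and start the Elasticsearch service: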

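```bash
sudo systemctl daemon-reload
sudo systemctl enable elasticsearch
sudo systemctl start elasticsearch
```

Verify successful installation by checking service status and API connectivity. Security is enabled by default, so the API answers on HTTPS with the auto-generated CA and requires credentials; curl prompts for the elastic password:

```bash
sudo systemctl status elasticsearch
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic https://localhost:9200/
```

Set passwords for the built-in users. On 8.x, elasticsearch-setup-passwords is deprecated and typically fails because the installer has already set the elastic password, so use elasticsearch-reset-password for each account instead; each run prints a new random password:

```bash
sudo /usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic
sudo /usr/share/elasticsearch/bin/elasticsearch-reset-password -u kibana_system
```

Record the generated passwords securely, as they will be required for configuring other stack components and user authentication.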
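Every Elasticsearch API call after this point needs the CA certificate and credentials. A sketch of a small wrapper that adds both, assuming the auto-configured CA path /etc/elasticsearch/certs/http_ca.crt and a password held in an ELASTIC_PASSWORD shell variable; the function name es_curl and the ES_URL, ES_CA_CERT and ES_USER variables are illustrative, not part of any Elastic tooling.

```bash
# Hypothetical wrapper around curl for the secured Elasticsearch HTTP API.
# ES_URL, ES_CA_CERT and ES_USER are illustrative settings with defaults matching this guide;
# the password is read from the ELASTIC_PASSWORD shell variable.
ES_URL=${ES_URL:-https://localhost:9200}
ES_CA_CERT=${ES_CA_CERT:-/etc/elasticsearch/certs/http_ca.crt}
ES_USER=${ES_USER:-elastic}

# Usage: es_curl METHOD PATH [extra curl arguments...]
es_curl() {
  local method=$1 path=$2
  shift 2
  if [[ -z ${ELASTIC_PASSWORD:-} ]]; then
    echo "es_curl: set ELASTIC_PASSWORD first" >&2
    return 2
  fi
  # Note: user:password on the command line may be visible to other local users via ps.
  curl --silent --show-error \
    --cacert "$ES_CA_CERT" \
    -u "${ES_USER}:${ELASTIC_PASSWORD}" \
    -X "$method" "${ES_URL}${path}" "$@"
}
```

For example, run read -rs ELASTIC_PASSWORD (the input is not echoed or saved to shell history), then es_curl GET '/_cluster/health?pretty'. Run it as a user that can read the CA file, or point ES_CA_CERT at a readable copy.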

Installing and Configuring Logstash

Logstash installation follows a similar repository-based approach. Install the package from the Elastic repository:

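```bash
sudo dnf install -y --enablerepo=elasticsearch logstash
```

Logstash operates through pipeline configurations that define data flow from inputs through filters to outputs. Create a basic configuration file /etc/logstash/conf.d/01-beats-input.conf for receiving data from Beats shippers:

```
input {
  beats {
    port => 5044
  }
}
```

Configure output to send processed data to Elasticsearch. Create /etc/logstash/conf.d/30-elasticsearch-output.conf. Elasticsearch 8.x requires HTTPS and credentials, and Filebeat 8.x loads data stream templates, so the index name carries no date suffix and events are sent with the create action. Replace the placeholder password; a dedicated user with write privileges is preferable to elastic:

```
output {
  elasticsearch {
    hosts => ["https://localhost:9200"]
    # Older versions of the output plugin call this option cacert
    ssl_certificate_authorities => ["/etc/logstash/certs/http_ca.crt"]
    user => "elastic"
    password => "your-elastic-password"
    manage_template => false
    index => "%{[@metadata][beat]}-%{[@metadata][version]}"
    action => "create"
  }
}
```

Add filtering rules for log processing in /etc/logstash/conf.d/10-syslog-filter.conf. Filebeat 7.x and later report the module name in [event][module] rather than [fileset][module], so the condition checks that field together with [fileset][name]:

```
filter {
  if [event][module] == "system" and [fileset][name] == "auth" {
    grok {
      match => { "message" => ["%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{IPORHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: %{DATA:[system][auth][ssh][event]} %{DATA:[system][auth][ssh][method]} for (invalid user )?%{DATA:[system][auth][user]} from %{IPORHOST:[system][auth][ssh][ip]} port %{NUMBER:[system][auth][ssh][port]} ssh2"] }
    }
  }
}
```

Copy the Elasticsearch CA certificate referenced in the output configuration to a path the logstash user can read, then test the configuration syntax before starting the service:

```bash
sudo install -D -m 644 /etc/elasticsearch/certs/http_ca.crt /etc/logstash/certs/http_ca.crt
sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash -t
```

Enable and start Logstash:

```bash
sudo systemctl enable logstash
sudo systemctl start logstash
sudo systemctl status logstash
```

Monitor Logstash logs to ensure successful startup and pipeline initialization:

```bash
sudo tail -f /var/log/logstash/logstash-plain.log
```

Installing and Configuring Kibana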

Kibana serves as the visualization frontend for the ELK Stack. Install Kibana from the Elastic repository:

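```bash
sudo dnf install -y --enablerepo=elasticsearch kibana
```

Configure Kibana by editing /etc/kibana/kibana.yml. Set the server host and the Elasticsearch connection parameters. Elasticsearch 8.x serves HTTPS with its own CA, so Kibana needs the CA certificate and the kibana_system password set earlier with elasticsearch-reset-password:

```yaml
server.port: 5601
server.host: "0.0.0.0"
server.name: "elk-kibana"
elasticsearch.hosts: ["https://localhost:9200"]
elasticsearch.username: "kibana_system"
elasticsearch.password: "your-kibana-password"
elasticsearch.ssl.certificateAuthorities: ["/etc/kibana/certs/http_ca.crt"]
```

Kibana 8.x no longer accepts the logging.dest setting and refuses to start if it is present. The packaged kibana.yml already writes logs to /var/log/kibana/kibana.log through its logging.appenders section, so leave that section in place.

Create the Kibana log directory if it is missing, set its ownership, and copy the Elasticsearch CA certificate referenced above to a path the kibana user can read:

```bash
sudo mkdir -p /var/log/kibana
sudo chown kibana:kibana /var/log/kibana
sudo install -D -m 644 /etc/elasticsearch/certs/http_ca.crt /etc/kibana/certs/http_ca.crt
```

Alternatively, leave the elasticsearch.* settings out of kibana.yml and enroll Kibana with a token instead. Generate one with:

```bash
sudo /usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana
```

Enable and start the Kibana service:

```bash
sudo systemctl enable kibana
sudo systemctl start kibana
sudo systemctl status kibana
```

Access the Kibana web interface by navigating to http://your-server-ip:5601 in a web browser and log in as the elastic user. If you chose the enrollment route, the setup wizard first asks for the token and for a verification code, which the kibana-verification-code tool in /usr/share/kibana/bin prints.

Create initial data views (called index patterns before Kibana 8.0) to begin data visualization. Navigate to Stack Management > Data Views and create views matching your log data, such as filebeat-* for Filebeat data or logstash-* for custom Logstash processing.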
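Data views can also be created from the command line through Kibana's data views API (POST /api/data_views/data_view in Kibana 8.x), which helps when provisioning several hosts. The sketch below only builds the request body; the function name data_view_body and the @timestamp default are illustrative assumptions, and the request itself is shown as a comment rather than executed.

```bash
# Hypothetical helper: print the JSON body for Kibana's create-data-view API.
# Args: index pattern (for example filebeat-*), optional time field (defaults to @timestamp).
# No JSON escaping is done, so this assumes plain patterns without quotes or backslashes.
data_view_body() {
  local pattern=$1 time_field=${2:-@timestamp}
  printf '{"data_view":{"title":"%s","timeFieldName":"%s"}}\n' "$pattern" "$time_field"
}

# Example request (not run here); Kibana rejects API writes without the kbn-xsrf header:
#   data_view_body 'filebeat-*' | curl -u "elastic:$ELASTIC_PASSWORD" -X POST \
#     'http://localhost:5601/api/data_views/data_view' \
#     -H 'kbn-xsrf: true' -H 'Content-Type: application/json' --data-binary @-
```

Keeping body construction in its own function makes it easy to check the JSON before sending it.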

Implementing Security Best Practices

Security implementation protects sensitive log data and prevents unauthorized access to the ELK Stack infrastructure. On Elasticsearch 8.x, the X-Pack security features (authentication, authorization, and TLS encryption) are enabled by default during installation; the steps below extend and tighten that configuration.

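Authentication and Authorization form the foundation of ELK Stack security. Configure user accounts with appropriate roles and permissions to implement role-based access control (RBAC). Create dedicated users for different functions rather than relying on built-in administrative accounts. The commands below add users to the file realm of this node; without -p, the tool prompts for each password, which keeps it out of your shell history. The viewer role grants read-only access to Kibana and data indices (the older kibana_user role is deprecated):

```bash
sudo /usr/share/elasticsearch/bin/elasticsearch-users useradd log_reader -r viewer
sudo /usr/share/elasticsearch/bin/elasticsearch-users useradd log_admin -r kibana_admin
```

Encryption Configuration secures data in transit between ELK Stack components. The 8.x installer already generated certificates for the HTTP and transport layers, so generating your own CA and node certificate is only needed when you want to manage them yourself, for example to add nodes signed by a shared CA:

```bash
sudo /usr/share/elasticsearch/bin/elasticsearch-certutil ca --out /etc/elasticsearch/certs/elastic-stack-ca.p12
sudo /usr/share/elasticsearch/bin/elasticsearch-certutil cert --ca /etc/elasticsearch/certs/elastic-stack-ca.p12 --out /etc/elasticsearch/certs/elastic-certificates.p12
sudo chown root:elasticsearch /etc/elasticsearch/certs/elastic-certificates.p12
sudo chmod 660 /etc/elasticsearch/certs/elastic-certificates.p12
```

If you protect the certificate with a password, store it with elasticsearch-keystore add xpack.security.transport.ssl.keystore.secure_password (and the matching truststore setting). Then point the transport layer at the new file in elasticsearch.yml. The installer already wrote an xpack.security.transport.ssl section, so edit those existing settings instead of adding a second copy:

```yaml
xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
xpack.security.transport.ssl.keystore.path: certs/elastic-certificates.p12
xpack.security.transport.ssl.truststore.path: certs/elastic-certificates.p12
```

Network Security restricts access to ELK Stack components. Configure firewall rules to allow connections only from trusted sources: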

```bash
# Drop the open-to-all rules from the preparation step; otherwise these source-restricted rules add no protection
sudo firewall-cmd --permanent --remove-port=9200/tcp
sudo firewall-cmd --permanent --remove-port=5601/tcp
sudo firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='10.0.0.0/8' port protocol='tcp' port='9200' accept"
sudo firewall-cmd --permanent --add-rich-rule="rule family='ipv4' source address='192.168.0.0/16' port protocol='tcp' port='5601' accept"
sudo firewall-cmd --reload
```

Access Control mechanisms prevent unauthorized administrative access. Set strong passwords following enterprise security policies: at least 12 characters, mixing uppercase and lowercase letters, numbers, and special characters. Implement password rotation policies requiring updates every 90 days.
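The password rules above are easy to check mechanically before a password is set. A minimal sketch, assuming exactly the policy stated here (12 or more characters with uppercase, lowercase, digit, and special characters); the function name check_password_policy is hypothetical, and it only checks a candidate locally without configuring anything in Elasticsearch.

```bash
# Hypothetical helper: check a candidate password against the policy described above
# (at least 12 characters, with uppercase, lowercase, digit and special characters).
# Returns 0 if compliant; otherwise prints each unmet rule and returns 1.
check_password_policy() {
  local pw=$1 ok=0
  (( ${#pw} >= 12 ))          || { echo "too short (minimum 12 characters)"; ok=1; }
  [[ $pw =~ [[:upper:]] ]]    || { echo "missing an uppercase letter"; ok=1; }
  [[ $pw =~ [[:lower:]] ]]    || { echo "missing a lowercase letter"; ok=1; }
  [[ $pw =~ [[:digit:]] ]]    || { echo "missing a digit"; ok=1; }
  [[ $pw =~ [^[:alnum:]] ]]   || { echo "missing a special character"; ok=1; }
  return "$ok"
}
```

Read the candidate with read -rs pw so it is neither echoed nor stored in shell history, then run check_password_policy "$pw".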

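Regular security audits help maintain protection levels. Enable Elasticsearch audit logging to track user activities and system access patterns; audit logging is not part of the free Basic license and requires an appropriate subscription or a trial license. Configure it in /etc/elasticsearch/elasticsearch.yml. On 8.x, audit events are written to a JSON log file in /var/log/elasticsearch, and the older xpack.security.audit.outputs setting has been removed, so do not include it:

```yaml
xpack.security.audit.enabled: true
xpack.security.audit.logfile.events.include: [access_granted, access_denied, authentication_failed]
```

Installing and Configuring Filebeat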

Filebeat provides lightweight log shipping capabilities that complement the ELK Stack by efficiently collecting and forwarding log files from various sources. This component serves as the primary data collection agent in most ELK deployments, offering better performance and resource utilization compared to traditional log forwarding methods.

Install Filebeat from the Elastic repository:

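```bash
sudo dnf install -y --enablerepo=elasticsearch filebeat
```

Configure Filebeat by editing /etc/filebeat/filebeat.yml. Define log file paths, enable module loading, and comment out the default output.elasticsearch section, because Filebeat allows only one output. Filebeat 8.x deprecates the log input in favor of filestream, which needs a unique id; /var/log/messages and /var/log/secure are left to the system module so they are not shipped twice:

```yaml
filebeat.inputs:
  - type: filestream
    id: var-log-files
    enabled: true
    paths:
      - /var/log/*.log

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

output.logstash:
  hosts: ["localhost:5044"]

processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
```

Enable the system module, whose syslog and auth filesets read /var/log/messages and /var/log/secure. Filebeat has no module named security; the auditd module is the closest match if you also want audit logs. In Filebeat 8.x the filesets in /etc/filebeat/modules.d/system.yml may ship disabled, so check that syslog and auth are set to enabled: true:

```bash
sudo filebeat modules enable system
```

Test the configuration and the Logstash connection, then load the index templates. Template loading talks to Elasticsearch directly, so this one command disables the Logstash output and passes the Elasticsearch URL, credentials, and CA certificate with -E:

```bash
sudo filebeat test config
sudo filebeat test output
sudo filebeat setup --index-management -E output.logstash.enabled=false \
  -E 'output.elasticsearch.hosts=["https://localhost:9200"]' \
  -E output.elasticsearch.username=elastic -E output.elasticsearch.password=your-elastic-password \
  -E 'output.elasticsearch.ssl.certificate_authorities=["/etc/elasticsearch/certs/http_ca.crt"]'
```

Enable and start Filebeat service:

```bash
sudo systemctl enable filebeat
sudo systemctl start filebeat
sudo systemctl status filebeat
```

Monitor the Filebeat logs to confirm successful log shipping. The packaged systemd unit sends them to the journal by default rather than to a file under /var/log/filebeat:

```bash
sudo journalctl -u filebeat -f
```

Verify data flow by checking Elasticsearch indices for incoming log data:

```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_cat/indices?v"
```

Testing and Verification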

Comprehensive testing ensures all ELK Stack components function correctly and data flows through the complete pipeline without errors. Component Testing begins with individual service verification.

Verify Elasticsearch cluster health and functionality:

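```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_cluster/health?pretty"
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_cat/nodes?v"
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_cat/indices?v"
```

A green status means every primary and replica shard is allocated. On a single-node cluster, expect yellow for any index configured with replicas, because Elasticsearch never places a replica on the same node as its primary. Node listings should show all configured nodes as active participants in the cluster.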
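Scripts and monitoring checks usually need the health status as an exit code rather than as JSON. A minimal sketch, assuming the input is a response from the _cluster/health endpoint above; the function name cluster_status_code is hypothetical, and it uses sed so it does not depend on jq being installed.

```bash
# Hypothetical helper: read a _cluster/health JSON response on stdin, print its status,
# and map it to an exit code (0 = green, 1 = yellow, 2 = red or unknown).
# Assumes the response contains a single "status" field, as _cluster/health does.
cluster_status_code() {
  local status
  status=$(sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1)
  echo "${status:-unknown}"
  case $status in
    green)  return 0 ;;
    yellow) return 1 ;;
    *)      return 2 ;;
  esac
}
```

On the ELK host, pipe the health response into it, for example sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic https://localhost:9200/_cluster/health | cluster_status_code. On a single-node cluster, treat exit code 1 as expected for the reason given above.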

Test Logstash pipeline functionality by examining processing statistics:

```bash
curl -X GET "localhost:9600/_node/stats/pipelines?pretty"
```

The statistics report, per pipeline and per plugin, event counts (in, filtered, out) and the time spent processing. Output counts that keep pace with input counts indicate proper pipeline operation.

Confirm Kibana web interface accessibility and Elasticsearch connectivity. Logging into Kibana successfully confirms that Kibana can reach Elasticsearch. If monitoring collection is set up (for example with Metricbeat), Stack Monitoring also shows component health dashboards.

Data Flow Verification tracks log entries from source to visualization. Generate test log entries:

```bash
# /var/log is writable only by root, so append through sudo tee
echo "Test log entry $(date)" | sudo tee -a /var/log/test.log
logger "Test syslog message from ELK stack verification"
```

Monitor the Filebeat logs, which the packaged service writes to the systemd journal, for successful harvesting:

```bash
sudo journalctl -u filebeat | grep -i harvester
```

Check Logstash processing logs for input reception and output delivery:

```bash
sudo grep "beats" /var/log/logstash/logstash-plain.log
```

Verify data storage in Elasticsearch by searching recent Filebeat data:

```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/filebeat-*/_search?q=message:test&size=5&pretty"
```

End-to-End Testing validates the complete log management workflow. Create test scenarios that simulate real-world logging patterns. Generate various log types, including system logs, application logs, and security events. Monitor processing latency from log generation to Kibana visualization availability.
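One way to measure latency from log generation to searchability is to write a unique marker and poll until a search finds it. The sketch below keeps the search step pluggable so the polling logic can be tried on its own; the function name wait_for_marker, the one-second polling interval, and the example check command in the comments (which assumes the CA path and filebeat-* data used in this guide) are illustrative.

```bash
# Hypothetical helper: poll a check command until it reports MARKER as found, or give up.
# Usage: wait_for_marker MARKER TIMEOUT_SECONDS CHECK_CMD [ARGS...]
# CHECK_CMD runs as "CHECK_CMD [ARGS...] MARKER" and must exit 0 once the marker is searchable.
# Prints the elapsed whole seconds on success; returns 1 on timeout.
wait_for_marker() {
  local marker=$1 timeout=$2
  shift 2
  local start=$SECONDS
  while (( SECONDS - start < timeout )); do
    if "$@" "$marker"; then
      echo "$(( SECONDS - start ))"
      return 0
    fi
    sleep 1
  done
  return 1
}

# Example on the ELK host (not run here). The marker has no hyphens, so the
# analyzer keeps it as a single searchable token.
#   es_has_marker() {
#     sudo curl -s --cacert /etc/elasticsearch/certs/http_ca.crt -u "elastic:$ELASTIC_PASSWORD" \
#       "https://localhost:9200/filebeat-*/_count?q=message:$1" | grep -q '"count":[1-9]'
#   }
#   marker="elklatency$(date +%s)"
#   echo "$marker" | sudo tee -a /var/log/test.log >/dev/null
#   wait_for_marker "$marker" 120 es_has_marker
```

The measured time includes Filebeat's scan interval, Logstash processing, and the Elasticsearch refresh interval, so expect a few seconds even on an idle system.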

Access Kibana and create data views matching your test data. Build simple visualizations to confirm data accessibility and proper field parsing. Successful visualization creation indicates complete pipeline functionality.

Common Troubleshooting Issues

ELK Stack deployments may encounter various challenges during installation and operation. Understanding common issues and their solutions accelerates problem resolution and minimizes downtime.

Elasticsearch Issues frequently involve memory and configuration problems. Insufficient heap memory causes frequent garbage collection and poor performance. Monitor heap utilization:

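```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_nodes/stats/jvm?pretty"
```

If heap usage (the heap_used_percent value) consistently exceeds 75%, increase the heap size in a file under /etc/elasticsearch/jvm.options.d/. Never allocate more than 50% of system RAM to the Elasticsearch heap, because the remaining memory supports file system caching, and keep the heap below the compressed object pointer threshold, which is near 32 GB.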
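Elasticsearch 8.x sizes the heap automatically when no -Xms/-Xmx options are set; if you size it by hand, the two rules above reduce to simple arithmetic. A sketch with a hypothetical function name; the 30 GB cap is a conservative assumption, since the exact compressed-oops threshold varies by JVM and platform (the Elasticsearch startup log reports whether compressed ordinary object pointers are in use).

```bash
# Hypothetical helper: suggest heap options from total RAM in GB using the rules above:
# at most half of system RAM, capped at 30 GB as a conservative assumption so the JVM
# stays under the compressed-oops threshold. Prints matching -Xms and -Xmx lines.
suggest_heap_options() {
  local ram_gb=$1
  local heap_gb=$(( ram_gb / 2 ))
  if (( heap_gb > 30 )); then heap_gb=30; fi
  if (( heap_gb < 1 )); then heap_gb=1; fi
  printf -- '-Xms%dg\n-Xmx%dg\n' "$heap_gb" "$heap_gb"
}

# Example (not run here): write the suggestion for a 16 GB host
#   suggest_heap_options 16 | sudo tee /etc/elasticsearch/jvm.options.d/heap.options
```

Setting -Xms equal to -Xmx avoids heap resizing pauses at runtime, which is why the helper always prints the same value for both.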

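Cluster health issues often result from misconfigured discovery settings or network connectivity problems. Ask Elasticsearch to explain why a shard is unassigned:

```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_cluster/allocation/explain?pretty"
```

Unassigned shards typically indicate node connectivity issues or insufficient cluster resources; on a single-node cluster, unassigned replica shards are expected. The API returns an error when no shard is currently unassigned. Review network configuration and ensure all nodes can communicate on required ports.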

Index mapping conflicts occur when different data types are assigned to the same field across documents. Examine mapping issues:

```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/your-index/_mapping?pretty"
```

Resolve mapping conflicts by creating explicit index templates or transforming data during Logstash processing.

Logstash Problems commonly involve pipeline configuration errors and resource bottlenecks. Configuration syntax errors prevent pipeline startup. Test configurations before deployment:

```bash
sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash -t
```

Detailed error messages identify the specific configuration issues that need correcting.

Performance bottlenecks manifest as increased processing latency and growing input queues. Monitor pipeline statistics:

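```bash
curl -X GET "localhost:9600/_node/stats/pipelines?pretty"
```

High queue utilization, or input counts that keep growing faster than output counts, indicates insufficient processing capacity. Increase worker threads (pipeline.workers in /etc/logstash/logstash.yml, which defaults to the number of CPU cores) or optimize filter configurations to improve throughput.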

Input/output connection issues disrupt data flow. Verify network connectivity between components and check authentication credentials. Review Logstash logs for connection error details.
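A quick way to separate network problems from credential problems is to check whether each hop's TCP port accepts connections at all. This sketch relies on bash's /dev/tcp redirection (a bash feature, not a real file) and the coreutils timeout command; the function name port_open and the 3-second default are illustrative.

```bash
# Hypothetical helper: report whether a TCP port accepts connections.
# Usage: port_open HOST PORT [TIMEOUT_SECONDS]
# Returns 0 if a connection succeeds, 1 otherwise.
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # The inner bash receives host and port as $0 and $1 and opens fd 3 to /dev/tcp/HOST/PORT
  if timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
    echo "open: ${host}:${port}"
  else
    echo "closed or unreachable: ${host}:${port}"
    return 1
  fi
}

# Example checks for the hops used in this guide (not run here):
#   port_open localhost 5044   # Filebeat -> Logstash Beats input
#   port_open localhost 9200   # Logstash and Kibana -> Elasticsearch
#   port_open localhost 5601   # browser -> Kibana
```

If the port is open but the connection still fails, the problem is more likely TLS or authentication, so check the credentials and CA settings next.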

Kibana Connection Issues prevent web interface access and data visualization. Authentication failures often result from incorrect username/password combinations or expired tokens. Reset passwords using Elasticsearch user management tools:

```bash
sudo /usr/share/elasticsearch/bin/elasticsearch-reset-password -u kibana_system
```

After resetting, put the new password in elasticsearch.password in /etc/kibana/kibana.yml and restart Kibana.

Data view creation failures indicate Elasticsearch connectivity problems or missing indices. Verify Elasticsearch accessibility from Kibana and confirm index existence.

Dashboard loading errors suggest data parsing issues or field mapping conflicts. Check browser developer console for detailed error messages and review index field mappings.

Filebeat Connectivity problems interrupt log shipping and data collection. Service startup failures usually result from configuration file syntax errors. Validate configuration syntax:

```bash
sudo filebeat test config
```

Network connectivity issues prevent log delivery to Logstash. Test Logstash connectivity:

```bash
sudo filebeat test output
```

Permission errors occur when Filebeat cannot access log files. Verify file permissions and user context:

```bash
sudo ls -la /var/log/
sudo systemctl status filebeat
```

Log harvesting problems indicate incorrect file path specifications or file rotation conflicts. Review the Filebeat logs (sudo journalctl -u filebeat) for detailed error information and adjust path configurations accordingly.
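Path problems are easier to spot when you expand each configured glob yourself and check what it matches. A sketch of such a check; the function name check_log_paths is hypothetical, it should be run as root to mirror the packaged Filebeat service (which runs as root), and Filebeat's own glob handling may differ in edge cases.

```bash
# Hypothetical helper: expand each glob as written in a paths: entry and report
# which files exist and are readable by the current user.
# Returns 1 if any pattern matches nothing or any matched file is unreadable.
check_log_paths() {
  local pattern file matched status=0
  for pattern in "$@"; do
    matched=0
    for file in $pattern; do        # unquoted on purpose so the shell expands the glob
      [[ -e $file ]] || continue    # an unmatched glob stays literal; skip it
      matched=1
      if [[ -r $file ]]; then
        echo "readable: $file"
      else
        echo "unreadable: $file"; status=1
      fi
    done
    if (( matched == 0 )); then
      echo "no match: $pattern"; status=1
    fi
  done
  return "$status"
}
```

For example, sudo bash -c "$(declare -f check_log_paths); check_log_paths '/var/log/*.log'" checks the filestream path configured earlier with root's permissions.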

Performance Optimization and Maintenance

Ongoing optimization ensures ELK Stack deployments maintain optimal performance as data volumes and query complexity increase. Elasticsearch Optimization focuses on index lifecycle management and resource allocation.

Index lifecycle management (ILM) policies automatically manage index aging and storage optimization:

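```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic -X PUT "https://localhost:9200/_ilm/policy/elk-policy" -H 'Content-Type: application/json' -d'
{
  "policy": {
    "phases": {
      "hot": {
        "actions": {
          "rollover": {
            "max_size": "50gb",
            "max_age": "7d"
          }
        }
      },
      "warm": {
        "min_age": "7d",
        "actions": {
          "shrink": {
            "number_of_shards": 1
          }
        }
      },
      "delete": {
        "min_age": "90d",
        "actions": {
          "delete": {}
        }
      }
    }
  }
}
'
```

The delete phase needs an explicit delete action; without one, indices that reach 90 days are not removed. The policy only applies to indices whose template sets index.lifecycle.name to elk-policy.

Shard allocation strategies balance storage and query performance. Avoid over-sharding by calculating appropriate shard sizes based on data volume and query patterns. Target shard sizes between 10 GB and 50 GB for optimal performance.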
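As a worked example of the sizing rule: an index expected to hold 120 GB with a 40 GB target per shard needs ceil(120 / 40) = 3 primary shards. The helper below performs the same ceiling division; its name and the 40 GB default are illustrative assumptions inside the 10-50 GB range, and replica shards are not counted.

```bash
# Hypothetical helper: estimate the primary shard count that keeps each shard near a target size.
# Args: expected index size in GB, target shard size in GB (default 40, an assumption inside the 10-50 GB range).
# Ceiling division: shards = ceil(size / target), with a minimum of one shard. Replicas are not counted.
primary_shards_for() {
  local size_gb=$1 target_gb=${2:-40}
  local shards=$(( (size_gb + target_gb - 1) / target_gb ))
  if (( shards < 1 )); then shards=1; fi
  echo "$shards"
}
```

Adding target_gb - 1 before the integer division rounds up, so 130 GB becomes 4 shards of about 33 GB rather than 3 oversized ones.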

Memory and storage optimization includes proper heap sizing, SSD storage utilization, and efficient query patterns. Monitor query performance and identify expensive operations:

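```bash
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic "https://localhost:9200/_nodes/stats/indices/search?pretty"
```

Dividing query_time_in_millis by query_total gives the average query latency; to find individual expensive queries, enable the search slow log for the affected indices.

System Resource Management involves comprehensive monitoring of CPU, memory, and network utilization. Implement monitoring solutions that track resource consumption trends and identify capacity planning requirements.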

CPU utilization should remain below 80% during normal operations. Higher utilization may indicate inadequate hardware resources or inefficient query patterns requiring optimization.
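To check the 80% guideline without extra tools, overall CPU utilization can be computed from two samples of the aggregate cpu line in /proc/stat (documented in proc(5)): busy time is the sum of the counters minus idle and iowait. A sketch with a hypothetical function name; it assumes the standard field order user, nice, system, idle, iowait, irq, softirq, steal.

```bash
# Hypothetical helper: compute overall CPU busy percentage from two samples of the
# aggregate "cpu" line in /proc/stat (fields: user nice system idle iowait irq softirq steal ...).
# busy% = 100 * (delta_total - delta_idle) / delta_total, where idle includes iowait.
cpu_busy_percent() {
  local -a a b
  read -r -a a <<< "$1"
  read -r -a b <<< "$2"
  local i total_a=0 total_b=0
  # Sum the first eight counters (user..steal); guest time is already included in user/nice.
  for i in 1 2 3 4 5 6 7 8; do
    total_a=$(( total_a + ${a[i]:-0} ))
    total_b=$(( total_b + ${b[i]:-0} ))
  done
  local idle_a=$(( a[4] + a[5] )) idle_b=$(( b[4] + b[5] ))
  local dt=$(( total_b - total_a )) di=$(( idle_b - idle_a ))
  if (( dt <= 0 )); then echo 0; return; fi
  echo $(( 100 * (dt - di) / dt ))
}

# Example on a live host (not run here):
#   s1=$(grep '^cpu ' /proc/stat); sleep 5; s2=$(grep '^cpu ' /proc/stat)
#   cpu_busy_percent "$s1" "$s2"
```

Two samples are needed because the counters are cumulative since boot; a single reading only gives the long-term average.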

Memory management includes both JVM heap monitoring and file system cache utilization. Elasticsearch relies heavily on file system caching for query performance. Maintain adequate free system memory to support effective caching.

Network bandwidth considerations become critical in multi-node deployments with high data ingestion rates. Monitor network utilization and implement dedicated network infrastructure for ELK Stack communication when necessary.

Regular Maintenance Tasks ensure long-term system stability and security. Establish log retention policies that balance storage costs with operational requirements. Implement automated cleanup procedures for aged indices and archived data.

Security updates and patching require regular attention to maintain protection against evolving threats. Subscribe to Elastic security notifications and establish testing procedures for update deployment. Schedule maintenance windows for applying critical security patches.

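Backup and disaster recovery planning protects against data loss and system failures. Elasticsearch only accepts shared file system repositories under paths listed in path.repo, so first add path.repo: ["/var/backups/elasticsearch"] to /etc/elasticsearch/elasticsearch.yml and restart Elasticsearch. Then create the directory, register the repository, and take snapshots into it:

```bash
sudo mkdir -p /var/backups/elasticsearch
sudo chown elasticsearch:elasticsearch /var/backups/elasticsearch
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic -X PUT "https://localhost:9200/_snapshot/backup_repository" -H 'Content-Type: application/json' -d'
{
  "type": "fs",
  "settings": {
    "location": "/var/backups/elasticsearch"
  }
}
'
# Take a snapshot into the repository (snapshot-1 is an example name)
sudo curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic -X PUT "https://localhost:9200/_snapshot/backup_repository/snapshot-1?wait_for_completion=true"
```

Scaling Considerations address growing data volumes and user requirements. Horizontal scaling involves adding Elasticsearch nodes to distribute storage and processing loads. Plan node addition procedures and test cluster expansion in development environments.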

Load balancing strategies distribute query loads across multiple Kibana instances for high-availability deployments. Implement reverse proxy solutions to manage user connections and provide failover capabilities.

High availability configuration includes node redundancy, data replication, and automated failover mechanisms. Design cluster architectures that survive individual node failures without service interruption.
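Master elections in Elasticsearch need a majority of the voting master-eligible nodes, which is why three master-eligible nodes are the usual minimum for surviving one node failure. The arithmetic, as a small helper with a hypothetical name:

```bash
# Hypothetical helper: for N master-eligible nodes, print the voting majority and how many
# of those nodes can fail while the cluster can still elect a master.
# majority = floor(N/2) + 1; tolerated failures = N - majority = floor((N-1)/2).
master_quorum() {
  local n=$1
  local majority=$(( n / 2 + 1 ))
  echo "master-eligible=$n majority=$majority tolerated_failures=$(( n - majority ))"
}
```

Adding a fourth master-eligible node does not raise the number of tolerated failures above one, so odd counts (3, 5) are the usual choice.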

Congratulations! You have successfully installed the ELK Stack. Thanks for using this tutorial for installing the ELK Stack on your AlmaLinux OS 10 system. For additional help or useful information, we recommend you check the official Elastic website.