How To Install ELK Stack on Linux Mint 22

In this tutorial, we will show you how to install the ELK Stack (Elasticsearch, Logstash, and Kibana) on Linux Mint 22. The ELK Stack is widely used by system administrators and developers to collect, process, and visualize logs, which makes it easier to troubleshoot issues and gain insight from system data. On Linux Mint, it gives you a central place to monitor and analyze the logs your system produces.

Prerequisites

Before we begin the installation process, ensure that your system meets the following requirements:

- Linux Mint 22 (based on Ubuntu 24.04 LTS)

- Minimum 4GB RAM (8GB recommended for production use)

- At least 2 CPU cores

- Sudo privileges on your system

Additionally, this guide uses the following software:

- OpenJDK (optional; the Elasticsearch and Logstash packages ship with a bundled Java runtime)

- Nginx web server (used as a reverse proxy for Kibana)

Understanding ELK Components

The ELK stack consists of three main components, each serving a specific purpose in the log management process:

Elasticsearch

Elasticsearch is a distributed, RESTful search and analytics engine. It serves as the central component of the ELK stack, storing and indexing the log data for quick retrieval and analysis.

Logstash

Logstash is a data processing pipeline that ingests, transforms, and forwards data to Elasticsearch. It can handle various input sources and apply filters to structure and enrich the data before sending it to Elasticsearch.

Kibana

Kibana is a web-based visualization platform that works with Elasticsearch. It provides a user-friendly interface for exploring, visualizing, and analyzing the data stored in Elasticsearch.

System Preparation

Before installing the ELK stack, we need to prepare our Linux Mint 22 system. Follow these steps to ensure a smooth installation process:

Update System Packages

First, update your system’s package list and upgrade existing packages:

sudo apt update

sudo apt upgrade -y

Install Dependencies

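Install Nginx and, optionally, OpenJDK. Elasticsearch and Logstash use their bundled Java runtime, so default-jdk is only needed if you want a system-wide Java installation for other tools:

sudo apt install default-jdk nginx -y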

Configure Firewall Settings

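If you have UFW (Uncomplicated Firewall) enabled, allow SSH, HTTP for the Nginx proxy in front of Kibana, and the Beats port used by Logstash (needed only if Beats will ship logs from other machines):

sudo ufw allow 22/tcp

sudo ufw allow 80/tcp

sudo ufw allow 5044/tcp

Elasticsearch (port 9200) and Kibana (port 5601) are configured below to listen only on localhost, so they do not need firewall rules. Opening 9200 to the network would expose an Elasticsearch API that has no authentication in this setup.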

Installing Elasticsearch

Now that our system is prepared, let’s start by installing Elasticsearch:

Add Elasticsearch Repository

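First, import the Elasticsearch public GPG key into a dedicated keyring file (apt-key is deprecated on Ubuntu 24.04-based systems such as Linux Mint 22):

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo gpg --dearmor -o /usr/share/keyrings/elasticsearch-keyring.gpg

Add the Elasticsearch 7.x repository to your system and tell apt to verify it with that keyring:

echo "deb [signed-by=/usr/share/keyrings/elasticsearch-keyring.gpg] https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-7.x.list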

Install Elasticsearch

Update the package list and install Elasticsearch:

sudo apt update

sudo apt install elasticsearch

Configure Elasticsearch

Edit the Elasticsearch configuration file:

sudo nano /etc/elasticsearch/elasticsearch.yml

Uncomment and modify the following lines:

network.host: localhost

http.port: 9200
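Because elasticsearch.yml is mostly comments, it is easy to edit a line but leave it commented out. A quick way to confirm which settings are actually active is to filter out comment and blank lines. The sketch below wraps that filter in a small shell function; `active_settings` is a hypothetical helper name, not something shipped with Elasticsearch.

```shell
#!/bin/sh
# Hypothetical helper (not part of Elasticsearch): read a YAML config on
# stdin and print only the active lines, i.e. drop blank lines and lines
# whose first non-space character is '#'.
active_settings() {
  grep -Ev '^[[:space:]]*(#|$)'
}
```

/etc/elasticsearch is normally readable only by root and the elasticsearch group, so read the file with sudo and pipe it in:

sudo cat /etc/elasticsearch/elasticsearch.yml | active_settings

The output should include network.host: localhost and http.port: 9200. If either is missing, that line is probably still commented out.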

Start and Enable Elasticsearch

Start the Elasticsearch service and enable it to run at boot:

sudo systemctl start elasticsearch

sudo systemctl enable elasticsearch
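Elasticsearch usually needs some time after systemctl start before it answers HTTP requests, so checks run immediately afterwards can fail. One way to handle this is a small retry loop. The sketch below assumes a POSIX shell; `wait_for` is a hypothetical helper name, not a standard command.

```shell
#!/bin/sh
# Hypothetical helper: run a command repeatedly until it succeeds.
# Usage: wait_for ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as COMMAND succeeds, 1 if every attempt fails.
wait_for() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Do not sleep after the final attempt.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, to wait up to about a minute for Elasticsearch:

wait_for 30 2 curl -fsS -o /dev/null http://localhost:9200 && echo "Elasticsearch is up"

curl's -f flag makes HTTP error responses count as failures, so the loop only ends early once Elasticsearch returns a successful response.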

Installing Logstash

With Elasticsearch up and running, let’s move on to installing Logstash:

Install Logstash

Install Logstash using the following command:

sudo apt install logstash

Configure Logstash

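Create a pipeline configuration that receives events from Beats on port 5044 and sends them to Elasticsearch. Note that Logstash combines every .conf file in /etc/logstash/conf.d into a single pipeline: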

sudo nano /etc/logstash/conf.d/01-beats-input.conf

Add the following content:

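input {
  beats {
    port => 5044
  }
}

output {
  # Route only events that came from Beats. Logstash merges every file in
  # conf.d into one pipeline, so events from other inputs would land here too.
  if [@metadata][beat] {
    elasticsearch {
      hosts => ["localhost:9200"]
      index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    }
  }
}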
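The index setting above names each index after the Beat that sent the event, the Beat's version, and the event date. Logstash formats %{+YYYY.MM.dd} from the event's @timestamp in UTC, so you get one index per Beat, version, and UTC day. If you later want to query a specific index by name, a helper like the hypothetical `beat_index` below reproduces that naming; it is an illustration, not part of Logstash.

```shell
#!/bin/sh
# Hypothetical helper: build the index name produced by
#   index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
# Arguments: Beat name, Beat version, UTC date as YYYY-MM-DD.
beat_index() {
  # Turn YYYY-MM-DD into YYYY.MM.dd to match the Logstash date pattern.
  day=$(printf '%s' "$3" | sed 's/-/./g')
  printf '%s-%s-%s\n' "$1" "$2" "$day"
}
```

For example (filebeat and 7.17.0 are placeholder values for whichever Beat and version you install), to count today's documents from that Beat:

curl -s "localhost:9200/$(beat_index filebeat 7.17.0 "$(date -u +%F)")/_count"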

Start and Enable Logstash

Start the Logstash service and enable it to run at boot:

sudo systemctl start logstash

sudo systemctl enable logstash
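Logstash can take a minute or more to start, and the Beats input only accepts connections once the pipeline is running. A quick check is to look for a listening socket on TCP port 5044 with ss from iproute2. The sketch below parses `ss -ltnH` output (no header line; the fourth column is the local address and port); `port_listening` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `ss -ltnH` output on stdin and succeed if some
# socket is listening on the given TCP port. Column 4 holds the local
# address, e.g. *:5044, 0.0.0.0:5044 or [::]:5044; the port is the text
# after the last ':'.
port_listening() {
  awk -v port="$1" '
    { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage:

ss -ltnH | port_listening 5044 && echo "Beats input is listening on 5044"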

Installing Kibana

The final component of the ELK stack is Kibana. Let’s install and configure it:

Install Kibana

Install Kibana using the following command:

sudo apt install kibana

Configure Kibana

Edit the Kibana configuration file:

sudo nano /etc/kibana/kibana.yml

Uncomment and modify the following lines:

server.port: 5601

server.host: "localhost"

elasticsearch.hosts: ["http://localhost:9200"]

Start and Enable Kibana

Start the Kibana service and enable it to run at boot:

sudo systemctl start kibana

sudo systemctl enable kibana

Configuring Nginx Reverse Proxy

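To reach Kibana from a web browser on port 80, we'll set up Nginx as a reverse proxy. Note that this configuration adds no authentication or TLS, so anyone who can reach the server on port 80 can use Kibana; restrict access accordingly: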

Create Nginx Server Block

Create a new Nginx server block configuration:

sudo nano /etc/nginx/sites-available/kibana

Add the following content, replacing your_domain.com with your actual domain name:

server {
    listen 80;
    server_name your_domain.com;

    location / {
        proxy_pass http://localhost:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }
}

Enable the Nginx Configuration

Create a symbolic link to enable the Nginx configuration:

sudo ln -s /etc/nginx/sites-available/kibana /etc/nginx/sites-enabled/

Test and Restart Nginx

Test the Nginx configuration for any syntax errors:

sudo nginx -t

If there are no errors, restart Nginx:

sudo systemctl restart nginx

System Integration

Now that all components are installed and configured, let’s ensure they’re properly integrated:

Verify Elasticsearch Connection

Check if Elasticsearch is running and accessible:

curl -X GET "localhost:9200"

You should see a JSON response with Elasticsearch version information.
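The root endpoint only shows that Elasticsearch is answering. The _cat/health API with h=status returns just the cluster status as plain text, which is convenient in scripts. On a single-node install, yellow is normal once an index has replica shards configured, because there is no second node to place the replicas on; red means some primary shards are unassigned. The sketch below, using the hypothetical helper name `cluster_status_ok`, treats green and yellow as acceptable.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the status string reported by
# _cat/health?h=status is green or yellow. Whitespace is stripped first
# because _cat output ends with a newline and may contain padding.
cluster_status_ok() {
  status=$(printf '%s' "$1" | tr -d '[:space:]')
  case $status in
    green|yellow) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage:

cluster_status_ok "$(curl -s 'localhost:9200/_cat/health?h=status')" && echo "cluster is usable"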

Test Logstash Pipeline

Create a simple test input for Logstash:

echo "test log entry" | sudo tee /var/log/test.log

Create a second configuration file that tells Logstash to read this file:

sudo nano /etc/logstash/conf.d/02-file-input.conf

Add the following content:

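input {
  file {
    path => "/var/log/test.log"
    start_position => "beginning"
    # Tag these events so the output below can pick them out.
    tags => ["test-log"]
  }
}

output {
  # Send only the events read from the test file to the test-log index.
  if "test-log" in [tags] {
    elasticsearch {
      hosts => ["localhost:9200"]
      index => "test-log-%{+YYYY.MM.dd}"
    }
  }
}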

Restart Logstash to apply the changes:

sudo systemctl restart logstash

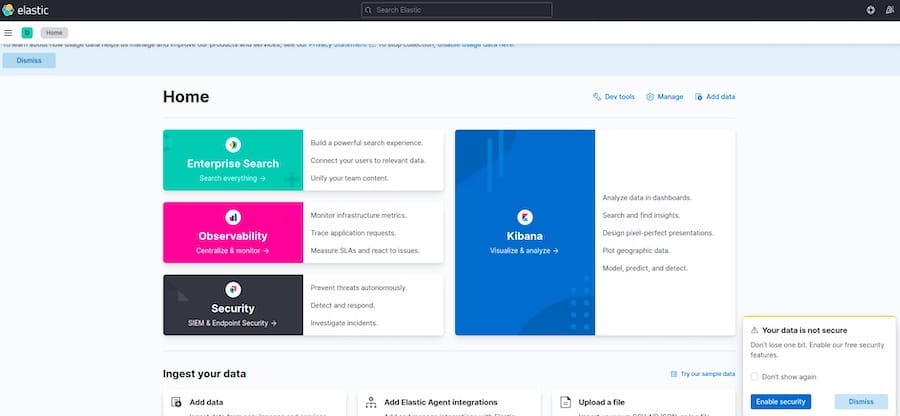
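To confirm that the test entry actually reached Elasticsearch before switching to Kibana, you can ask the _count API how many documents the test-log-* indices hold; it responds with JSON such as {"count":1,...}. The sketch below extracts that number with sed so it does not depend on jq. `doc_count` is a hypothetical helper name, and the pattern assumes the response has a single "count" field, as _count responses do.

```shell
#!/bin/sh
# Hypothetical helper: read an Elasticsearch _count response on stdin and
# print the value of its "count" field. Works for compact and pretty JSON.
doc_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}
```

Usage:

curl -s 'localhost:9200/test-log-*/_count' | doc_count

It should print 1 or more once Logstash has read /var/log/test.log. If it prints 0, give Logstash more time after the restart and check its log.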

Testing and Verification

Let’s verify that our ELK stack is functioning correctly:

Access Kibana Web Interface

Open a web browser and navigate to http://your_domain.com. You should see the Kibana home page. There is no login prompt, because Elasticsearch security is not enabled in this setup.

Create an Index Pattern

In Kibana, open the main menu and go to Management > Stack Management > Index Patterns. Create a new index pattern that matches the test index created earlier (test-log-*) and select @timestamp as the time field.

Visualize Data

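Go to the Discover tab in Kibana and select your newly created index pattern. You should see the test log entry created earlier. If it does not appear, widen the range in the time picker, since Discover shows only recent data by default.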

Troubleshooting Guide

If you encounter issues during the installation or configuration process, consider the following troubleshooting steps:

Check Service Status

Verify that all services are running:

sudo systemctl status elasticsearch

sudo systemctl status logstash

sudo systemctl status kibana

sudo systemctl status nginx
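systemctl status prints a lot of detail per service. For a one-line-per-service overview, systemctl is-active prints just the unit state (active, inactive, failed, and so on). The hypothetical `service_report` helper below loops over the units and returns a non-zero exit code if any of them is not active, so it can also be used in scripts.

```shell
#!/bin/sh
# Hypothetical helper: print "unit: state" for each systemd unit given and
# return 1 if any unit is not active, 0 otherwise.
service_report() {
  rc=0
  for svc in "$@"; do
    # is-active exits non-zero for inactive units; keep its output anyway.
    state=$(systemctl is-active "$svc" || true)
    printf '%s: %s\n' "$svc" "$state"
    if [ "$state" != "active" ]; then
      rc=1
    fi
  done
  return "$rc"
}
```

Usage:

service_report elasticsearch logstash kibana nginx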

Review Log Files

Check the log files for each component:

sudo tail -f /var/log/elasticsearch/elasticsearch.log

sudo tail -f /var/log/logstash/logstash-plain.log

sudo tail -f /var/log/kibana/kibana.log

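sudo tail -f /var/log/nginx/error.log

If /var/log/kibana/kibana.log does not exist, Kibana is probably writing its logs to the systemd journal instead; follow them with sudo journalctl -u kibana -f.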

Verify Firewall Settings

Ensure that the necessary ports are open:

sudo ufw status
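To check a specific rule without scanning the whole table, you can filter the ufw status output. The sketch below assumes the default table layout, where each rule line starts with the port/protocol followed by the action (for example 5044/tcp ALLOW Anywhere); `ufw_allows` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `ufw status` output on stdin and succeed if
# there is an ALLOW rule whose target is exactly the given port/protocol.
ufw_allows() {
  awk -v rule="$1" '
    $1 == rule && $2 == "ALLOW" { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage:

sudo ufw status | ufw_allows 5044/tcp && echo "5044/tcp is allowed"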

Congratulations! You have successfully installed the ELK Stack on Linux Mint 22. Thanks for using this tutorial. For more details on configuring and securing each component, see the official Elastic documentation.