How To Install ELK Stack on Manjaro

Centralized logging has become an essential component of modern system administration and application development. The ELK Stack—comprising Elasticsearch, Logstash, and Kibana—provides a powerful, open-source solution for log collection, processing, storage, and visualization. Manjaro Linux, with its rolling release model and user-friendly approach, offers an excellent platform for deploying this robust logging infrastructure. This comprehensive guide walks you through the entire process of installing and configuring the ELK Stack on Manjaro, ensuring you can effectively monitor your systems and applications with minimal effort.

Introduction to ELK Stack

The ELK Stack has emerged as the leading open-source solution for centralized logging and data analysis. This powerful combination of Elasticsearch, Logstash, and Kibana provides system administrators and developers with a comprehensive toolkit for collecting, processing, storing, and visualizing log data from diverse sources. Originally developed by Elastic (formerly Elasticsearch BV), the ELK Stack has evolved significantly since its inception, with each component continually receiving improvements and new features.

Elasticsearch serves as the foundation of the stack—a distributed, RESTful search and analytics engine built on Apache Lucene. It excels at indexing and searching through large volumes of textual data, making it ideal for log storage and retrieval. Logstash functions as the data processing pipeline, ingesting data from multiple sources simultaneously, transforming it according to specified rules, and sending it to designated outputs, primarily Elasticsearch. Kibana completes the stack by providing a flexible visualization layer that allows users to explore, analyze, and present their data through various charts, graphs, and dashboards.

The popularity of the ELK Stack stems from its flexibility, scalability, and comprehensive feature set. It can handle logs from virtually any source—whether system logs, application logs, or network data—and scales from single-server setups to massive distributed deployments. Manjaro Linux, with its rolling release model, up-to-date packages, and Arch Linux foundations, provides an excellent platform for deploying the ELK Stack. The combination of Manjaro’s stability and the ELK Stack’s capabilities creates a powerful environment for system monitoring, troubleshooting, and performance analysis.

Understanding ELK Stack Components

Before diving into the installation process, it’s crucial to understand each component of the ELK Stack in detail. This knowledge helps in configuring and optimizing your logging infrastructure according to your specific requirements.

Elasticsearch: The Core Search Engine

Elasticsearch functions as a distributed, document-oriented NoSQL database and full-text search engine. Built on Apache Lucene, it stores data in JSON format and provides near real-time search capabilities. Elasticsearch organizes data into indices—collections of documents with similar characteristics—which can be distributed across multiple nodes in a cluster for redundancy and performance. Its RESTful API allows for simple integration with various applications and services, enabling operations like indexing, searching, updating, and deleting documents via standard HTTP requests.

What sets Elasticsearch apart is its ability to handle massive datasets while maintaining impressive query performance. It achieves this through horizontal scaling, distributing data across multiple nodes and leveraging parallel processing. The distributed nature of Elasticsearch also ensures high availability and fault tolerance, as data can be replicated across multiple nodes. These features make Elasticsearch particularly well-suited for log analysis, where large volumes of data need to be ingested, stored, and queried efficiently.

Logstash: The Data Processing Pipeline

Logstash serves as the data collection and processing component of the ELK Stack. It operates on a pipeline architecture consisting of inputs, filters, and outputs. Inputs collect data from various sources, including log files, syslog, metrics, and HTTP endpoints. Filters transform and enrich the data, performing operations like parsing, timestamp conversion, field addition or removal, and data normalization. Outputs send the processed data to various destinations, with Elasticsearch being the most common but not the only option.

The flexibility of Logstash comes from its extensive plugin ecosystem, which includes over 200 plugins for various inputs, filters, and outputs. This allows for customized data processing pipelines tailored to specific use cases. For example, the Grok filter plugin enables pattern matching and structured parsing of unstructured log data, while the GeoIP filter can enrich IP addresses with geographical information. Despite its resource requirements being higher than lightweight alternatives like Filebeat, Logstash’s powerful data transformation capabilities make it invaluable for complex log processing scenarios.

Kibana: The Visualization Platform

Kibana provides the visualization and user interface layer of the ELK Stack. It connects to Elasticsearch and allows users to search, view, and interact with data stored in Elasticsearch indices. Through an intuitive web interface, Kibana enables the creation of various visualizations, including bar charts, line graphs, pie charts, heat maps, and geographical maps. These visualizations can be combined into dashboards that provide comprehensive views of specific metrics or aspects of your system.

Beyond basic visualizations, Kibana offers advanced features like Canvas for creating presentation-grade visualizations, Lens for drag-and-drop visualization building, and Dashboard Drilldowns for interactive exploration. It also includes tools for machine learning anomaly detection, alerting, and reporting. The Discover interface allows for direct querying and exploration of raw data, while Dev Tools provides a console for interacting directly with the Elasticsearch API. With its continual development and feature additions, Kibana has evolved from a simple visualization tool to a comprehensive analytics platform.

Beats: Lightweight Data Shippers

While not part of the original ELK acronym, Beats have become an integral part of the Elastic Stack (as the ELK Stack is now officially known). Beats are lightweight, single-purpose data shippers that collect specific types of data and forward them to Elasticsearch directly or via Logstash. The most commonly used Beat is Filebeat, which specializes in harvesting log files and forwarding them for processing. Other Beats include Metricbeat for metrics collection, Packetbeat for network packet analysis, Heartbeat for uptime monitoring, and Auditbeat for audit data collection.

The lightweight nature of Beats makes them ideal for deployment on edge nodes or client machines where resource usage needs to be minimized. By performing minimal processing at the collection point and offloading heavy processing to Logstash or Elasticsearch ingest nodes, Beats enable efficient distributed data collection. In a typical ELK deployment, Beats agents installed on various servers collect and forward logs to a central Logstash instance for processing before storage in Elasticsearch.

System Requirements for ELK on Manjaro

The ELK Stack can be resource-intensive, particularly when handling large volumes of data. Understanding the system requirements helps ensure smooth operation and optimal performance. These requirements vary based on the scale of your deployment and the volume of data you plan to process.

Hardware Requirements

For a development environment or small-scale deployment on Manjaro, a minimum of 4GB RAM is necessary, though 8GB or more is recommended for better performance. Elasticsearch, in particular, benefits from additional memory, as it uses RAM for caching and query execution. CPU requirements depend on the complexity of your data processing and query workloads—a modern multi-core processor (4+ cores) provides better performance for concurrent operations. Storage requirements grow with your data retention needs; starting with at least 50GB of free disk space is advisable, with consideration for expansion as your log volume increases.

For production environments or larger deployments, hardware requirements increase significantly. Consider 16GB+ RAM, 8+ CPU cores, and hundreds of gigabytes of storage, potentially distributed across multiple nodes. SSD storage is highly recommended for Elasticsearch data directories, as it significantly improves indexing and search performance compared to traditional HDDs.

Network Requirements

The ELK Stack components communicate over specific network ports that need to be accessible for proper functionality. Elasticsearch uses port 9200 for HTTP REST API access and port 9300 for node-to-node communication. Kibana requires port 5601 for its web interface, while Logstash typically uses port 5044 for Beats input, port 9600 for its monitoring API, and potentially other ports depending on configured inputs and outputs. If deploying across multiple machines, ensure these ports are open in your firewall configuration and network security groups.

Bandwidth considerations become important in distributed deployments or when collecting logs from multiple remote sources. Sufficient network bandwidth prevents bottlenecks in log transmission and ensures timely data processing and analysis. For high-volume environments, consider network interface cards with 1Gbps+ capacity and low-latency connections between ELK Stack components.

Software Prerequisites

Manjaro provides an excellent foundation for the ELK Stack with its up-to-date packages and rolling release model. The primary software prerequisite is Java, as Elasticsearch and Logstash are Java-based applications. OpenJDK 11 or newer is recommended, though some older versions of the ELK Stack may require Java 8. Since version 7.0 the official Elasticsearch archives bundle their own JDK (Logstash followed in later 7.x releases), so a system-wide Java mainly matters for packages that depend on it. Additional prerequisites include standard system utilities like curl, wget, and tar for downloading and extracting packages, and systemd for service management.

Compatibility between ELK Stack components is crucial—all components should be from the same version to ensure proper functionality. Manjaro’s package management system simplifies keeping these dependencies updated, though careful attention to version compatibility during updates prevents potential issues.

Preparing Your Manjaro System

Proper system preparation lays the groundwork for a successful ELK Stack installation. This preparation ensures all prerequisites are met and the system is optimized for running the ELK components.

Updating the System Packages

Begin by ensuring your Manjaro system is fully updated. Open a terminal and execute the following commands:

sudo pacman -Syyu

This command forces a refresh of the package databases and upgrades all installed packages. Starting from a fully updated system avoids compatibility issues and ensures access to the latest package versions.

Installing Java Requirements

Elasticsearch and Logstash require Java to run. Install OpenJDK on your Manjaro system with the following command:

sudo pacman -S jdk-openjdk

After installation, verify the Java version by running:

java -version

The output should confirm the installed Java version. For the ELK Stack 7.x or newer, Java 11 or higher is recommended. Set up the JAVA_HOME environment variable by adding the following lines to your ~/.bashrc file:

export JAVA_HOME=/usr/lib/jvm/default
export PATH=$PATH:$JAVA_HOME/bin

Apply these changes immediately by running:

source ~/.bashrc

Setting Up User Permissions

For better security and service management, create dedicated users for the ELK components. Execute the following commands:

sudo groupadd elasticsearch
sudo useradd -g elasticsearch elasticsearch
sudo groupadd logstash
sudo useradd -g logstash logstash
sudo groupadd kibana
sudo useradd -g kibana kibana

Create the necessary directories and set appropriate permissions:

sudo mkdir -p /var/lib/elasticsearch
sudo mkdir -p /var/log/elasticsearch
sudo chown -R elasticsearch:elasticsearch /var/lib/elasticsearch
sudo chown -R elasticsearch:elasticsearch /var/log/elasticsearch
sudo mkdir -p /var/lib/logstash
sudo mkdir -p /var/log/logstash
sudo chown -R logstash:logstash /var/lib/logstash
sudo chown -R logstash:logstash /var/log/logstash
sudo mkdir -p /var/lib/kibana
sudo mkdir -p /var/log/kibana
sudo chown -R kibana:kibana /var/lib/kibana
sudo chown -R kibana:kibana /var/log/kibana

Configuring System Limits

Elasticsearch requires specific system limits for optimal performance. Create a file named /etc/security/limits.d/elasticsearch.conf with the following content:

elasticsearch soft nofile 65535
elasticsearch hard nofile 65535
elasticsearch soft nproc 4096
elasticsearch hard nproc 4096

Additionally, raise the virtual memory map limit. On Manjaro, as on other Arch-based systems, systemd does not read /etc/sysctl.conf at boot, so place the setting in a drop-in file such as /etc/sysctl.d/99-elasticsearch.conf:

vm.max_map_count=262144

Apply the change immediately with:

sudo sysctl --system

These adjustments prevent common issues like “too many open files” errors and ensure Elasticsearch can allocate sufficient memory maps for its indices.
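To confirm that the kernel setting is actually in effect, a small check like the following can help. It is only a sketch: the function name map_count_ok is made up for illustration, and the script assumes a Linux system that exposes the live value under /proc.

```shell
#!/usr/bin/env bash
# Sketch: verify that vm.max_map_count meets the minimum Elasticsearch expects.
# map_count_ok is a hypothetical helper name, not part of any Elastic tooling.

# map_count_ok VALUE -> succeeds when VALUE is at least 262144
map_count_ok() {
  local required=262144
  [ "$1" -ge "$required" ]
}

# /proc/sys/vm/max_map_count exposes the live kernel value on Linux.
if [ -r /proc/sys/vm/max_map_count ]; then
  current=$(cat /proc/sys/vm/max_map_count)
  if map_count_ok "$current"; then
    echo "vm.max_map_count=$current: OK"
  else
    echo "vm.max_map_count=$current: below 262144"
  fi
fi
```

Running the check again after a reboot shows whether the setting persisted or was only applied to the running kernel.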

Installing and Configuring Elasticsearch

Elasticsearch forms the foundation of the ELK Stack, providing powerful search and analytics capabilities. Installing and properly configuring Elasticsearch is crucial for the overall performance of your logging infrastructure.

Installation Methods

On Manjaro, you can install Elasticsearch using the Arch User Repository (AUR). First, ensure you have an AUR helper like yay installed:

sudo pacman -S yay

Then, install Elasticsearch using yay:

yay -S elasticsearch

Alternatively, you can download and install Elasticsearch manually from the official Elastic website:

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.16.2-linux-x86_64.tar.gz
tar -xzf elasticsearch-7.16.2-linux-x86_64.tar.gz
sudo mv elasticsearch-7.16.2 /usr/share/elasticsearch
sudo ln -s /usr/share/elasticsearch/bin/elasticsearch /usr/bin/elasticsearch

If you choose the manual installation method, create the necessary service files for systemd management.
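For the manual route, a minimal unit file might look like the sketch below. It is not the unit shipped by any package: the helper name write_es_unit is hypothetical, ES_PATH_CONF=/etc/elasticsearch assumes you have copied the tarball's config directory there, and the elasticsearch user must be able to read /usr/share/elasticsearch. LimitNOFILE is set in the unit because limits under /etc/security/limits.d apply to login sessions, not to services started by systemd.

```shell
#!/usr/bin/env bash
# Sketch: generate a minimal systemd unit for a tarball install of Elasticsearch.
# write_es_unit is a hypothetical helper; the paths follow the manual install
# above, and ES_PATH_CONF=/etc/elasticsearch is an assumption.

# write_es_unit DEST -> writes the unit file contents to DEST
write_es_unit() {
  local dest=$1
  cat > "$dest" <<'EOF'
[Unit]
Description=Elasticsearch (manual install)
After=network-online.target
Wants=network-online.target

[Service]
User=elasticsearch
Group=elasticsearch
Environment=ES_PATH_CONF=/etc/elasticsearch
ExecStart=/usr/share/elasticsearch/bin/elasticsearch
LimitNOFILE=65535
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Write to a scratch file first so the unit can be reviewed before installing.
unit_file=$(mktemp)
write_es_unit "$unit_file"
cat "$unit_file"
```

After reviewing the output, copy the file to /etc/systemd/system/elasticsearch.service and run sudo systemctl daemon-reload so systemd picks it up.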

Configuration Essentials

The main Elasticsearch configuration file is located at /etc/elasticsearch/elasticsearch.yml. Edit this file to customize your Elasticsearch installation:

sudo nano /etc/elasticsearch/elasticsearch.yml

For a basic single-node setup, configure the following settings:

cluster.name: elk-cluster
node.name: node-1
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: 0.0.0.0
http.port: 9200
discovery.type: single-node

Setting network.host to 0.0.0.0 allows Elasticsearch to listen on all network interfaces, making it accessible from other machines on your network. The discovery.type setting configures Elasticsearch for single-node operation, bypassing the cluster formation process which is unnecessary for standalone deployments.

Memory Optimization

Elasticsearch’s performance heavily depends on available memory. Configure JVM heap size by editing /etc/elasticsearch/jvm.options:

sudo nano /etc/elasticsearch/jvm.options

Locate the following lines and adjust them according to your system’s available memory:

-Xms1g
-Xmx1g

A good rule of thumb is to allocate 50% of available system memory to the Elasticsearch heap and leave the rest to the operating system's filesystem cache, which Lucene relies on. Keep the heap below roughly 32GB: above that threshold the JVM can no longer use compressed object pointers, so a larger heap can end up holding less data than a slightly smaller one. For a system with 8GB RAM, suitable settings would be:

-Xms4g
-Xmx4g

Ensure both values are identical to prevent heap resizing during operation, which can impact performance.
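The rule of thumb above can be turned into a small calculation. The sketch below is illustrative only: suggest_heap_mb is a made-up helper, and the 31744 MB (31 GB) cap is an assumption chosen to stay safely under the 32GB threshold.

```shell
#!/usr/bin/env bash
# Sketch: suggest JVM heap flags from total RAM (half of RAM, capped).
# suggest_heap_mb is a hypothetical helper; the 31 GB cap is an assumption.

# suggest_heap_mb TOTAL_MB -> prints the suggested heap size in MB
suggest_heap_mb() {
  local total_mb=$1
  local cap_mb=31744
  local half_mb=$(( total_mb / 2 ))
  if (( half_mb > cap_mb )); then
    echo "$cap_mb"
  else
    echo "$half_mb"
  fi
}

# Read total memory (in kB) from /proc/meminfo when it is available.
if [ -r /proc/meminfo ]; then
  total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  heap_mb=$(suggest_heap_mb $(( ${total_kb:-0} / 1024 )))
  echo "-Xms${heap_mb}m"
  echo "-Xmx${heap_mb}m"
fi
```

On a machine that also runs Logstash and Kibana, treat the result as an upper bound, since those services need memory of their own.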

Starting and Enabling Elasticsearch Service

Start the Elasticsearch service and enable it to run at system boot:

sudo systemctl start elasticsearch.service

sudo systemctl enable elasticsearch.service

Verify that Elasticsearch is running correctly:

sudo systemctl status elasticsearch.service

You should see output indicating that the service is active and running.

Testing the Installation

To verify that Elasticsearch is functioning properly, use curl to query the REST API:

curl -X GET "localhost:9200/"

You should receive a JSON response containing information about your Elasticsearch instance, including the cluster name, node name, and version number. Additionally, check the cluster health:

curl -X GET "localhost:9200/_cluster/health?pretty"

The response should indicate a status of “green” or “yellow”. Yellow is normal for single-node clusters, because replica shards cannot be allocated on the same node as their primaries.
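When the health check is part of a script rather than a manual step, the status field can be pulled out of the response. The following is a minimal sketch using only sed; extract_status and status_ok are hypothetical helper names, and the sample response is abridged and made up for the demonstration.

```shell
#!/usr/bin/env bash
# Sketch: extract and evaluate the "status" field of a cluster-health response.
# extract_status and status_ok are hypothetical helpers for illustration.

# extract_status: reads cluster-health JSON on stdin, prints the status value
extract_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# status_ok STATUS -> succeeds for green or yellow, fails otherwise
status_ok() {
  case "$1" in
    green|yellow) return 0 ;;
    *) return 1 ;;
  esac
}

# Demonstration on a canned, abridged response (hypothetical values).
sample='{"cluster_name":"elk-cluster","status":"yellow","number_of_nodes":1}'
status=$(printf '%s\n' "$sample" | extract_status)
if status_ok "$status"; then
  echo "cluster status is $status: OK"
else
  echo "cluster status is '$status': not ready"
fi
```

Against a live node, the same helpers can be fed from curl, for example: curl -s "localhost:9200/_cluster/health" | extract_status. A JSON-aware tool such as jq is more robust if it is installed.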

Installing and Configuring Logstash

Logstash processes and transforms data before sending it to Elasticsearch. Its flexible pipeline architecture allows for complex data processing workflows tailored to specific requirements.

Installation Process

Install Logstash using the AUR helper:

yay -S logstash

Alternatively, download and install Logstash manually:

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.16.2-linux-x86_64.tar.gz
tar -xzf logstash-7.16.2-linux-x86_64.tar.gz
sudo mv logstash-7.16.2 /usr/share/logstash
sudo ln -s /usr/share/logstash/bin/logstash /usr/bin/logstash

For manual installations, create appropriate systemd service files for service management. The Logstash directory structure consists of the bin directory containing executable files, config for configuration files, data for persistent data storage, and logs for log files.

Configuring Logstash

Logstash configurations are stored in /etc/logstash/conf.d/. Create a basic pipeline configuration file:

sudo mkdir -p /etc/logstash/conf.d
sudo nano /etc/logstash/conf.d/basic.conf

Add the following configuration to create a simple pipeline that collects system logs and forwards them to Elasticsearch:

input {
  file {
    path => "/var/log/syslog"
    type => "syslog"
    start_position => "beginning"
  }
  beats {
    port => 5044
    host => "0.0.0.0"
  }
}

filter {
  if [type] == "syslog" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
    }
    date {
      match => [ "syslog_timestamp", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    index => "logstash-%{+YYYY.MM.dd}"
  }
  stdout { codec => rubydebug }
}

This configuration collects logs from /var/log/syslog and also listens for Beats data on port 5044. A default Manjaro install logs to the systemd journal, so /var/log/syslog exists only if a syslog daemon such as syslog-ng or rsyslog is installed. The filter section uses the Grok filter to parse syslog messages into structured fields, and the date filter to convert timestamps to a standard format. The output section sends processed data to Elasticsearch and also outputs to the console for debugging purposes.

Pipeline Optimization

For better performance, adjust Logstash’s pipeline settings in /etc/logstash/logstash.yml:

sudo nano /etc/logstash/logstash.yml

Configure the following settings:

pipeline.workers: 2
pipeline.batch.size: 125
pipeline.batch.delay: 50

The workers setting determines the number of threads Logstash uses for filter and output processing, typically set to the number of CPU cores. The batch size controls how many events Logstash processes together, while batch delay sets the wait time in milliseconds before processing a partial batch. These settings balance throughput and resource usage based on your specific environment.
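Since the worker count usually follows the number of CPU cores, the settings can be generated rather than typed by hand. This is a sketch only: pipeline_settings is a hypothetical helper, and the batch values are simply the ones shown above.

```shell
#!/usr/bin/env bash
# Sketch: print logstash.yml pipeline settings with workers matched to CPU cores.
# pipeline_settings is a hypothetical helper; batch values mirror the text above.

# pipeline_settings CORES -> prints the three pipeline lines
pipeline_settings() {
  local cores=$1
  printf 'pipeline.workers: %d\n' "$cores"
  printf 'pipeline.batch.size: %d\n' 125
  printf 'pipeline.batch.delay: %d\n' 50
}

# nproc (GNU coreutils) reports the available processing units; fall back to
# getconf, then to 1, on systems where nproc is missing.
cores=$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN 2>/dev/null || echo 1)
pipeline_settings "$cores"
```

The output is meant to be reviewed and pasted into /etc/logstash/logstash.yml; on a host shared with Elasticsearch, a lower worker count may be the better choice.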

Starting and Enabling Logstash Service

Start and enable the Logstash service:

sudo systemctl start logstash.service

sudo systemctl enable logstash.service

Verify the service status:

sudo systemctl status logstash.service

The output should indicate that Logstash is active and running.

Testing Logstash Configuration

To test your Logstash configuration before starting the service, use the --config.test_and_exit flag:

sudo -u logstash /usr/bin/logstash --path.settings /etc/logstash -f /etc/logstash/conf.d/basic.conf --config.test_and_exit

For more detailed testing and debugging, raise the log level with --log.level debug (the older --debug flag is deprecated):

sudo -u logstash /usr/bin/logstash --path.settings /etc/logstash -f /etc/logstash/conf.d/basic.conf --log.level debug

Check the Logstash logs for any errors or warnings:

sudo tail -f /var/log/logstash/logstash-plain.log

Installing and Configuring Kibana

Kibana provides a user-friendly interface for visualizing and exploring data stored in Elasticsearch. Its installation and configuration complete the ELK Stack setup.

Installation Steps

Install Kibana using the AUR helper:

yay -S kibana

Alternatively, download and install Kibana manually:

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.16.2-linux-x86_64.tar.gz
tar -xzf kibana-7.16.2-linux-x86_64.tar.gz
sudo mv kibana-7.16.2-linux-x86_64 /usr/share/kibana
sudo ln -s /usr/share/kibana/bin/kibana /usr/bin/kibana

If installing manually, create appropriate systemd service files for Kibana. The Kibana directory structure includes config for configuration files, data for application data, and logs for log files.

Basic Configuration

Configure Kibana by editing its configuration file:

sudo nano /etc/kibana/kibana.yml

Set the following basic configuration options:

server.port: 5601
server.host: "0.0.0.0"
server.name: "kibana"
elasticsearch.hosts: ["http://localhost:9200"]
logging.dest: /var/log/kibana/kibana.log

Setting server.host to “0.0.0.0” allows Kibana to listen on all network interfaces, making it accessible from other machines on your network. The elasticsearch.hosts setting specifies the Elasticsearch instance Kibana should connect to.

Starting and Enabling Kibana Service

Start and enable the Kibana service:

sudo systemctl start kibana.service

sudo systemctl enable kibana.service

Verify the service status:

sudo systemctl status kibana.service

The output should indicate that Kibana is active and running.

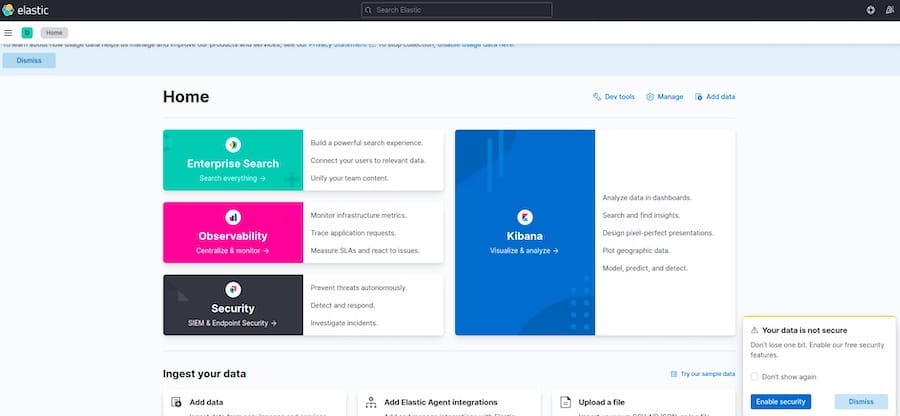

Accessing the Kibana Web Interface

Once Kibana is running, access its web interface by opening a web browser and navigating to:

http://localhost:5601

If accessing from another machine, replace localhost with the IP address of your Manjaro system. The Kibana welcome page should load, guiding you through the initial setup process. Follow the prompts to create an index pattern, which tells Kibana which Elasticsearch indices to use for visualization. If you’ve configured Logstash as described earlier, your index pattern would be logstash-*.

After creating an index pattern, explore Kibana’s features through the main navigation menu. The Discover tab allows you to search and browse your data, while Visualize enables creation of various charts and graphs. Dashboard combines multiple visualizations into comprehensive monitoring displays.

Troubleshooting Kibana Service Issues

If Kibana fails to start or doesn’t load in the browser, check the service status and logs:

sudo systemctl status kibana.service

sudo tail -f /var/log/kibana/kibana.log

Common issues include incorrect Elasticsearch connection settings, port conflicts, or insufficient permissions. Ensure that Elasticsearch is running and accessible on the configured port, and that the Kibana user has appropriate permissions to its directories and files. If the service fails to start through systemd, try running Kibana directly to see detailed error messages:

sudo -u kibana /usr/bin/kibana --config /etc/kibana/kibana.yml

Setting Up a Secure ELK Stack

Security is crucial for any production ELK deployment. This section covers basic security measures to protect your ELK Stack from unauthorized access.

Basic Security Considerations

By default, the ELK Stack components have minimal security, potentially exposing sensitive log data to unauthorized users. At a minimum, implement network-level security by configuring your firewall to restrict access to ELK ports. For Manjaro, use UFW (Uncomplicated Firewall) or firewalld to allow access only from trusted IP addresses:

sudo pacman -S ufw

sudo ufw allow from 192.168.1.0/24 to any port 5601

sudo ufw allow from 192.168.1.0/24 to any port 9200

sudo ufw allow from 192.168.1.0/24 to any port 5044

sudo ufw enable

This configuration allows access to Kibana, Elasticsearch, and Logstash only from devices on the 192.168.1.0/24 subnet. Adjust the subnet according to your network configuration.

Implementing Authentication

For basic authentication, configure Elasticsearch’s built-in security features, which have been available at no cost under the Basic license since versions 6.8 and 7.1. First, enable security in elasticsearch.yml:

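sudo nano /etc/elasticsearch/elasticsearch.yml

Add the following settings:

xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true

Transport TLS also requires a certificate and key to be configured under xpack.security.transport.ssl.*. On a single-node setup with discovery.type: single-node, transport TLS is not enforced, so the second line can be left out. Restart Elasticsearch and set up passwords for built-in users:

sudo systemctl restart elasticsearch.service
sudo /usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive

Follow the prompts to set passwords for the elastic, kibana, logstash_system, and other built-in users. Then, update the Kibana configuration to use the kibana user (newer 7.x releases deprecate this user in favor of kibana_system, which is used the same way):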

sudo nano /etc/kibana/kibana.yml

Add the following settings:

elasticsearch.username: "kibana"
elasticsearch.password: "your_kibana_password"

Similarly, update the Logstash configuration to use the elastic user when outputting to Elasticsearch:

sudo nano /etc/logstash/conf.d/basic.conf

Modify the elasticsearch output section:

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    index => "logstash-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "your_elastic_password"
  }
  stdout { codec => rubydebug }
}

Installing and Configuring Nginx as a Reverse Proxy

A reverse proxy adds an additional security layer and enables SSL encryption for Kibana access. Install Nginx on Manjaro:

sudo pacman -S nginx

Create a configuration file for Kibana. The nginx package on Arch-based systems does not ship the sites-available and sites-enabled directories, so create them first:

sudo mkdir -p /etc/nginx/sites-available /etc/nginx/sites-enabled
sudo nano /etc/nginx/sites-available/kibana

Add the following configuration:

server {
    listen 80;
    server_name kibana.local; # Replace with your domain or IP

    location / {
        proxy_pass http://localhost:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

Create a symbolic link to enable the site:

sudo ln -s /etc/nginx/sites-available/kibana /etc/nginx/sites-enabled/kibana

Because the default /etc/nginx/nginx.conf does not load this directory, add the following line inside its http block:

include /etc/nginx/sites-enabled/*;

Test the Nginx configuration and restart the service:

sudo nginx -t
sudo systemctl restart nginx
sudo systemctl enable nginx

Implementing SSL/TLS Encryption

For secure HTTPS access to Kibana, implement SSL/TLS encryption. First, install Certbot for Let’s Encrypt certificates (for public servers) or generate self-signed certificates (for internal use):

sudo pacman -S certbot certbot-nginx

For a public server with a domain name, obtain Let’s Encrypt certificates:

sudo certbot --nginx -d kibana.yourdomain.com

For internal use with self-signed certificates:

sudo mkdir -p /etc/nginx/ssl
sudo openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/nginx/ssl/kibana.key -out /etc/nginx/ssl/kibana.crt

Update the Nginx configuration to use SSL:

sudo nano /etc/nginx/sites-available/kibana

Modify the configuration for SSL:

server {
    listen 80;
    server_name kibana.local;
    return 301 https://$server_name$request_uri;
}

server {
    listen 443 ssl;
    server_name kibana.local;

    ssl_certificate /etc/nginx/ssl/kibana.crt;
    ssl_certificate_key /etc/nginx/ssl/kibana.key;
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_prefer_server_ciphers on;
    ssl_ciphers ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-GCM-SHA384;

    location / {
        proxy_pass http://localhost:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

Restart Nginx to apply the changes:

sudo systemctl restart nginx

Creating a Complete Logging Pipeline

With the ELK Stack installed and secured, you can now create a comprehensive logging pipeline to collect, process, and visualize logs from various sources.

Setting Up Filebeat on Client Machines

To collect logs from multiple machines, install Filebeat on each client. For Manjaro or other Arch-based systems:

yay -S filebeat

Configure Filebeat to collect system logs and forward them to your Logstash instance:

sudo nano /etc/filebeat/filebeat.yml

Update the configuration. Filebeat supports only one active output, so comment out the default output.elasticsearch section if it is present:

filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /var/log/*.log
      - /var/log/syslog

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.kibana:
  host: "localhost:5601"

output.logstash:
  hosts: ["elk-server:5044"]
  ssl.enabled: false
  #ssl.certificate_authorities: ["/etc/filebeat/ca.crt"]
  #ssl.certificate: "/etc/filebeat/client.crt"
  #ssl.key: "/etc/filebeat/client.key"

Replace “elk-server” with the IP address or hostname of your ELK server. Start and enable the Filebeat service:

sudo systemctl start filebeat.service
sudo systemctl enable filebeat.service

Configuring Log Sources

Filebeat modules simplify the collection and parsing of common log formats. Enable modules for your specific applications:

sudo filebeat modules enable system nginx mysql

Configure each module according to your environment:

sudo nano /etc/filebeat/modules.d/system.yml

Adjust paths and settings based on your system configuration. For custom application logs, create additional Filebeat inputs in filebeat.yml:

filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /var/log/*.log
      - /var/log/syslog
  - type: log
    enabled: true
    paths:
      - /path/to/your/application/logs/*.log
    fields:
      app_name: "your_application"
    fields_under_root: true
    multiline:
      pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
      negate: true
      match: after

The multiline configuration helps Filebeat correctly handle multi-line log entries like stack traces, combining them into single events.
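To see what the multiline settings do, the grouping rule can be imitated with awk: a line that starts with a date opens a new event, and any other line is attached to the event before it. This is only an illustration of the rule, not how Filebeat is implemented; join_multiline is a hypothetical helper, the sample log lines are made up, and the " | " separator is for display (Filebeat keeps the original newlines).

```shell
#!/usr/bin/env bash
# Sketch: imitate multiline grouping (pattern = leading date, negate: true,
# match: after) with awk. join_multiline is a hypothetical helper.

# join_multiline: reads log lines on stdin, prints one line per grouped event
join_multiline() {
  awk '
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]/ {
      # A dated line starts a new event; flush the previous one first.
      if (have) print event
      event = $0
      have = 1
      next
    }
    {
      # Any other line continues the current event.
      if (have) event = event " | " $0
      else { event = $0; have = 1 }
    }
    END { if (have) print event }
  '
}

# Hypothetical sample: a two-line stack trace followed by a normal entry.
printf '%s\n' \
  '2024-01-15 10:00:00 ERROR Unhandled exception' \
  '    at com.example.Service.run(Service.java:42)' \
  '    at com.example.Main.main(Main.java:7)' \
  '2024-01-15 10:00:01 INFO Recovered' \
  | join_multiline
```

The four input lines come out as two events, which is the same grouping the Filebeat settings above are meant to produce before the data reaches Logstash.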

Building Logstash Filters for Specific Log Formats

Create more sophisticated Logstash filters to parse and transform your log data. For example, to process Apache access logs:

sudo nano /etc/logstash/conf.d/apache.conf

Add the following configuration:

filter {
  if [app_name] == "apache" {
    grok {
      match => { "message" => "%{COMBINEDAPACHELOG}" }
    }
    date {
      match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
    }
    geoip {
      source => "clientip"
    }
    useragent {
      source => "agent"
      target => "user_agent"
    }
  }
}

The condition matches events whose Filebeat input sets app_name to "apache". Because the input shown earlier uses fields_under_root: true, the field sits at the top level of the event rather than under [fields]. The filter uses the Grok pattern for Apache logs, normalizes timestamps, adds geographical information for IP addresses, and parses user agent strings into structured data. Similar filters can be created for other log formats, building a comprehensive processing pipeline tailored to your specific logging needs.

Creating Kibana Visualizations

Once logs are flowing into Elasticsearch, use Kibana to create visualizations and dashboards. From the Kibana interface, navigate to Visualize -> Create visualization and select the appropriate visualization type for your data. Common visualization types include:

Area charts for time-based data showing trends over time, such as log volumes or error rates. Bar charts for comparing categorical data, like response codes or log levels. Pie charts for showing proportions, such as the distribution of log sources or error types. Heat maps for displaying patterns in dense data sets, particularly useful for identifying anomalies in large log volumes. Geographic maps for visualizing location-based data, ideal if your logs contain IP addresses that have been enriched with geographical information.

After creating individual visualizations, combine them into dashboards by navigating to Dashboard -> Create dashboard and adding your saved visualizations. Arrange them in a logical order and set appropriate time ranges and filters to create comprehensive monitoring displays.

Advanced ELK Stack Configurations

As your logging needs grow, consider advanced configurations to enhance performance, scalability, and functionality.

Cluster Setup and Scaling

For larger deployments, configure Elasticsearch as a multi-node cluster for improved performance and fault tolerance. Update the elasticsearch.yml configuration on each node:

cluster.name: elk-cluster
node.name: node-1        # Unique for each node
network.host: 0.0.0.0
http.port: 9200
discovery.seed_hosts: ["node1-ip", "node2-ip", "node3-ip"]
cluster.initial_master_nodes: ["node-1", "node-2", "node-3"]
node.master: true        # Set to false for data-only nodes
node.data: true          # Set to false for master-only nodes
# On Elasticsearch 7.9 and later, node.roles: [ master, data ] replaces
# node.master and node.data (the older settings were removed in 8.0).

Distribute different roles across nodes for optimal performance: master nodes for cluster management, data nodes for storing and searching data, ingest nodes for pre-processing, and coordinating nodes for handling client requests. This division of labor improves resource utilization and enables horizontal scaling.
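After restarting the nodes, it is worth confirming that they actually formed one cluster. The following is a minimal sketch that queries the _cluster/health and _cat/nodes APIs with curl. It assumes a node is reachable over plain HTTP at localhost:9200 with no authentication; the helper names health_status, cluster_status, and list_nodes are hypothetical.

```shell
# Base URL of any node in the cluster; localhost:9200 is an assumption.
ES_URL="${ES_URL:-http://localhost:9200}"

# Extract the "status" value (green, yellow, or red) from
# _cluster/health JSON read on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Query the cluster and print its status; prints nothing if unreachable.
cluster_status() {
  curl -s --max-time 5 "$ES_URL/_cluster/health" | health_status
}

# List nodes with their roles and which one is the elected master.
list_nodes() {
  curl -s --max-time 5 "$ES_URL/_cat/nodes?v&h=name,ip,node.role,master"
}
```

Running cluster_status followed by list_nodes should show every node you configured. A missing node usually points at discovery.seed_hosts or a firewall rule rather than at Elasticsearch itself.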

Index Lifecycle Management

Implement index lifecycle management (ILM) to automatically manage indices as they age. Configure ILM policies in Elasticsearch:

PUT _ilm/policy/logs_policy
{
  "policy": {
    "phases": {
      "hot": {
        "actions": {
          "rollover": { "max_age": "7d", "max_size": "50gb" }
        }
      },
      "warm": {
        "min_age": "30d",
        "actions": {
          "shrink": { "number_of_shards": 1 },
          "forcemerge": { "max_num_segments": 1 }
        }
      },
      "cold": {
        "min_age": "60d",
        "actions": {
          "freeze": {}
        }
      },
      "delete": {
        "min_age": "90d",
        "actions": {
          "delete": {}
        }
      }
    }
  }
}

This policy implements a hot-warm-cold-delete architecture that optimizes storage and performance based on the age of your log data. Recent logs remain in the “hot” phase for fast access, gradually moving through “warm” and “cold” phases with reduced storage requirements, before eventually being deleted after the retention period. Because the policy uses rollover, each min_age is measured from the time an index rolls over rather than from its creation. The freeze action is deprecated in recent Elasticsearch releases, so check the ILM documentation for your version before relying on it.
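To make the thresholds in this policy concrete, the sketch below maps an index age in days to the phase the policy would target. It is only an illustration of the min_age values above: the function name phase_for_age is hypothetical, and real transitions lag slightly because ILM evaluates policies periodically.

```shell
# Map an index age in days to the phase the policy above would target,
# using its min_age values of 30d, 60d, and 90d.
phase_for_age() {
  age_days="$1"
  if   [ "$age_days" -ge 90 ]; then echo delete
  elif [ "$age_days" -ge 60 ]; then echo cold
  elif [ "$age_days" -ge 30 ]; then echo warm
  else echo hot
  fi
}

for d in 3 30 75 90; do
  printf '%3s days -> %s\n' "$d" "$(phase_for_age "$d")"
done

# Against a live cluster (localhost:9200 is an assumption), the actual
# phase of each index can be read with:
#   curl -s "http://localhost:9200/logstash-*/_ilm/explain?pretty"
```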

Performance Tuning

Fine-tune Elasticsearch performance by adjusting shard allocation, caching strategies, and query optimization. Configure shard allocation awareness to distribute shards across physical hardware:

cluster.routing.allocation.awareness.attributes: rack_id
node.attr.rack_id: rack1

Optimize cache usage by configuring appropriate sizes for field data and filter caches based on your available memory and query patterns. Use index templates to set optimal mappings and settings for new indices, improving search performance and storage efficiency:

PUT _template/logs_template
{
  "index_patterns": ["logstash-*"],
  "settings": {
    "number_of_shards": 3,
    "number_of_replicas": 1,
    "refresh_interval": "5s"
  },
  "mappings": {
    "properties": {
      "@timestamp": { "type": "date" },
      "message": { "type": "text", "norms": false },
      "host": { "type": "keyword" }
    }
  }
}

Troubleshooting Common Issues

Despite careful configuration, issues may arise with your ELK Stack deployment. This section addresses common problems and their solutions.

Memory-Related Issues

Elasticsearch frequently experiences memory-related problems, particularly “out of memory” errors or poor performance due to excessive garbage collection. If Elasticsearch fails with memory errors, check your JVM heap settings in jvm.options and ensure they’re appropriate for your available system memory. Monitor garbage collection metrics through Elasticsearch’s _nodes/stats API to identify and address inefficient memory usage patterns.
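As a starting point for choosing heap values, the sketch below applies a widely used rule of thumb: give Elasticsearch about half of physical RAM, capped just under 32 GiB. That rule and the function name suggest_heap_mb are assumptions introduced here, not settings taken from this guide, so treat the output as a suggestion to review.

```shell
# Suggest a heap size in MiB: half of physical RAM, capped at 31 GiB.
# This is a common rule of thumb, not a value prescribed by this guide.
suggest_heap_mb() {
  ram_mb="$1"
  half_mb=$(( ram_mb / 2 ))
  cap_mb=31744   # 31 GiB expressed in MiB
  if [ "$half_mb" -gt "$cap_mb" ]; then
    echo "$cap_mb"
  else
    echo "$half_mb"
  fi
}

# Read total RAM from /proc/meminfo (reported in kB) when available
# and print matching -Xms/-Xmx lines for jvm.options.
if [ -r /proc/meminfo ]; then
  total_ram_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
  heap_mb=$(suggest_heap_mb "$total_ram_mb")
  echo "-Xms${heap_mb}m"
  echo "-Xmx${heap_mb}m"
fi

# Garbage collection counters (localhost:9200 is an assumption):
#   curl -s "http://localhost:9200/_nodes/stats/jvm?pretty"
```

Setting -Xms and -Xmx to the same value avoids heap resizing at runtime.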

The bootstrap checks in Elasticsearch may prevent it from starting if system settings are incorrect. Verify that vm.max_map_count is set to at least 262144 in your sysctl configuration (sysctl.conf, or a drop-in file under /etc/sysctl.d/ on systemd-based systems such as Manjaro) and that the elasticsearch user has sufficient file descriptor limits in limits.conf. For production deployments, disable memory swapping either at the system level or through Elasticsearch’s configuration (the bootstrap.memory_lock setting) to prevent performance degradation.
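The vm.max_map_count check can be scripted. This minimal sketch compares the running kernel value against 262144, the minimum that Elasticsearch's bootstrap check expects. The helper name map_count_ok is hypothetical.

```shell
# Minimum vm.max_map_count that Elasticsearch's bootstrap check expects.
REQUIRED_MAP_COUNT=262144

# Succeed when the given value meets the minimum.
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Inspect the live kernel value when running on Linux.
if [ -r /proc/sys/vm/max_map_count ]; then
  current=$(cat /proc/sys/vm/max_map_count)
  if map_count_ok "$current"; then
    echo "vm.max_map_count=$current is sufficient"
  else
    echo "vm.max_map_count=$current is too low; raise it with:"
    echo "  sudo sysctl -w vm.max_map_count=$REQUIRED_MAP_COUNT"
  fi
fi
```

A value set with sysctl -w lasts only until the next reboot, so the persistent setting still belongs in your sysctl configuration.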

Service Startup Failures

If Elasticsearch fails to start, check the logs at /var/log/elasticsearch/elasticsearch.log for specific error messages. Common issues include port conflicts, insufficient permissions, or incorrect configuration settings. Verify that the elasticsearch user has appropriate permissions to all required directories and files, and that no other service is using the configured ports.

For Kibana startup failures, examine /var/log/kibana/kibana.log for error messages. Common issues include connectivity problems with Elasticsearch, incorrect authentication credentials, or port conflicts. Try running Kibana directly from the command line with the --debug flag for more detailed error information.

Logstash configuration errors often prevent the service from starting. Use the --config.test_and_exit flag to validate your configuration files before starting the service, and check /var/log/logstash/logstash-plain.log for detailed error messages.
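A small wrapper makes the validation step easy to repeat after each configuration change. The sketch below assumes the common package layout (binary at /usr/share/logstash/bin/logstash, settings in /etc/logstash) and a logstash service user; all three are assumptions to adjust for your installation, and the function name validate_logstash_config is hypothetical.

```shell
# Paths follow the common package layout and are assumptions.
LOGSTASH_BIN="${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}"
LOGSTASH_SETTINGS="${LOGSTASH_SETTINGS:-/etc/logstash}"

# Validate the pipeline configuration without starting the service.
# Returns 127 if the binary is missing, otherwise Logstash's exit code.
validate_logstash_config() {
  if [ ! -x "$LOGSTASH_BIN" ]; then
    echo "logstash binary not found at $LOGSTASH_BIN" >&2
    return 127
  fi
  sudo -u logstash "$LOGSTASH_BIN" \
    --path.settings "$LOGSTASH_SETTINGS" \
    --config.test_and_exit
}
```

Call validate_logstash_config before restarting the service. If it reports a problem, tail -n 50 /var/log/logstash/logstash-plain.log usually shows the offending file and line.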

Data Ingestion Problems

If logs are not appearing in Elasticsearch, troubleshoot the data ingestion pipeline step by step. First, verify that log sources are generating data and that Filebeat (or other data shippers) is correctly configured to collect it. Check Filebeat logs at /var/log/filebeat/filebeat for any connection or configuration issues.

Next, ensure that Logstash is receiving data by monitoring the input plugins’ metrics through the Monitoring API or by adding stdout output for debugging. Examine Logstash’s filter configurations for any parsing errors that might be dropping or corrupting events. Finally, verify that the Elasticsearch output is correctly configured with the proper host, port, and authentication credentials.
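The step-by-step check above can be partly scripted. This sketch reads Logstash's event counters from its monitoring API, which listens on port 9600 by default. The localhost address and the helper names json_number and logstash_events are assumptions. The JSON parsing is deliberately crude (first numeric match for a key) to avoid extra dependencies.

```shell
# Logstash monitoring API address; localhost is an assumption.
LS_API="${LS_API:-http://localhost:9600}"

# Print the first numeric value stored under the given key in the
# JSON read from stdin. Crude, but needs only grep and head.
json_number() {
  grep -o "\"$1\"[[:space:]]*:[[:space:]]*[0-9][0-9]*" \
    | head -n 1 \
    | grep -o '[0-9][0-9]*$'
}

# Fetch the global event counters (in, filtered, out).
logstash_events() {
  curl -s --max-time 5 "$LS_API/_node/stats/events"
}

# Shipper-side checks, using Filebeat's built-in test subcommands:
#   sudo filebeat test config
#   sudo filebeat test output
```

For example, logstash_events | json_number in shows how many events Logstash has received, and the same call with out shows how many were delivered. An in count stuck at zero points at the shipper or the input, while in growing without out points at filters or the Elasticsearch output.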

Interface and Visualization Issues

If Kibana shows no data or visualizations don’t work as expected, start by checking that your index patterns are correctly configured to match your Elasticsearch indices. Verify that the time filter in Kibana is set to an appropriate range that includes your log data’s timestamps. Network connectivity issues between Kibana and Elasticsearch can also cause problems, particularly in distributed deployments or when using SSL/TLS encryption.
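Before adjusting anything in Kibana, it helps to confirm that matching indices exist and contain documents. The sketch below sums document counts from the _cat/indices API. It assumes Elasticsearch at localhost:9200 over plain HTTP and the logstash-* naming used earlier in this guide; the helper names sum_docs and count_matching_docs are hypothetical.

```shell
# Elasticsearch address and index pattern; both are assumptions.
ES_URL="${ES_URL:-http://localhost:9200}"
INDEX_PATTERN="${INDEX_PATTERN:-logstash-*}"

# Sum the second column (docs.count) of "index docs.count" rows
# read from stdin; prints 0 for empty input.
sum_docs() {
  awk '{ total += $2 } END { print total + 0 }'
}

# Print the total number of documents in indices matching the pattern.
count_matching_docs() {
  curl -s --max-time 5 \
    "$ES_URL/_cat/indices/$INDEX_PATTERN?h=index,docs.count" | sum_docs
}
```

If count_matching_docs prints 0, the problem lies upstream in ingestion. A non-zero count with an empty Kibana view suggests the index pattern or time filter instead.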

For visualization rendering problems, check your browser’s console for JavaScript errors and ensure that your browser is compatible with the current Kibana version. Some visualizations may require specific field types or data formats to render correctly, so verify that your log data meets these requirements.

Congratulations! You have successfully installed the ELK Stack. Thanks for using this tutorial to install the ELK Stack open-source log analytics platform on your Manjaro system. For additional help or useful information, we recommend you check the official Elastic website.