How To Install Open WebUI on Fedora 42

Open WebUI represents a significant advancement for AI enthusiasts and developers seeking to harness the power of large language models (LLMs) locally on their Fedora systems. This modern web interface transforms how users interact with AI models, offering a seamless experience without the constraints of cloud-based alternatives. With Fedora 42’s robust capabilities, installing Open WebUI provides a perfect environment for running sophisticated AI operations with enhanced privacy, customization options, and zero API costs.

This guide covers three installation methods (Snap, Docker, and Python) for deploying Open WebUI on Fedora 42. Whether you’re a developer, privacy advocate, or AI experimenter, it walks you through each step, addresses common problems, and shows how to tune the setup for performance.

Understanding Open WebUI and Ollama

Open WebUI functions as a sophisticated graphical front-end designed specifically for interacting with locally hosted large language models. This web interface serves as a bridge between users and powerful AI models, enabling intuitive conversations and complex AI operations through a clean, accessible interface.

The platform offers several key features that enhance the AI experience:

- A responsive chat interface for smooth interaction with models

- Comprehensive model management capabilities

- Customizable prompt templates for consistent outputs

- Multi-user support with authentication options

- Integration with various LLM backends, particularly Ollama

The Ollama Connection

Open WebUI works particularly well with Ollama, a lightweight framework for running LLMs locally. This partnership creates a powerful ecosystem where Ollama handles the technical aspects of model execution while Open WebUI delivers an intuitive interface for interactions. The combination effectively removes barriers to entry for working with sophisticated AI models.

For Fedora 42 users, this stack offers significant advantages over cloud-based alternatives:

- Complete data privacy with no information leaving your system

- Elimination of usage costs associated with commercial AI APIs

- Flexibility to customize models according to specific requirements

- Freedom from internet connectivity requirements

- Greater transparency in how models function and process data

This local AI approach proves particularly valuable for developers building AI-powered applications, users with strict privacy requirements, researchers experimenting with model capabilities, and hobbyists exploring AI without recurring expenses.

Prerequisites

Before installing Open WebUI on Fedora 42, ensuring your system meets certain requirements will guarantee a smooth experience. These prerequisites cover hardware capabilities, software dependencies, and system configurations that support optimal performance.

Hardware Requirements:

- CPU: A modern multi-core processor (minimum 4 cores recommended)

- RAM: At least 8GB for basic models; 16GB or more for larger models

- Storage: Minimum 20GB free space for application and models

- GPU: While optional, an NVIDIA GPU significantly improves performance

Software Requirements:

- Fedora 42 with current updates applied

- Terminal access with administrative privileges

- Basic understanding of command-line operations

- Python 3.11 if using the Python installation method (the version the Open WebUI documentation recommends)

- Docker if using the container installation method

Preliminary System Preparation:

Update your Fedora system to ensure all packages are current:

sudo dnf update -y

Verify your system architecture and capabilities:

lscpu

free -h

df -h

Check network connectivity and firewall settings, as Open WebUI requires local network access to function properly. If you plan to access the interface from other devices on your network, you may need to adjust firewall rules:

sudo firewall-cmd --permanent --add-port=8080/tcp

sudo firewall-cmd --reload

With these prerequisites satisfied, your Fedora 42 system is ready for Open WebUI installation through any of the methods detailed in the following sections.
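The hardware checks above can be scripted. The following is a minimal sketch, assuming a Linux system with nproc, awk, and df available; the helper names meets_minimum and report are invented for illustration, and the thresholds simply repeat the minimums listed in this section.

```shell
#!/bin/sh
# Preflight sketch: compare this machine against the minimums listed above
# (4 CPU cores, 8 GB RAM, 20 GB free disk). Helper names are hypothetical.

# meets_minimum VALUE MINIMUM -> exit status 0 when VALUE >= MINIMUM
meets_minimum() {
    [ "$1" -ge "$2" ]
}

# report LABEL VALUE MINIMUM UNIT -> prints one OK/LOW line
report() {
    if meets_minimum "$2" "$3"; then
        echo "OK   $1: $2 $4 (minimum $3)"
    else
        echo "LOW  $1: $2 $4 (minimum $3)"
    fi
}

cores=$(nproc)

# MemTotal is reported in kB; round to the nearest GiB so a machine sold as
# "8 GB" (slightly less after kernel reservations) is not flagged as low.
ram_gb=$(awk '/^MemTotal:/ { printf "%d", ($2 + 524288) / 1048576 }' /proc/meminfo)

# Free space on the filesystem holding the home directory, in whole GiB.
disk_gb=$(df -Pk "${HOME:-/}" | awk 'NR == 2 { printf "%d", $4 / 1048576 }')

report "CPU cores" "$cores" 4 "cores"
report "RAM" "$ram_gb" 8 "GB"
report "Free disk" "$disk_gb" 20 "GB"
```

A LOW line does not block installation; it only signals that larger models may load slowly or fail to load.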

Method 1: Installing Open WebUI via Snap

Snap packages offer a convenient way to install applications on Fedora 42, providing isolated environments with bundled dependencies. This approach simplifies the installation process for Open WebUI while ensuring compatibility across different system configurations.

Setting Up Snap on Fedora 42

Before installing Open WebUI, you’ll need to set up the Snap package manager if it’s not already available on your system:

sudo dnf install snapd -y

After installation, enable the systemd unit that manages the main snap communication socket:

sudo systemctl enable --now snapd.socket

Create the symbolic link to enable classic snap support:

sudo ln -s /var/lib/snapd/snap /snap

For the changes to take full effect, restart your system:

sudo reboot

Installing Open WebUI via Snap Store

Once your system has rebooted with Snap properly configured, proceed with installing Open WebUI:

sudo snap install open-webui

This command downloads and installs the latest stable version of Open WebUI, handling all dependencies automatically. The installation process typically takes a few minutes depending on your internet connection speed.

Post-Installation Configuration

After installation completes, verify that the service is running:

snap services open-webui

If the service isn’t running automatically, start it with:

sudo snap start open-webui

The Snap installation configures Open WebUI to run on port 8080 by default. Access the interface by opening a web browser and navigating to:

http://localhost:8080

Advantages and Limitations

The Snap installation method offers several benefits:

- Simplified installation with automatic dependency management

- Isolated environment that doesn’t interfere with system packages

- Automatic updates when new versions are released

- Consistent behavior across different Fedora configurations

However, some limitations exist:

- Slightly higher resource usage due to containerization

- Potential permission issues when accessing external directories

- Less flexibility for custom configurations compared to other methods

This installation method works well for users seeking a straightforward setup with minimal system modification and automatic updates.

Method 2: Docker Installation

Docker provides a robust and flexible method for deploying Open WebUI on Fedora 42, ensuring consistency across different environments while isolating the application from your system. This approach is particularly useful for users who prefer containerized deployments or need to run multiple instances with different configurations.

Installing Docker on Fedora 42

First, ensure Docker is properly installed on your system:

sudo dnf -y install dnf-plugins-core

sudo dnf config-manager addrepo --from-repofile=https://download.docker.com/linux/fedora/docker-ce.repo

sudo dnf install docker-ce docker-ce-cli containerd.io docker-compose-plugin

Fedora 42 uses DNF 5, where a repository is added with the addrepo subcommand rather than the older --add-repo flag.

After installation, start and enable the Docker service:

sudo systemctl start docker

sudo systemctl enable docker

Verify Docker is running correctly:

sudo docker run hello-world

Deploying Open WebUI via Docker

With Docker properly configured, you can now pull and run the Open WebUI container:

sudo docker pull ghcr.io/open-webui/open-webui:main

This command downloads the latest Open WebUI image from the GitHub Container Registry. Once downloaded, run the container with proper configuration:

sudo docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

This command:

- Runs the container in detached mode (-d)

- Maps port 3000 on your host to port 8080 in the container

- Adds host.docker.internal mapping to allow container-to-host communication

- Creates a persistent volume for data storage

- Names the container “open-webui”

- Configures automatic restart
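Because the same long command recurs throughout this guide with only the host port and image tag changing, it can help to assemble it from parts. The sketch below prints the command rather than running it; the function name build_run_cmd and its two parameters are hypothetical, while every flag is taken from the command explained above.

```shell
#!/bin/sh
# Dry-run helper: prints the docker run command instead of executing it.
# build_run_cmd HOST_PORT IMAGE_TAG (both parameters are invented for this sketch)
build_run_cmd() {
    host_port=$1
    image_tag=$2
    printf '%s' "docker run -d"
    # printf repeats the format once per argument, joining the flags with spaces.
    printf ' %s' \
        "-p ${host_port}:8080" \
        "--add-host=host.docker.internal:host-gateway" \
        "-v open-webui:/app/backend/data" \
        "--name open-webui" \
        "--restart always" \
        "ghcr.io/open-webui/open-webui:${image_tag}"
    printf '\n'
}

# Reproduces the command shown above.
build_run_cmd 3000 main
```

Review the printed line, then run it with sudo once it looks right.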

Connecting to Ollama

If you’re running Ollama on the same machine, you may need to specify the Ollama API base URL:

sudo docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -e OLLAMA_BASE_URL=http://host.docker.internal:11434 -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

For systems where the standard approach doesn’t work, try using the host network. In this mode no -p mapping applies, so the interface is served directly on port 8080:

sudo docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Verifying the Installation

After starting the container, Open WebUI should be accessible at:

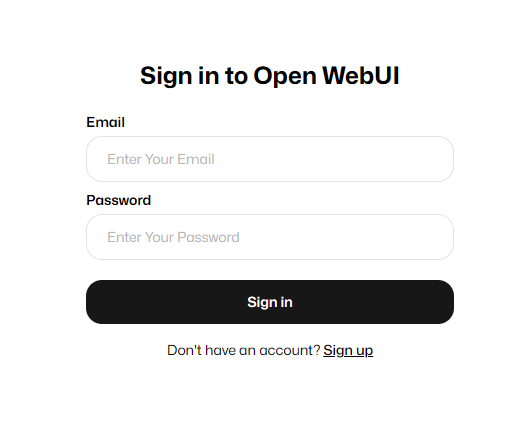

http://localhost:3000

When you first access the interface, you’ll be prompted to create an administrator account. Complete this step to begin using Open WebUI.

Managing the Docker Container

To check the status of your container:

sudo docker ps -a | grep open-webui

To view logs for troubleshooting:

sudo docker logs open-webui

To stop, start, or restart the container:

sudo docker stop open-webui

sudo docker start open-webui

sudo docker restart open-webui

The Docker installation method provides excellent isolation and consistency, making it an ideal choice for most Fedora 42 users implementing Open WebUI.

GPU Configuration for Open WebUI

Leveraging GPU acceleration dramatically improves the performance of large language models in Open WebUI, reducing response times and enabling the use of more sophisticated models. For Fedora 42 users with compatible NVIDIA hardware, configuring GPU support involves several key steps.

Installing NVIDIA Drivers on Fedora 42

First, ensure you have the proper NVIDIA drivers installed. The packages below come from the RPM Fusion nonfree repository, which must be enabled beforehand:

sudo dnf install akmod-nvidia xorg-x11-drv-nvidia-cuda

After installation, reboot your system to activate the drivers:

sudo reboot

Verify the drivers are properly installed and the GPU is detected:

nvidia-smi

This command should display information about your GPU, including the driver version and CUDA capability.

Installing NVIDIA Container Toolkit

To enable Docker containers to access your GPU, install the NVIDIA Container Toolkit:

sudo dnf install nvidia-container-toolkit

Configure the Docker daemon to use the NVIDIA runtime. The nvidia-ctk utility that ships with the toolkit writes the runtime entry to /etc/docker/daemon.json:

sudo nvidia-ctk runtime configure --runtime=docker

Restart Docker to apply these changes:

sudo systemctl restart docker

Running Open WebUI with GPU Support

Now you can run Open WebUI with GPU acceleration using the CUDA-enabled image:

sudo docker run -d -p 3000:8080 --gpus all --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:cuda

This command specifies the --gpus all flag to make all GPUs available to the container and uses the CUDA-specific image variant.

Verifying GPU Utilization

To confirm that Open WebUI is utilizing your GPU:

nvidia-smi -l 1

This command continuously monitors GPU usage. When interacting with models in Open WebUI, you should see increased GPU utilization and memory consumption.
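For a more compact reading than the full nvidia-smi table, its CSV query mode can be piped through a small filter. This is a sketch only: gpu_mem_percent is a hypothetical helper name, and the sample line stands in for real output so the snippet runs on machines without a GPU.

```shell
#!/bin/sh
# gpu_mem_percent reads lines of "utilization, memory.used, memory.total"
# (the layout produced by the nvidia-smi query shown at the bottom) and prints
# GPU utilization plus memory use as whole percentages, one line per GPU.
gpu_mem_percent() {
    awk -F', *' '$3 > 0 { printf "gpu%d util=%d%% mem=%d%%\n", NR - 1, $1, ($2 * 100) / $3 }'
}

# Sample line standing in for real nvidia-smi output.
echo "37, 4096, 8192" | gpu_mem_percent

# On a machine with the NVIDIA driver installed, feed it live data instead:
# nvidia-smi --query-gpu=utilization.gpu,memory.used,memory.total \
#     --format=csv,noheader,nounits | gpu_mem_percent
```

Memory use climbing toward 100% while a model loads is a sign that a smaller or more heavily quantized model may be needed.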

Troubleshooting GPU Issues

If you encounter the error “could not select device driver ‘nvidia’ with capabilities: [[gpu]]”, ensure:

- NVIDIA drivers are properly installed

- NVIDIA Container Toolkit is correctly configured

- Docker has been restarted after configuration changes

- Your CUDA version is compatible with the container image

For users with older GPUs, you may need to use a specific CUDA version that supports your hardware. The CUDA 12.1 runtime version is generally reliable for most recent GPUs.

Properly configured GPU acceleration can provide 5-10x performance improvements for model inference, significantly enhancing the Open WebUI experience on Fedora 42.

Method 3: Python Installation

Installing Open WebUI using Python provides the most flexible and customizable approach, allowing direct integration with your Fedora 42 system. This method is particularly valuable for developers who may want to modify code or contribute to the project.

Setting Up the Python Environment

First, check which Python versions are available. The Open WebUI documentation recommends Python 3.11, while Fedora 42 ships a newer interpreter as its default python3:

python3 --version

python3.11 --version

If python3.11 is not found, install it:

sudo dnf install python3.11

Next, install the essential development tools and libraries:

sudo dnf install gcc python3.11-devel

Creating a Virtual Environment

Using a virtual environment isolates the Open WebUI dependencies from your system Python:

# Using venv

python3.11 -m venv open-webui-env

source open-webui-env/bin/activate

# Alternatively, using Conda

conda create -n open-webui python=3.11

conda activate open-webui

Installing Open WebUI via pip

With your virtual environment activated, install Open WebUI:

pip install open-webui

Alternatively, you can install directly from the GitHub repository for the latest development version:

pip install git+https://github.com/open-webui/open-webui.git

Running Open WebUI

After installation, launch the Open WebUI server:

open-webui serve

This starts the server on the default port 8080. To specify a different host or port:

open-webui serve --host 0.0.0.0 --port 9000

Creating a Startup Service

For automatic startup with your system, create a systemd service:

sudo nano /etc/systemd/system/openwebui.service

Add the following content, adjusting paths as needed:

[Unit]

Description=Open WebUI Server

After=network.target

[Service]

Type=simple

User=your_username

WorkingDirectory=/path/to/your/directory

ExecStart=/path/to/open-webui-env/bin/open-webui serve

Restart=on-failure

[Install]

WantedBy=multi-user.target

Reload systemd so it picks up the new unit, then enable and start the service:

sudo systemctl daemon-reload

sudo systemctl enable openwebui.service

sudo systemctl start openwebui.service

Data Management

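The Python installation typically stores data in the following locations:

- User data: ~/.local/share/open-webui/

- Configuration: ~/.config/open-webui/

- Logs: ~/.cache/open-webui/logs/

You can back up these directories to preserve your configuration and user data. The exact paths can differ between versions and can be overridden with the DATA_DIR environment variable, so confirm them on your system before relying on a backup.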

The Python installation method provides the deepest integration with your Fedora 42 system and the most control over configuration options, making it ideal for advanced users and developers.

Advanced Configuration Options

Open WebUI offers numerous configuration options to customize its behavior according to your specific requirements. These advanced settings allow you to tailor the security model, user access, and integration with other services.

Authentication Configuration

By default, Open WebUI implements user authentication. For single-user environments where security is less critical, you can disable authentication:

# Docker method

sudo docker run -d -p 3000:8080 -e WEBUI_AUTH=False --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

# Python method

export WEBUI_AUTH=False

open-webui serve

For multi-user environments, keep authentication enabled and create separate accounts for each user through the admin interface.

Ollama Connection Configuration

To connect Open WebUI to a remote Ollama instance:

# Docker method

sudo docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=http://your-ollama-server:11434 -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

# Python method

export OLLAMA_BASE_URL=http://your-ollama-server:11434

open-webui serve

For systems where direct connection fails, implementing a reverse proxy with Nginx can resolve connectivity issues:

server {
    listen 80;
    server_name your_domain_or_ip;

    location / {
        proxy_pass http://localhost:11434;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}

Port Configuration

To change the default port:

# Docker method

sudo docker run -d -p 8888:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

# Python method

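open-webui serve --port 8888

Environment Variables

Open WebUI supports numerous environment variables for configuration:

- WEBUI_AUTH: Enable/disable authentication

- OLLAMA_BASE_URL: Specify Ollama server location

- DATA_DIR: Directory that holds the database and other application data

- HOST: Interface binding address

- PORT: Server port

Variable names have changed between releases, so check them against the environment variable reference for the version you run.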
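When several variables are in play, keeping them in a file avoids retyping long -e chains. The sketch below uses Docker's --env-file option with the two variables used throughout this guide; the file name open-webui.env and its location are arbitrary choices for illustration, and the docker command is printed rather than executed.

```shell
#!/bin/sh
# Write the settings to an env file once, then reuse it for every docker run.
# The file name and location are arbitrary choices for this sketch.
env_file="${TMPDIR:-/tmp}/open-webui.env"

cat > "$env_file" <<'EOF'
WEBUI_AUTH=True
OLLAMA_BASE_URL=http://host.docker.internal:11434
EOF

echo "Wrote $env_file:"
cat "$env_file"

# Printed rather than executed, so the sketch is safe to run as-is.
echo "docker run -d -p 3000:8080 --env-file $env_file --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main"
```

For a Python installation the same file can be referenced from the systemd unit with an EnvironmentFile= line in the [Service] section.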

Automated Startup with systemd

For reliable operation, configure Open WebUI to start automatically using systemd:

sudo nano /etc/systemd/system/openwebui.service

Create appropriate service definitions based on your installation method, then enable the service:

sudo systemctl enable openwebui.service

sudo systemctl start openwebui.service

These advanced configurations allow you to integrate Open WebUI seamlessly into your Fedora 42 environment while addressing specific security, performance, and accessibility requirements.
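For the Python installation, the service definition can be generated from a few values instead of edited by hand. This is a sketch under stated assumptions: render_unit is a hypothetical helper, the user name and paths in the example call are placeholders, and the ExecStart line assumes the serve subcommand provided by the pip package.

```shell
#!/bin/sh
# render_unit USER WORKDIR VENV prints a unit file for the Python install.
# The three arguments are placeholders for your own account and paths.
render_unit() {
    cat <<EOF
[Unit]
Description=Open WebUI Server
After=network.target

[Service]
Type=simple
User=$1
WorkingDirectory=$2
ExecStart=$3/bin/open-webui serve
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

# Example values only. Review the output before installing it, for example:
#   render_unit alice /home/alice /home/alice/open-webui-env \
#       | sudo tee /etc/systemd/system/openwebui.service
render_unit alice /home/alice /home/alice/open-webui-env
```

After writing the file, run sudo systemctl daemon-reload so systemd notices the new unit before it is enabled.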

Installing and Configuring Ollama

Ollama serves as the backend engine for Open WebUI, handling the actual execution of language models. Proper installation and configuration of Ollama is essential for a fully functional Open WebUI setup on Fedora 42.

Installing Ollama

Download and install Ollama using the official script:

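curl -fsSL https://ollama.com/install.sh | sh

On systemd-based systems such as Fedora, the install script registers Ollama as a service. Make sure it is enabled and running:

sudo systemctl enable --now ollama

To run Ollama in the foreground instead, for example while debugging, stop the service and use ollama serve.

Configuring Ollama for Network Access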

By default, Ollama only listens on localhost, which can cause connection issues with containerized instances of Open WebUI. To enable network access, modify the Ollama configuration:

sudo mkdir -p /etc/systemd/system/ollama.service.d/

sudo nano /etc/systemd/system/ollama.service.d/override.conf

Add the following content to the configuration file:

[Service]

Environment="OLLAMA_HOST=0.0.0.0"

Environment="OLLAMA_ORIGINS=*"

Reload the systemd configuration and restart Ollama:

sudo systemctl daemon-reload

sudo systemctl restart ollama

Verify that Ollama is properly configured by checking its API endpoint:

curl http://localhost:11434/api/version

You should receive a JSON response with the Ollama version information.
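In scripts it is often more useful to extract just the version string from that response. A minimal sketch, assuming the response is a small JSON object with a "version" key; extract_version is a hypothetical helper and the sample version number is made up.

```shell
#!/bin/sh
# extract_version pulls the "version" value out of the JSON body returned by
# /api/version, using sed so no extra tools are required.
extract_version() {
    sed -n 's/.*"version"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Sample body standing in for a live response; the version number is made up.
echo '{"version":"0.0.0"}' | extract_version

# Against a running server:
# curl -fsS http://localhost:11434/api/version | extract_version
```

An empty result means the server did not answer with the expected JSON, which usually points to Ollama not running or listening on a different address.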

Installing Language Models

Download and install language models through the command line:

ollama pull llama3

ollama pull mistral

ollama pull llama3:70b

The larger models require more RAM and storage space. For example, the llama3:70b model requires approximately 40GB of RAM to run effectively.

Testing Ollama-Open WebUI Integration

To verify that Ollama and Open WebUI are properly integrated:

- Access Open WebUI in your browser

- Check the models dropdown to confirm that installed models appear

- Select a model and send a test message

- Verify that the model processes the request and returns a response

If models don’t appear in Open WebUI, restart both services:

sudo systemctl restart ollama

sudo docker restart open-webui # For Docker installations

Proper configuration of Ollama is critical for Open WebUI functionality. Most connection issues between the two services stem from network configuration problems that can be resolved by correctly setting the OLLAMA_HOST and OLLAMA_ORIGINS environment variables.

Troubleshooting Common Issues

Even with careful installation, users may encounter challenges when setting up Open WebUI on Fedora 42. This section addresses the most common issues and provides practical solutions to resolve them.

Connection Issues Between Open WebUI and Ollama

The most frequent problem users face is Open WebUI failing to connect to the Ollama backend. If you see the message “Connection Issue or Update Needed,” try these solutions:

- Verify Ollama is running and accessible:

curl http://localhost:11434/api/version

- Check Ollama network configuration:

sudo nano /etc/systemd/system/ollama.service.d/override.conf

Ensure it contains:

[Service]

Environment="OLLAMA_HOST=0.0.0.0"

Environment="OLLAMA_ORIGINS=*"

- For Docker installations, use the host network or correct URL:

sudo docker run -d --network=host -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

- Implement an Nginx reverse proxy to resolve complex networking issues.
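A frequent cause of this error is an Ollama URL that does not match the container's network mode. The sketch below maps the two modes used in this guide to the URL each one needs; the function name and the mode labels "bridge" and "host" are invented for illustration.

```shell
#!/bin/sh
# ollama_url_for MODE prints the Ollama URL that matches how the Open WebUI
# container is networked. MODE is a label invented for this sketch:
#   bridge - default networking with --add-host=host.docker.internal:host-gateway
#   host   - container started with --network=host
ollama_url_for() {
    case "$1" in
        bridge) echo "http://host.docker.internal:11434" ;;
        host)   echo "http://127.0.0.1:11434" ;;
        *)      echo "unknown mode: $1" >&2; return 1 ;;
    esac
}

for mode in bridge host; do
    echo "$mode -> OLLAMA_BASE_URL=$(ollama_url_for "$mode")"
done
```

Inside a bridge-mode container, 127.0.0.1 refers to the container itself, which is why the host.docker.internal name is needed there.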

GPU Detection Problems

If Open WebUI fails to detect or utilize your GPU:

- Verify GPU drivers are properly installed:

nvidia-smi

- Ensure NVIDIA Container Toolkit is installed:

sudo dnf install nvidia-container-toolkit

- Check Docker configuration for GPU support:

sudo docker info | grep Runtimes

- Try the CUDA-specific image with explicit GPU flags:

sudo docker run -d -p 3000:8080 --gpus all --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:cuda

Database and Storage Issues

Problems with the database can cause unexpected behavior:

- Check permissions on data directories (the Docker volume path is readable only by root):

sudo ls -la /var/lib/docker/volumes/open-webui/_data

- Reset the database (caution: this erases all user data):

sudo docker stop open-webui

sudo docker rm open-webui

sudo docker volume rm open-webui

# Then reinstall Open WebUI

Port Conflicts

If the default ports are already in use:

- Check which services are using conflicting ports:

sudo ss -tulpn | grep ':8080\|:3000'

- Use different ports when starting Open WebUI:

sudo docker run -d -p 9000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
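Rather than guessing at a free port, the listener table can be scanned for one. This is a rough sketch: port_listed and first_free_port are hypothetical helpers, and the parsing assumes the column layout of ss -tln, where the local address is the fourth field.

```shell
#!/bin/sh
# port_listed PORT reads `ss -tln` style output on stdin and succeeds when
# some local address ends in :PORT.
port_listed() {
    awk -v port="$1" '
        { n = split($4, parts, ":"); if (parts[n] == port) found = 1 }
        END { exit(found ? 0 : 1) }
    '
}

# first_free_port START scans upward from START using one snapshot of the
# current TCP listeners and prints the first port nobody is listening on.
first_free_port() {
    snapshot=$(ss -tln 2>/dev/null)
    candidate=$1
    while echo "$snapshot" | port_listed "$candidate"; do
        candidate=$((candidate + 1))
    done
    echo "$candidate"
}

echo "First free port at or above 3000: $(first_free_port 3000)"
```

The result can be used as the host side of the -p mapping. Another process may still claim the port between the check and the docker run, so treat it as a hint rather than a guarantee.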

Log Analysis for Troubleshooting

When encountering persistent issues, check the logs:

# For Docker installations

sudo docker logs open-webui

# For Python installations

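cat ~/.cache/open-webui/logs/open-webui.log

If that file does not exist and Open WebUI runs under the systemd unit created earlier, read the journal instead:

sudo journalctl -u openwebui.service

Look for error messages that provide specific details about the problems you’re experiencing.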

By systematically addressing these common issues, you can ensure a smooth Open WebUI experience on your Fedora 42 system.

Performance Optimization

Optimizing Open WebUI on Fedora 42 ensures smooth operation with large language models, reducing latency and improving overall responsiveness. These performance enhancements are particularly important when working with resource-intensive models.

Memory Management

Language models require significant memory for optimal performance:

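- Increase available swap space for larger models:

sudo fallocate -l 16G /swapfile

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile

On Fedora’s default Btrfs layout, swapon may reject a swap file created this way; btrfs filesystem mkswapfile is the Btrfs-aware alternative.

- Limit how many models Ollama keeps in memory at once. Ollama has no per-run RAM limit flag, but the OLLAMA_MAX_LOADED_MODELS variable can be added to the [Service] section of the Ollama override file:

Environment="OLLAMA_MAX_LOADED_MODELS=1"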

CPU Optimization

For CPU-only systems:

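- Set thread count to match your available cores:

export OMP_NUM_THREADS=$(nproc)

- Ollama normally picks a thread count on its own. To override it for one session, set the num_thread parameter from inside an interactive ollama run session:

/set parameter num_thread 8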

GPU Performance Tuning

When using NVIDIA GPUs:

- Monitor GPU memory usage to prevent overflow:

watch -n 1 nvidia-smi

- Adjust model quantization to balance performance and memory usage:

ollama pull llama3:8b-instruct-q4_0

The q4_0 suffix indicates 4-bit quantization, which reduces memory requirements at a minor cost to quality. Check the model’s page in the Ollama library for the exact tag names available.
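The effect of quantization on memory can be estimated with simple arithmetic: weight size is roughly parameter count times bits per weight divided by eight. The sketch below applies that rule of thumb; weights_gb is a hypothetical helper, and the figure covers weights only, so real usage is higher once context and runtime overhead are added.

```shell
#!/bin/sh
# weights_gb PARAMS_BILLIONS BITS prints the approximate size of the model
# weights in GB: parameters x bits per weight / 8 bits per byte.
# This ignores context memory and runtime overhead.
weights_gb() {
    awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

echo "8B  at 16-bit: $(weights_gb 8 16) GB"
echo "8B  at  4-bit: $(weights_gb 8 4) GB"
echo "70B at  4-bit: $(weights_gb 70 4) GB"
```

The 70B estimate of 35 GB for weights alone is in line with the roughly 40GB of RAM quoted for llama3:70b earlier in this guide.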

Benchmarking Your Setup

Test your system’s performance to identify optimization opportunities:

ollama run llama3:8b --verbose

The --verbose flag prints timing statistics after each response, including the evaluation rate in tokens per second, helping you compare different configurations and hardware setups.

By implementing these performance optimizations, your Open WebUI installation on Fedora 42 will deliver faster responses and support more concurrent users, even when running sophisticated language models.

Updating and Maintaining Open WebUI

Keeping Open WebUI and its components updated ensures access to the latest features, security patches, and performance improvements. This section outlines best practices for maintaining your Open WebUI installation on Fedora 42.

Checking for Updates

Regularly verify if updates are available:

# For Docker installations

sudo docker pull ghcr.io/open-webui/open-webui:main

# For Python installations

pip install --upgrade open-webui

Updating Docker Installations

To update a Docker-based installation:

sudo docker stop open-webui

sudo docker rm open-webui

sudo docker pull ghcr.io/open-webui/open-webui:main

sudo docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

This process preserves your data using the persistent volume while updating the application code.

Updating Ollama

Keep Ollama updated for compatibility with the latest Open WebUI features:

curl -fsSL https://ollama.com/install.sh | sh

The installation script automatically updates Ollama to the latest version.

Backup Strategies

Before major updates, back up your configuration and data:

# For Docker volumes

sudo docker run --rm -v open-webui:/data -v $(pwd):/backup alpine tar czf /backup/open-webui-backup.tar.gz /data

# For Python installations

tar czf open-webui-backup.tar.gz ~/.local/share/open-webui/ ~/.config/open-webui/

These backups can be restored if issues occur during updates.
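Restoring a Docker volume backup is the reverse of the tar command above. The following sketch prints a dated archive name and the matching restore command without running anything; backup_name and restore_cmd are hypothetical helpers, and the date-based naming scheme is only one possible convention.

```shell
#!/bin/sh
# backup_name prints a dated archive name so repeated backups do not overwrite
# each other. The naming scheme is a choice made for this sketch.
backup_name() {
    echo "open-webui-backup-$(date +%Y%m%d).tar.gz"
}

# restore_cmd ARCHIVE prints the command that unpacks ARCHIVE into the volume.
# tar stores the backed-up files under data/, so extraction targets / to put
# them back at /data, where the volume is mounted.
restore_cmd() {
    printf '%s\n' "docker run --rm -v open-webui:/data -v \"\$(pwd)\":/backup alpine tar xzf /backup/$1 -C /"
}

archive=$(backup_name)
echo "Archive name: $archive"
echo "Restore with: sudo $(restore_cmd "$archive")"
```

Stop the open-webui container before restoring so the database is not written to while files are being replaced.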

Rollback Procedures

If an update causes problems, revert to a previous version:

# For Docker installations

sudo docker stop open-webui

sudo docker rm open-webui

sudo docker pull ghcr.io/open-webui/open-webui:previous-tag

sudo docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:previous-tag

Replace previous-tag with the specific version tag you want to use.

Regular maintenance ensures your Open WebUI installation remains secure, stable, and equipped with the latest capabilities, enhancing your AI interaction experience on Fedora 42.

Congratulations! You have successfully installed Open WebUI. Thanks for using this tutorial for installing Open WebUI on the Fedora 42 Linux system. For additional help or useful information, we recommend you check the Open WebUI website.