In this tutorial, we will show you how to install Apache Spark on Ubuntu 20.04 LTS. Apache Spark is a fast, general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python, and R, along with an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming for stream processing.

This article assumes you have at least basic knowledge of Linux, know how to use the shell, and have access to your own server or VPS. The installation is quite simple. The commands below use ‘sudo‘ to get root privileges; if you are already logged in as root, you can leave it out. I will show you the step-by-step installation of Apache Spark on an Ubuntu 20.04 LTS (Focal Fossa) server. You can follow the same instructions for Ubuntu 18.04, 16.04, and other Debian-based distributions such as Linux Mint.

Prerequisites

- A server running one of the following operating systems: Ubuntu 20.04, 18.04, 16.04, or another Debian-based distribution such as Linux Mint.

- It’s recommended that you use a fresh OS install to prevent any potential issues.

- A non-root sudo user or access to the root user. We recommend acting as a non-root sudo user, however, as you can harm your system if you’re not careful when acting as root.

Install Apache Spark on Ubuntu 20.04 LTS Focal Fossa

Step 1. First, make sure that all your system packages are up-to-date by running the following apt commands in the terminal.

sudo apt update

sudo apt upgrade

Step 2. Installing Java.

Apache Spark requires Java to run, so let’s install it on our Ubuntu system:

sudo apt install default-jdk

Check the installed Java version with the command below:

java -version
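The Spark 3.0 documentation lists Java 8 and Java 11 as supported runtimes, so it can help to pull the major version out of the `java -version` output. The following is a minimal sketch; `java_major` is a hypothetical helper name introduced here for illustration, and the parsing assumes the usual quoted version string (for example "11.0.7" or "1.8.0_252").

```shell
# java_major: print the major Java version for a version string.
# Hypothetical helper; handles both the legacy "1.8.0_252" and the
# modern "11.0.7" numbering schemes.
java_major() {
  v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;          # legacy scheme: drop the leading "1."
  esac
  printf '%s\n' "${v%%[._-]*}"   # keep everything before the first . _ or -
}

# Usage on a live system (skipped quietly if java is not installed).
if command -v java >/dev/null 2>&1; then
  raw="$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
  echo "Detected Java major version: $(java_major "$raw")"
fi
```

If the reported major version is neither 8 nor 11, check the documentation for your Spark release before continuing.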

Step 3. Download and Install Apache Spark.

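Download the Apache Spark release from the Apache archive (this tutorial uses version 3.0.0, pre-built for Hadoop 2.7), then extract it and move it to /opt/spark. Note that the closer.lua mirror link returns an HTML mirror-selection page rather than the archive itself, so we use the direct archive URL:

wget https://archive.apache.org/dist/spark/spark-3.0.0/spark-3.0.0-bin-hadoop2.7.tgz

tar xvzf spark-3.0.0-bin-hadoop2.7.tgz

sudo mv spark-3.0.0-bin-hadoop2.7/ /opt/spark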
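Because wget saves whatever the server returns, it can be worth confirming that the downloaded file really is a gzip-compressed archive before extracting it. The sketch below uses `gzip -t` for that; `is_gzip_archive` is a hypothetical helper name, and the file name is assumed to match the one used in this tutorial.

```shell
# is_gzip_archive: succeed only if the file exists and passes gzip's
# integrity test. Hypothetical helper for illustration.
is_gzip_archive() {
  [ -f "$1" ] && gzip -t < "$1" 2>/dev/null
}

# Usage: check the tarball in the current directory, if it is there.
archive="spark-3.0.0-bin-hadoop2.7.tgz"
if [ -f "$archive" ]; then
  if is_gzip_archive "$archive"; then
    echo "$archive looks like a valid gzip archive"
  else
    echo "$archive is not a gzip archive; download it again" >&2
  fi
fi
```

This only checks the compression layer. It does not replace verifying the checksum or signature published alongside the release.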

Next, configure the environment for Apache Spark. Open your .bashrc file in an editor:

nano ~/.bashrc

Add these lines to the end of the .bashrc file so that your PATH includes the Spark executables:

export SPARK_HOME=/opt/spark

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

Activate the changes:

source ~/.bashrc
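To confirm the new settings took effect in the current shell, you can check that both Spark directories appear in PATH. This is a small sketch under the assumption that Spark lives in /opt/spark as above; `path_contains` is a hypothetical helper name.

```shell
# path_contains: succeed if directory $1 is an entry of the
# colon-separated list $2. Hypothetical helper for illustration.
path_contains() {
  case ":$2:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Fall back to the tutorial's install location if SPARK_HOME is unset.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"
for dir in "$SPARK_HOME/bin" "$SPARK_HOME/sbin"; do
  if path_contains "$dir" "$PATH"; then
    echo "ok: $dir is on PATH"
  else
    echo "missing: $dir is not on PATH"
  fi
done
```

If either directory is reported as missing, re-check the lines added to .bashrc and run the source command again.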

Step 4. Start Standalone Spark Master Server.

Now that you have completed configuring your environment for Spark, you can start a master server:

start-master.sh

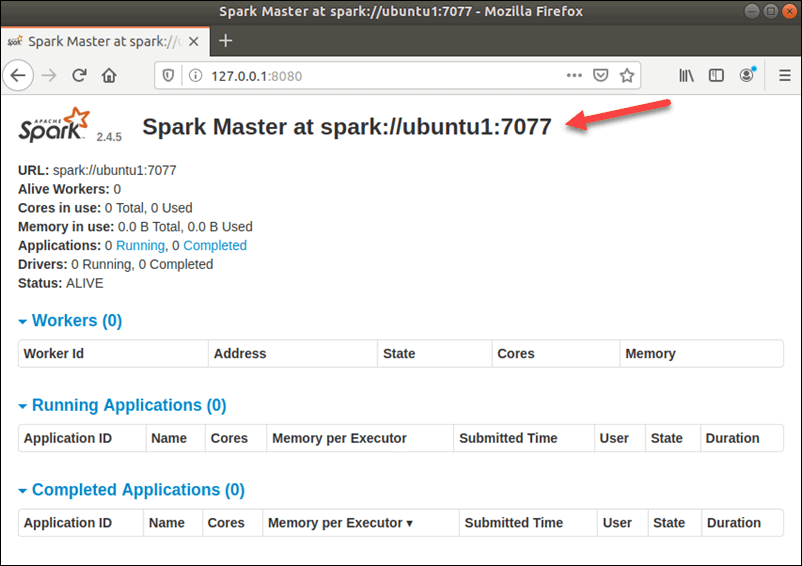

To view the Spark Web user interface, open a web browser and enter the localhost IP address on port 8080:

http://127.0.0.1:8080/

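In this single-server, standalone setup, we will start one slave server along with the master server. The start-slave.sh command is used to start the Spark Worker Process. It takes the master URL, which is shown at the top of the master’s Web UI. In this example the server’s hostname is ubuntu1, so replace it with your own: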

start-slave.sh spark://ubuntu1:7077

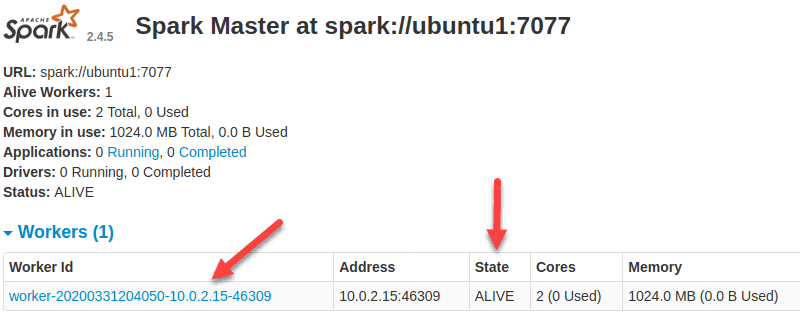
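The master URL follows the pattern spark://HOST:PORT, with 7077 as the port used in this tutorial. As a rough sketch, the URL can be assembled from the machine's hostname instead of being typed by hand; `spark_master_url` is a hypothetical helper name, and the assumption that the master registered under the output of `hostname` should be checked against the URL shown in the Web UI.

```shell
# spark_master_url: build a standalone master URL from a host and an
# optional port (defaults to 7077). Hypothetical helper for illustration.
spark_master_url() {
  printf 'spark://%s:%s\n' "$1" "${2:-7077}"
}

# Assumption: the master is running on this machine under its hostname.
host="$(hostname 2>/dev/null || echo localhost)"
master_url="$(spark_master_url "$host")"
echo "Worker would connect to: $master_url"

# On the server where the master is running, start the worker with:
# start-slave.sh "$master_url"
```

The start-slave.sh line is left commented out so the snippet can be run safely on a machine without Spark.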

Now that a worker is up and running, reload the Spark Master’s Web UI and you should see it in the list of workers.

With the master and worker running, test that the Spark shell works:

spark-shell

Congratulations! You have successfully installed Apache Spark. Thanks for using this tutorial to install Apache Spark on an Ubuntu 20.04 (Focal Fossa) system. For additional help or useful information, we recommend you check the official Apache Spark website.