How To Install Open WebUI on Debian 12

Open WebUI is an open-source web interface for interacting with large language models (LLMs), particularly those served by Ollama. It gives users an intuitive way to work with AI models without requiring extensive technical knowledge. This guide walks through installing Open WebUI on Debian 12, covering two installation methods (pip and Docker), configuration options, integration with Ollama, troubleshooting common issues, and securing your installation.

Understanding Open WebUI and Its Benefits

Open WebUI serves as a graphical user interface for interacting with various language models, particularly those available through Ollama. It provides a ChatGPT-like experience that runs entirely on your local machine. The interface allows for easy model switching and conversation management, and it supports multimodal models like LLaVA. By installing Open WebUI on your Debian 12 system, you gain access to an AI interaction platform that remains under your control and doesn’t require sharing your data with third-party services.

The platform’s open-source nature means it’s constantly evolving with community contributions, offering improved features and capabilities with each update. Whether you’re a developer looking to experiment with AI models, a researcher analyzing language processing, or simply an enthusiast interested in exploring AI capabilities, Open WebUI provides an accessible entry point into the world of large language models.

Prerequisites for Installation

Hardware Requirements

Before proceeding with the installation of Open WebUI on Debian 12, ensure your system meets the minimum hardware requirements. While the exact specifications may vary depending on the language models you intend to run, a baseline configuration includes:

- A modern multi-core CPU (4+ cores recommended for smooth operation)

- At least 8GB of RAM (16GB or more recommended for larger models)

- Sufficient storage space: minimum 10GB free space (more if you plan to download multiple language models)

- GPU acceleration (optional but recommended for better performance, especially with larger models)

For users planning to run resource-intensive models, a system with dedicated GPU resources will significantly improve performance. Open WebUI supports CUDA acceleration when properly configured with compatible Nvidia GPUs.
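As a rough pre-flight check, the baseline figures above can be compared against the current machine. The sketch below assumes a Linux system where `nproc`, `/proc/meminfo`, and `df` are available; the function names are illustrative, and the thresholds simply mirror the list above.

```shell
#!/bin/sh
# Pre-flight check against the baseline figures (4 cores, 8 GB RAM, 10 GB disk).
# Helper names are hypothetical; thresholds are copied from the list above.

cpu_cores() { nproc; }

# Total RAM in GiB, rounded to the nearest integer (MemTotal is reported in kB).
mem_total_gb() {
    awk '/^MemTotal:/ { printf "%.0f\n", $2 / 1024 / 1024 }' /proc/meminfo
}

# Free space in GiB (rounded down) on the filesystem holding the given path.
free_disk_gb() {
    df -Pk "${1:-/}" | awk 'NR == 2 { printf "%d\n", $4 / 1024 / 1024 }'
}

# usage: meets_minimum <actual> <required>
meets_minimum() { [ "$1" -ge "$2" ]; }

# usage: check <label> <actual> <required>
check() {
    if meets_minimum "$2" "$3"; then
        echo "OK    $1: $2 (minimum $3)"
    else
        echo "LOW   $1: $2 (minimum $3)"
    fi
}

check "CPU cores" "$(cpu_cores)" 4
check "RAM (GiB)" "$(mem_total_gb)" 8
check "Free disk (GiB)" "$(free_disk_gb "$HOME")" 10
```

A "LOW" line does not necessarily rule out an installation; smaller models may still run, only more slowly.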

Software Requirements

The software prerequisites for installing Open WebUI on Debian 12 include:

- Debian 12 (Bookworm) operating system

- Python 3.11 (specifically recommended for compatibility)

- Docker (if using the Docker installation method)

- pip (Python package manager)

- Git (for accessing repositories)

- Basic command-line knowledge

- sudo privileges on your Debian system

It’s worth noting that while other Python versions might work, Python 3.11 is specifically recommended by the Open WebUI developers to avoid compatibility issues. If you’re using Arch Linux or similar distributions that might ship with newer Python versions (like Python 3.13), you may encounter compatibility issues.
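To confirm which Python series is installed before going further, a small check along these lines may help. It is a sketch only: the function names are illustrative, and it tests for nothing beyond the 3.11 recommendation stated above. Debian 12's default `python3` package is in the 3.11 series, so the check would normally pass on a stock system.

```shell
#!/bin/sh
# Reduce a version string such as "Python 3.11.2" to its "major.minor" part.
python_minor() {
    printf '%s\n' "$1" | sed -E 's/^[^0-9]*([0-9]+\.[0-9]+).*/\1/'
}

# Succeeds only for the 3.11 series recommended above.
is_recommended_python() {
    [ "$(python_minor "$1")" = "3.11" ]
}

if command -v python3 >/dev/null 2>&1; then
    version="$(python3 --version 2>&1)"
    if is_recommended_python "$version"; then
        echo "$version matches the recommended 3.11 series"
    else
        echo "$version is not in the 3.11 series; consider the Docker method instead"
    fi
else
    echo "python3 not found; install it with: sudo apt install python3"
fi
```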

Preparing Your Debian 12 System

Before installation, ensure your Debian 12 system is updated with the latest packages and security updates. Open a terminal and run:

sudo apt update

sudo apt upgrade

Next, install the dependencies that will be required during the installation process:

sudo apt install curl wget git python3-pip python3-venv

For non-GNOME desktop environments, you may also need to install gnome-terminal. This requirement comes from Docker Desktop rather than from Open WebUI itself, so it can be skipped on a headless server or when you only use Docker Engine:

sudo apt install gnome-terminal

These preparatory steps ensure your system has the components needed to install and run Open WebUI.

Setting Up a Python Virtual Environment

Why Use a Virtual Environment

Using a Python virtual environment for installing Open WebUI offers several significant advantages. Virtual environments create isolated spaces where Python packages can be installed without interfering with your system’s global Python installation. This isolation prevents dependency conflicts, particularly important when different projects require different package versions.

For Open WebUI specifically, a virtual environment ensures that its dependencies don’t conflict with other Python applications on your system. It also makes updating or uninstalling Open WebUI cleaner, as all its components are contained within the virtual environment. Furthermore, if something goes wrong during installation or configuration, you can simply delete the virtual environment and start fresh without affecting your system’s Python installation.

Creating a Python Virtual Environment

To create a virtual environment for Open WebUI installation, follow these steps:

1. First, create a directory for your project:

mkdir -p ~/Projects/openwebui

cd ~/Projects/openwebui

2. Create a new Python virtual environment:

python3 -m venv webui-env

3. Activate the virtual environment:

source webui-env/bin/activate

After activation, your terminal prompt should change to indicate you’re now working within the virtual environment. This environment will contain all the necessary packages for Open WebUI while keeping them separate from your system’s global Python installation.

When you’re finished working with Open WebUI, you can deactivate the virtual environment by simply typing:

deactivate

This returns you to your system’s global Python environment. Remember to reactivate the virtual environment whenever you want to use or update Open WebUI.
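On Debian 12 the system Python is marked as externally managed (PEP 668), so `pip install` outside a virtual environment is normally refused. A small guard like the following can confirm that the environment is active before you install anything. This is a sketch with an illustrative function name; it relies on the `VIRTUAL_ENV` variable that the `activate` script exports.

```shell
#!/bin/sh
# Succeeds when a virtual environment is active in the current shell.
# The venv "activate" script exports VIRTUAL_ENV; "deactivate" unsets it.
in_venv() {
    [ -n "${VIRTUAL_ENV:-}" ]
}

if in_venv; then
    echo "Active virtual environment: $VIRTUAL_ENV"
else
    echo "No virtual environment active; run: source webui-env/bin/activate"
fi
```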

Installing Open WebUI

Method 1: Using Pip

Installing Open WebUI using pip is straightforward once you have your virtual environment set up. This method is particularly useful for users who prefer working directly with Python packages or need more control over the installation process.

With your virtual environment activated, install Open WebUI using pip:

pip install open-webui

This command downloads and installs Open WebUI and all its dependencies. Depending on your internet connection speed, this process might take several minutes to complete.

After installation is complete, you can start Open WebUI with the following command:

open-webui serve

This starts the Open WebUI server, which you can access by opening your web browser and navigating to:

http://localhost:8080

On your first visit, you’ll need to create an administrator account by providing a name, email, and password. This account will be used to manage your Open WebUI installation and access all its features.

The pip installation method gives you more control over where and how Open WebUI is installed. It’s particularly useful if you’re already familiar with Python development or prefer not to use Docker containers. However, it does require a bit more manual setup and management of dependencies.
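The server can take a while to become responsive after `open-webui serve` is launched, particularly on first start. When scripting the setup, a polling loop such as the one below can wait for the port to answer. This is a minimal sketch that assumes `curl` is installed (it is part of the dependencies installed earlier); the function name and the timeout values are illustrative.

```shell
#!/bin/sh
# Poll a URL until it answers or the timeout (in seconds) expires.
# usage: wait_for_url <url> [timeout_seconds]
wait_for_url() {
    url="$1"
    remaining="${2:-60}"
    while [ "$remaining" -gt 0 ]; do
        # -f: fail on HTTP errors, -s: quiet, -o: discard the response body
        if curl -fs -o /dev/null --max-time 2 "$url"; then
            return 0
        fi
        sleep 1
        remaining=$((remaining - 1))
    done
    return 1
}

# Example (not run automatically): start the server in the background, then wait.
#   open-webui serve &
#   wait_for_url http://localhost:8080 120 && echo "Open WebUI is up"
```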

Method 2: Using Docker

Installing Open WebUI using Docker provides a more containerized and isolated approach. This method is generally considered easier for beginners as it handles many of the dependency issues automatically and provides a more consistent environment.

Installing Docker on Debian 12

If you haven’t already installed Docker on your Debian 12 system, follow these steps:

1. Update your package index:

sudo apt update

2. Install packages to allow apt to use a repository over HTTPS:

sudo apt install apt-transport-https ca-certificates curl gnupg2 lsb-release software-properties-common

3. Add Docker’s official GPG key:

curl -fsSL https://download.docker.com/linux/debian/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

4. Set up the stable repository (the architecture is detected automatically, so the same line works on amd64 and arm64 systems):

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/debian $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

5. Update apt and install Docker:

sudo apt update

sudo apt install docker-ce docker-ce-cli containerd.io

6. Verify Docker installation:

sudo docker run hello-world

Alternatively, you can use the convenience script provided by Docker:

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

Running Open WebUI in Docker

Once Docker is installed, you can run Open WebUI using one of the following commands, depending on your setup:

For a standard setup with CPU only:

docker run -d -p 3000:8080 -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

For a setup with Ollama included:

docker run -d -p 3000:8080 -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

For GPU acceleration (if you have compatible Nvidia hardware and the NVIDIA Container Toolkit installed on the host):

docker run -d -p 3000:8080 --gpus=all -v ollama:/root/.ollama -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:ollama

After running one of these commands, Docker will download the necessary image and start the Open WebUI container. You can then access the interface by opening your web browser and navigating to:

http://localhost:3000

The Docker method is generally more straightforward and ensures that all dependencies are properly managed within the container. It also makes updating Open WebUI as simple as pulling the latest image and restarting the container.
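Updating a Docker-based install by pulling the latest image and recreating the container could look roughly like this. The sketch assumes the CPU-only `:main` image and the container and volume names used in the commands above; the `run` wrapper and the `DRY_RUN` variable are illustrative additions that let you preview the commands first.

```shell
#!/bin/sh
# Sketch of updating a Docker-based install. Set DRY_RUN=1 to print the
# commands instead of executing them.
IMAGE="ghcr.io/open-webui/open-webui:main"
CONTAINER="open-webui"

run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# The named volume "open-webui" is never removed here, so chats and
# settings stored in /app/backend/data survive the container swap.
update_open_webui() {
    run docker pull "$IMAGE" &&
    run docker stop "$CONTAINER" &&
    run docker rm "$CONTAINER" &&
    run docker run -d -p 3000:8080 -v open-webui:/app/backend/data \
        --name "$CONTAINER" --restart always "$IMAGE"
}

# Preview the commands without touching Docker:
(DRY_RUN=1; update_open_webui)
```

Running `update_open_webui` without `DRY_RUN` set would execute the four commands in order and stop at the first failure.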

Configuring Open WebUI

Initial Setup and Access

After successful installation, it’s time to configure Open WebUI for first use. Open your web browser and navigate to the appropriate address based on your installation method:

- For pip installation:

http://localhost:8080

- For Docker installation:

http://localhost:3000

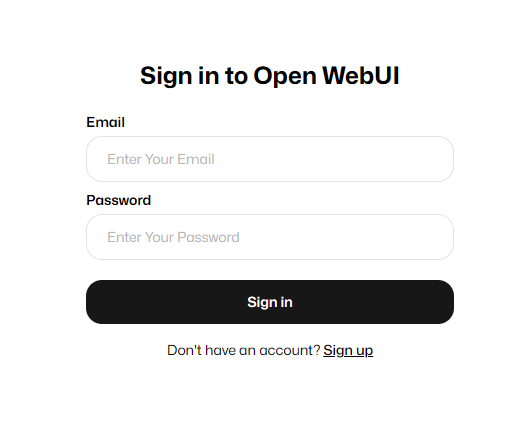

On your first visit, you’ll be presented with a setup page where you’ll need to create an administrator account. Fill in the required information, including your name, email address, and a secure password. This account will serve as the primary administrator for your Open WebUI installation.

Once you’ve created your administrator account and logged in, you’ll be presented with the main interface of Open WebUI. This interface resembles popular chat applications, with a sidebar for navigation and a main chat area where you’ll interact with language models.

Customizing Your Environment

After logging in, take some time to explore the settings and customization options available in Open WebUI. From the main interface, you can access the settings menu to configure various aspects of your installation:

- Interface Settings: Customize the appearance of the interface, including theme options (light/dark mode), chat behavior, and display preferences.

- Model Settings: Configure which models are available and set default models for different types of interactions.

- API Connections: If you plan to use external API services alongside your local models, you can configure these connections here.

- User Management: As an administrator, you can create additional user accounts if you plan to share your Open WebUI installation with others.

These configuration options allow you to tailor Open WebUI to your specific needs and preferences, creating a personalized AI interaction environment.

Integrating Ollama with Open WebUI

Understanding Ollama

Ollama is a tool designed to simplify running large language models locally on your machine. It handles the downloading, management, and execution of various open-source models like Llama, Mistral, and others. When integrated with Open WebUI, Ollama provides the backend model capabilities, while Open WebUI serves as the user-friendly interface for interacting with these models.

The combination of Ollama and Open WebUI creates a powerful, fully local AI system that doesn’t rely on external cloud services for processing. This ensures privacy and control over your data while still providing advanced AI capabilities.

Installing Ollama

If you haven’t installed Ollama or if you chose the non-Ollama Docker image for Open WebUI, you’ll need to install Ollama separately. Here’s how to install Ollama on Debian 12:

1. Download the Ollama installation script:

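curl -fsSL https://ollama.com/install.sh -o install-ollama.sh

2. Make the script executable:

chmod +x install-ollama.sh

3. Run the installation script:

sudo ./install-ollama.sh

4. After installation, start the Ollama service if it is not already running. On systems with systemd, the installer typically registers and starts an ollama service itself; in that case this step is unnecessary and the command will report that the address is already in use:

ollama serve

Ollama listens for requests on port 11434 by default.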

Configuring Open WebUI for Ollama

If you installed Open WebUI using the Docker method with the `:ollama` tag, Ollama is already included and properly configured. However, if you used the pip installation method or the standard Docker image, you’ll need to configure Open WebUI to connect to your Ollama installation.

For pip installations, you can set the Ollama base URL by creating or editing the `.env` file in your Open WebUI directory. Note that `>` overwrites an existing file; use `>>` to append to one:

echo "OLLAMA_BASE_URL=http://localhost:11434" > .env

For Docker installations without bundled Ollama, you can specify the Ollama URL when starting the container. On Linux, the `--add-host` flag is needed so that `host.docker.internal` resolves to the host machine:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://host.docker.internal:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

After configuring the connection to Ollama, restart Open WebUI for the changes to take effect. You should now be able to see and use any models you’ve downloaded with Ollama directly from the Open WebUI interface.

Adding and Using Models

With Ollama integrated, you can now add language models to use with Open WebUI. To download a model, you can use the Ollama command line:

ollama pull mistral

Replace “mistral” with the name of the model you wish to download. Common models include:

- llama3

- mistral

- gemma

- phi

- llava (for multimodal capabilities)

Once downloaded, these models will appear in the Open WebUI interface, allowing you to select different models for different conversations or tasks. Each model has its own strengths and characteristics, so you might find that certain models perform better for specific types of queries or tasks.
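If you want several models from the list above, a small loop saves repeating the command. The following is a sketch: the function name and the `DRY_RUN` switch are illustrative, and the model names are simply those mentioned above.

```shell
#!/bin/sh
# Pull a list of models one after another.
# Set DRY_RUN=1 to only print the commands that would be run.
pull_models() {
    for model in "$@"; do
        if [ -n "${DRY_RUN:-}" ]; then
            echo "ollama pull $model"
        else
            # Keep going if one model fails, but report it on stderr.
            ollama pull "$model" || echo "failed to pull $model" >&2
        fi
    done
}

# Example (not run automatically):
#   pull_models mistral llama3 llava
#   ollama list    # show the models now available locally
```

Model downloads are large, so it is worth checking free disk space before pulling more than one or two.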

Troubleshooting Common Issues

Connection Problems

One common issue users encounter is connection problems between Open WebUI and Ollama. If you find that Open WebUI isn’t connecting to Ollama properly, check the following:

1. Ensure Ollama is running: Verify that the Ollama service is active by running:

ps aux | grep ollama

2. Check port availability: Make sure port 11434 (Ollama’s default port) is not blocked by a firewall:

sudo ufw status

3. Verify URL configuration: Ensure the OLLAMA_BASE_URL is correctly set to point to your Ollama installation.

4. Network issues: If running Ollama on a different machine, ensure network connectivity between the Open WebUI server and the Ollama server.
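The checks above can be complemented by querying Ollama directly over HTTP. The sketch below requests Ollama's `/api/tags` endpoint, which lists locally available models, and assumes `curl` is installed; the function names are illustrative. It runs from the host, so a containerized Open WebUI may still see a different network path.

```shell
#!/bin/sh
# Build the URL of Ollama's model-listing endpoint from a base URL,
# tolerating a trailing slash in the configured value.
ollama_tags_url() {
    printf '%s/api/tags\n' "${1%/}"
}

# Succeeds if Ollama answers at the given base URL within 3 seconds.
ollama_reachable() {
    curl -fs -o /dev/null --max-time 3 "$(ollama_tags_url "$1")"
}

base_url="${OLLAMA_BASE_URL:-http://localhost:11434}"
if ollama_reachable "$base_url"; then
    echo "Ollama is reachable at $base_url"
else
    echo "Ollama is NOT reachable at $base_url"
fi
```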

Blank Screen or Login Issues

Some users report seeing only a blank screen with the Open WebUI logo after installation. This issue often occurs when Open WebUI is configured to connect to a remote Ollama server that is offline or unreachable. To resolve this:

- Ensure the remote server is online and accessible

- Check network connectivity between the servers

- Verify that Ollama is running on the remote server

- Consider using a local Ollama installation instead of a remote one if connectivity is unreliable

Docker-Related Issues

If you’re using the Docker installation method and encountering issues:

1. Container won’t start: Check if there are port conflicts by running:

sudo ss -tuln | grep 3000

(The ss tool ships with Debian 12; the older netstat command requires the net-tools package.)

2. Volume mounting issues: Ensure the Docker volumes are properly created:

docker volume ls | grep open-webui

3. Permission problems: Make sure your user has the necessary permissions to use Docker:

sudo usermod -aG docker $USER

Remember to log out and back in for this change to take effect.

Securing Open WebUI

Basic Security Considerations

Since Open WebUI provides access to powerful language models that could potentially generate sensitive content, it’s important to implement proper security measures, especially if you’re exposing the service beyond your local network:

- Use strong passwords: Ensure your administrator account uses a strong, unique password.

- Implement user accounts: Create individual accounts for different users rather than sharing a single account.

- Regular updates: Keep both Open WebUI and Ollama updated to the latest versions to benefit from security patches and improvements.

- Run as non-root: When possible, run both Open WebUI and Ollama as non-root users to limit potential security impacts.

Setting Up HTTPS

For enhanced security, especially when accessing Open WebUI over the internet, consider setting up HTTPS. Here’s a simplified approach using a self-signed certificate:

1. Install OpenSSL if not already installed:

sudo apt install openssl

2. Generate a self-signed certificate:

sudo openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/ssl/private/nginx-selfsigned.key -out /etc/ssl/certs/nginx-selfsigned.crt

3. Set up Nginx as a reverse proxy with HTTPS support:

- Install Nginx:

sudo apt install nginx

- Configure Nginx to proxy requests to Open WebUI while handling SSL/TLS encryption

- Restart Nginx to apply the changes

This setup ensures that all traffic between your browser and Open WebUI is encrypted, protecting sensitive information and model interactions.
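The Nginx configuration step is not spelled out above, so here is one possible sketch. It prints a minimal server block that uses the self-signed certificate paths from step 2 and forwards to Open WebUI on the local port. The site file name `open-webui`, the catch-all `server_name _`, and the WebSocket upgrade headers are assumptions rather than settings taken from this guide; adjust them to your setup.

```shell
#!/bin/sh
# Print an Nginx server block that terminates TLS and forwards to Open WebUI.
# usage: render_nginx_conf <upstream_port>   (3000 for Docker, 8080 for pip)
render_nginx_conf() {
    port="${1:-3000}"
    cat <<EOF
server {
    listen 443 ssl;
    server_name _;

    ssl_certificate     /etc/ssl/certs/nginx-selfsigned.crt;
    ssl_certificate_key /etc/ssl/private/nginx-selfsigned.key;

    location / {
        proxy_pass http://127.0.0.1:${port};
        proxy_http_version 1.1;
        proxy_set_header Host \$host;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto \$scheme;
        # WebSocket upgrade headers (assumed useful; harmless if unused)
        proxy_set_header Upgrade \$http_upgrade;
        proxy_set_header Connection "upgrade";
    }
}
EOF
}

# Example (not run automatically); the file name "open-webui" is arbitrary:
#   render_nginx_conf 3000 | sudo tee /etc/nginx/sites-available/open-webui
#   sudo ln -s /etc/nginx/sites-available/open-webui /etc/nginx/sites-enabled/
#   sudo nginx -t && sudo systemctl restart nginx
```

Because the certificate is self-signed, browsers will show a warning on first visit; a certificate from a recognized authority avoids this for public-facing deployments.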

Firewall Configuration

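Configure your firewall to allow only necessary connections to Open WebUI. UFW (Uncomplicated Firewall) is not installed by default on Debian 12; if it is missing, install it first with sudo apt install ufw. For a basic UFW setup: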

1. Check the current UFW status:

sudo ufw status

2. Allow SSH connections (important to maintain access to your server):

sudo ufw allow ssh

3. Allow HTTPS connections if you’re using a reverse proxy:

sudo ufw allow https

4. If not using a reverse proxy, allow the specific port Open WebUI is running on:

sudo ufw allow 3000/tcp # For Docker installations

or

sudo ufw allow 8080/tcp # For pip installations

5. Enable the firewall:

sudo ufw enable

This configuration ensures that only authorized ports are accessible, reducing the attack surface of your server.

Congratulations! You have successfully installed Open WebUI on your Debian 12 system. For additional help or useful information, we recommend you check the Open WebUI website.