How To Install Stable Diffusion on Linux Mint 22

Linux Mint is a stable, user-friendly platform for running AI applications such as Stable Diffusion. This guide shows how to install and configure Stable Diffusion on Linux Mint 22 so that you can generate AI images locally on your own machine. It covers two installation methods, GPU setup, model management, and troubleshooting.

Understanding Stable Diffusion and System Requirements

Stable Diffusion is an open-source generative AI model that turns text descriptions into detailed images. It works by iterative denoising: starting from random noise, the model removes noise step by step, guided by your prompt, until a coherent image emerges.

Hardware Requirements:

- CPU: Multi-core processor (Intel or AMD)

- RAM: Minimum 8GB, recommended 16GB for optimal performance

- Storage: At least 10GB free space for models and generated content

- GPU: NVIDIA GPU with CUDA support strongly recommended

While Stable Diffusion can run on CPU-only setups, a compatible NVIDIA GPU dramatically improves generation speed. More VRAM lets you create larger images and bigger batches; the speed itself depends mainly on the GPU's compute power.

Software Prerequisites:

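- Python 3.8 or higher (the Automatic1111 WebUI README recommends Python 3.10, while Linux Mint 22 ships Python 3.12, so watch for dependency errors on the first run)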

- Git for repository management

- pip (Python package installer)

- Python virtual environment tools
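The prerequisites above can be checked from a terminal before you start. The following is a minimal sketch, not part of any official installer: the function names version_ge and check_prereqs are made up for this example, the version comparison assumes GNU sort with the -V option, and the ensurepip import is only a heuristic for whether python3-venv is installed on Debian-based systems.

```shell
# version_ge A B: succeeds when version A is greater than or equal to version B.
# Assumes GNU sort, which supports -V (version sort).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_prereqs: report missing tools; returns non-zero if anything is missing.
check_prereqs() {
    local missing cmd py_version
    missing=0
    for cmd in python3 pip3 git; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            missing=1
        fi
    done
    if command -v python3 >/dev/null 2>&1; then
        py_version=$(python3 -c 'import platform; print(platform.python_version())')
        if version_ge "$py_version" 3.8; then
            echo "python3 $py_version meets the 3.8 minimum"
        else
            echo "python3 $py_version is older than 3.8"
            missing=1
        fi
        # Heuristic: on Debian-based systems ensurepip ships with python3-venv.
        python3 -c 'import ensurepip' 2>/dev/null || {
            echo "missing: python3-venv (ensurepip not importable)"
            missing=1
        }
    fi
    return "$missing"
}

check_prereqs || echo "Install the missing items before continuing."
```

Anything reported as missing is installed by the apt command in the next section.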

Preparing Your Linux Mint System

The foundation of a successful Stable Diffusion installation is a properly prepared system. Let’s start with updating your Linux Mint installation.

Updating Your System

Open your terminal and run:

sudo apt update

sudo apt upgrade -y

This ensures your system packages are up-to-date before installing new software. Taking this step helps avoid compatibility issues during installation.

Installing Essential Dependencies

Next, install the required dependencies with:

sudo apt install python3 python3-pip git python3-venv curl -y

This command installs Python, pip, Git, the Python virtual environment module, and curl. Verify your installations with:

python3 --version

pip3 --version

git --version

Setting Up GPU Drivers (For NVIDIA Users)

If you have an NVIDIA GPU, proper drivers are essential for CUDA support:

- Open the Driver Manager from the system menu

- Select the recommended proprietary NVIDIA driver

- Apply changes and restart your system

- Verify installation with:

nvidia-smi

The output should display your GPU information and driver version, confirming proper installation.
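For a more compact check than the full nvidia-smi table, the tool can be asked for specific fields. The sketch below is illustrative: summarize_gpu_line is a hypothetical helper name, and the script assumes that the --query-gpu fields name, memory.total, and driver_version are supported by your driver version. If nvidia-smi is not installed, it prints a hint instead.

```shell
# summarize_gpu_line "<name>, <memory in MiB>, <driver version>"
# Formats one CSV line as produced by nvidia-smi --format=csv,noheader,nounits.
summarize_gpu_line() {
    local name rest mem_mib driver
    name=${1%%,*}          # text before the first comma
    rest=${1#*,}           # everything after the first comma
    mem_mib=${rest%%,*}
    driver=${rest#*,}
    mem_mib=${mem_mib# }   # drop the space that follows each comma
    driver=${driver# }
    printf 'GPU: %s | VRAM: %s MiB | driver: %s\n' "$name" "$mem_mib" "$driver"
}

if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,memory.total,driver_version \
               --format=csv,noheader,nounits |
    while IFS= read -r line; do
        summarize_gpu_line "$line"
    done
else
    echo "nvidia-smi not found; is the proprietary NVIDIA driver installed?" >&2
fi
```

The VRAM figure is worth noting down, since it determines how large an image or batch your GPU can handle.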

Method 1: Installing with Automatic1111 WebUI

The most popular and user-friendly way to install Stable Diffusion is through the Automatic1111 WebUI, which provides a comprehensive browser interface for generating and manipulating images.

Downloading the Repository

Clone the WebUI repository:

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

cd stable-diffusion-webui

This creates a local copy of the repository on your machine.

Running the Installation Script

Execute the included installation script:

bash webui.sh

During the first run, this script:

- Creates a Python virtual environment

- Installs all required dependencies

- Downloads the necessary model files (if you select default options)

- Launches the web server

If you encounter the error “Cannot activate python venv, aborting…”, ensure you’ve installed python3-venv properly, or try recreating the virtual environment manually:

python3 -m venv venv

source venv/bin/activate

Then run the installation script again.

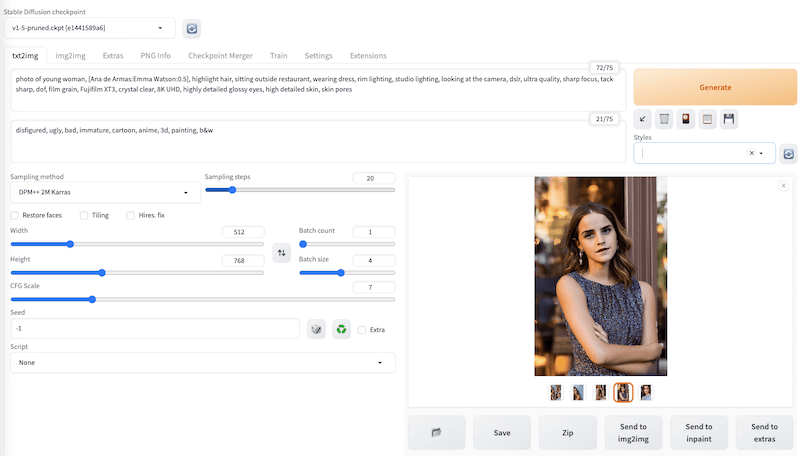

First-time Setup

Once installation completes, the script starts a local web server and prints its address in the terminal (typically http://127.0.0.1:7860). If your browser does not open automatically, navigate to that address manually.

The interface includes several tabs:

- Text-to-Image (labeled txt2img): Generate images from text descriptions

- Image-to-Image (labeled img2img): Transform existing images

- Extras: Additional tools and utilities

- Settings: Configure parameters and options

Method 2: Manual Installation Process

For more control over the installation process, you can install Stable Diffusion manually.

Cloning the Official Repository

Start by cloning the official Stable Diffusion repository:

git clone https://github.com/CompVis/stable-diffusion.git

cd stable-diffusion

Creating a Python Virtual Environment

Create and activate a dedicated virtual environment:

python3 -m venv venv

source venv/bin/activate

You should see “(venv)” at the beginning of your terminal prompt, indicating the environment is active.

Installing Dependencies via pip

Install required Python packages:

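pip install -r requirements.txt

This installs the dependencies listed in requirements.txt, such as PyTorch and transformers. If your checkout has no requirements.txt, note that the CompVis repository describes its dependencies in environment.yaml, which is written for conda; install the packages listed there instead.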

Setting Up CUDA Support for GPU Acceleration

For NVIDIA GPU users, enabling CUDA support significantly speeds up image generation.

CUDA Toolkit Installation

Install the CUDA toolkit compatible with Linux Mint 22:

sudo apt install nvidia-cuda-toolkit -y

Verify installation with:

nvcc --version

Configuring PyTorch with CUDA

Ensure PyTorch is installed with CUDA support:

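pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu117

The cu117 suffix selects wheels built for CUDA 11.7. Substitute the tag that matches your driver and Python version, as listed on the PyTorch installation page.

Test if PyTorch recognizes your GPU: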

python3 -c "import torch; print(torch.cuda.is_available())"

If this returns “True”, PyTorch is correctly configured to use your GPU.
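When the one-line check prints False, a slightly longer report can help narrow down why. The following sketch wraps a short Python snippet in a shell function; report_torch_cuda is a hypothetical name chosen for this example. It should be run with the virtual environment active, and it only prints a hint if PyTorch is not installed there.

```shell
# report_torch_cuda: print PyTorch's view of the GPU, or a hint if torch is missing.
report_torch_cuda() {
    if ! command -v python3 >/dev/null 2>&1; then
        echo "python3 not found"
        return 1
    fi
    python3 - <<'PY'
try:
    import torch
except ImportError:
    print("torch is not installed in this environment")
    raise SystemExit(1)

print("torch version:", torch.__version__)
print("built for CUDA:", torch.version.cuda)  # None on CPU-only builds
if torch.cuda.is_available():
    print("device:", torch.cuda.get_device_name(0))
else:
    print("CUDA is not available to PyTorch")
PY
}

report_torch_cuda || echo "Revisit the installation steps above."
```

A "built for CUDA" value of None indicates a CPU-only PyTorch build, in which case reinstalling from a CUDA wheel index is the likely fix.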

Managing Models and Weights

Understanding Model Options

Stable Diffusion comes in various versions with different capabilities:

- SD 1.5: The classic model, well-balanced between quality and resource usage

- SD 2.x: Later releases that use a different text encoder and add 768×768 variants

- SD XL: Larger models with enhanced capabilities but higher resource requirements

- Specialized models: Fine-tuned for specific styles or domains

Downloading Pre-trained Models

For the WebUI installation, models are typically stored in the models/Stable-diffusion directory. The installation script may download a basic model automatically, but you can add more from:

- Hugging Face (https://huggingface.co/) – requires creating an account

- Civitai (https://civitai.com/) – community models and fine-tunes

Place downloaded model files in the appropriate directory within your installation folder.
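Moving a downloaded checkpoint into place can be sketched as a small helper. This is an illustration under stated assumptions: install_model is a hypothetical function name, the .safetensors and .ckpt extensions are the common checkpoint formats, and the target is the models/Stable-diffusion directory mentioned above.

```shell
# install_model <model-file> <webui-dir>
# Moves a checkpoint file into <webui-dir>/models/Stable-diffusion.
install_model() {
    local src webui_dir dest_dir
    src=$1
    webui_dir=$2
    dest_dir="$webui_dir/models/Stable-diffusion"

    # Accept only the usual checkpoint extensions.
    case "$src" in
        *.safetensors|*.ckpt) ;;
        *) echo "Unexpected file extension: $src" >&2; return 1 ;;
    esac
    [ -f "$src" ] || { echo "No such file: $src" >&2; return 1; }

    mkdir -p "$dest_dir"
    mv -- "$src" "$dest_dir/"
    echo "Installed $(basename "$src") into $dest_dir"
}
```

A call might look like install_model ~/Downloads/example-model.safetensors ~/stable-diffusion-webui, where both paths are placeholders for your own download and installation folder. New models typically appear in the WebUI's checkpoint dropdown after a refresh.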

Running Your First Generation

Basic Usage in WebUI

Using the Automatic1111 WebUI:

- Navigate to the Text-to-Image tab

- Enter a prompt (e.g., “A fantasy landscape with floating islands and waterfalls”)

- Adjust settings like width, height, and sampling steps

- Click “Generate”

Experiment with different prompts and settings to see how they affect the output. The quality of results depends significantly on your prompt crafting skills.

Command Line Generation (Manual Installation)

If you’ve done a manual installation, you can generate images using:

python scripts/txt2img.py --prompt "A fantasy landscape" --plms

Key parameters include:

- --prompt: Text description of the desired image

- --n_samples: Number of images to generate per batch

- --n_iter: Number of batches to run, so the total is n_samples × n_iter images

- --plms: Uses the PLMS sampler
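To show how these parameters combine, here is a hedged sketch of a small wrapper that runs the same prompt with several seeds. The wrapper name txt2img and the DRY_RUN switch are inventions for this example; the --H, --W, and --seed flags are options of the CompVis txt2img.py script that the list above does not cover. Note that the script also needs a model checkpoint (set with --ckpt), which the manual steps in this guide do not download.

```shell
# Run from the stable-diffusion checkout with the virtual environment active.
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0 to run it.
DRY_RUN=${DRY_RUN:-1}

# txt2img <prompt> <seed>
txt2img() {
    # Replace the positional parameters with the full command line.
    set -- python scripts/txt2img.py --prompt "$1" --plms \
        --n_samples 2 --n_iter 1 --H 512 --W 512 --seed "$2"
    if [ "$DRY_RUN" = 1 ]; then
        printf '%s ' "$@"
        printf '\n'
    else
        "$@"
    fi
}

# Same prompt, three seeds: 2 samples x 1 iteration per run = 6 images in total.
for seed in 1 2 3; do
    txt2img "A fantasy landscape" "$seed"
done
```

Fixing the seed makes a result reproducible, while varying it explores different compositions for the same prompt.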

Web UI Configuration and Optimization

Advanced Launch Options

You can customize the WebUI startup with various options:

bash webui.sh --listen --xformers

Common options include:

- --listen: Makes the WebUI accessible from other devices on your network

- --xformers: Enables memory-efficient attention for better performance

- --medvram or --lowvram: Reduces VRAM usage for computers with limited resources
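Rather than typing these options on every launch, they can be stored in webui-user.sh, which webui.sh reads for the COMMANDLINE_ARGS variable. The sketch below also picks a VRAM-saving flag from the amount of GPU memory. The helper suggest_vram_flag and its 4 GiB and 8 GiB thresholds are illustrative assumptions, not official limits, so adjust them to what works on your hardware.

```shell
# suggest_vram_flag <total VRAM in MiB>
# Thresholds are illustrative assumptions, not official recommendations.
suggest_vram_flag() {
    local vram_mib
    vram_mib=$1
    if [ "$vram_mib" -lt 4096 ]; then
        echo "--lowvram"
    elif [ "$vram_mib" -lt 8192 ]; then
        echo "--medvram"
    else
        echo ""   # enough memory: no VRAM-saving flag
    fi
}

# Lines like the following would go into webui-user.sh.
vram_flag=$(suggest_vram_flag 6144)   # assumed example: a GPU with 6 GiB of VRAM
export COMMANDLINE_ARGS="--xformers $vram_flag"
echo "COMMANDLINE_ARGS=$COMMANDLINE_ARGS"
```

With the variable set in webui-user.sh, a plain bash webui.sh picks up the same options on every start.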

Adding Extensions and Plugins

The WebUI supports numerous extensions that enhance functionality:

- Navigate to the “Extensions” tab

- Select “Available” to browse available extensions

- Install desired extensions with a simple click

Popular extensions include ControlNet for precise image control, additional networks for LoRA support, and various upscalers.

Troubleshooting Common Issues

Dependency Errors

If you encounter dependency-related errors:

- Ensure your system is fully updated

- Reinstall problematic packages

- Check for version conflicts between packages

CUDA and GPU Problems

For GPU-related issues:

- Verify NVIDIA drivers are properly installed

- Check CUDA toolkit compatibility with PyTorch

- For “out of memory” errors, reduce the image size or batch size, or launch with --medvram or --lowvram

Virtual Environment Problems

If you see “Cannot activate python venv, aborting…”:

- Ensure python3-venv is installed

- Delete the existing venv directory and recreate it

- Fix file ownership with:

chown -R $USER:$USER /path/to/stable-diffusion-webui

Updates and Maintenance

Keeping Your Installation Current

To update the WebUI:

cd stable-diffusion-webui

git pull

bash webui.sh

Regular updates ensure you benefit from new features and bug fixes.

Performance Optimization with zswap

To improve performance, especially on systems with limited RAM, consider enabling zswap – a Linux kernel feature that provides a compressed RAM cache for swap pages:

- Edit the GRUB configuration file (/etc/default/grub)

- Add zswap parameters, for example zswap.enabled=1, to the kernel command line in GRUB_CMDLINE_LINUX_DEFAULT

- Update GRUB with sudo update-grub and reboot

This may improve responsiveness when memory-intensive applications like Stable Diffusion push the system into swap.
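The GRUB edit described above can be sketched as a small helper that appends the parameter only if it is not already there. The function name add_zswap is hypothetical, and the example deliberately works on a scratch copy rather than on the real configuration file.

```shell
# add_zswap <grub-file>
# Appends zswap.enabled=1 to GRUB_CMDLINE_LINUX_DEFAULT unless a zswap.enabled
# setting is already present. sed keeps the original as <grub-file>.bak.
add_zswap() {
    local grub_file
    grub_file=$1
    if grep -q 'zswap\.enabled=' "$grub_file"; then
        echo "zswap parameter already present in $grub_file"
        return 0
    fi
    sed -i.bak 's/^\(GRUB_CMDLINE_LINUX_DEFAULT="[^"]*\)"/\1 zswap.enabled=1"/' "$grub_file"
}

# Demonstration on a scratch file with a typical default line.
demo=$(mktemp)
printf 'GRUB_CMDLINE_LINUX_DEFAULT="quiet splash"\n' > "$demo"
add_zswap "$demo"
cat "$demo"   # prints: GRUB_CMDLINE_LINUX_DEFAULT="quiet splash zswap.enabled=1"
```

On a real system the target would be /etc/default/grub, edited as root and followed by sudo update-grub and a reboot. Afterwards, cat /sys/module/zswap/parameters/enabled should report Y if the setting took effect.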

Congratulations! You have successfully installed Stable Diffusion on Linux Mint 22. For additional help or useful information, we recommend you check the official Stable Diffusion website.