How To Install Uptime Kuma on Manjaro

Modern website and service monitoring has become essential for maintaining reliable digital infrastructure. Uptime Kuma stands out as a powerful, open-source monitoring solution that delivers comprehensive uptime tracking without the recurring costs of commercial alternatives.

This comprehensive guide walks you through installing Uptime Kuma on Manjaro Linux, covering multiple installation methods to suit different deployment scenarios. Whether you’re a system administrator managing critical services or a developer monitoring personal projects, you’ll discover the most effective approach for your specific needs.

Manjaro’s Arch-based foundation provides excellent compatibility with modern development tools, making it an ideal platform for self-hosted monitoring solutions. The rolling release model ensures access to the latest packages while maintaining system stability through careful testing.

Understanding Uptime Kuma Features and Capabilities

Core Monitoring Features

Uptime Kuma excels at monitoring diverse infrastructure components through its extensive protocol support. The platform monitors HTTP and HTTPS websites with advanced keyword checking capabilities, ensuring your content remains accessible and displays correctly.

TCP port monitoring provides deep insights into service availability, while ICMP ping tests verify basic network connectivity. DNS record monitoring tracks critical domain configurations, preventing service disruptions from DNS changes.

SSL certificate monitoring alerts you before expiration dates, eliminating surprise certificate failures. Docker container health checks integrate seamlessly with containerized environments, while database connectivity monitoring ensures data layer reliability.
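The expiry warning boils down to date arithmetic, which the following minimal sketch reproduces outside Uptime Kuma. It assumes GNU date and the openssl command-line tool; days_between and cert_days_left are hypothetical helper names and are not part of Uptime Kuma.

```shell
#!/bin/sh
# days_between START END: whole days from START to END (GNU date syntax assumed).
days_between() {
    start_s=$(date -u -d "$1" +%s) || return 1
    end_s=$(date -u -d "$2" +%s) || return 1
    echo $(( (end_s - start_s) / 86400 ))
}

# cert_days_left HOST: days until the certificate served on HOST:443 expires.
# Needs network access and openssl; it is only defined here, not called.
cert_days_left() {
    not_after=$(openssl s_client -connect "$1:443" -servername "$1" </dev/null 2>/dev/null \
        | openssl x509 -noout -enddate | cut -d= -f2)
    # An empty value means the certificate could not be read.
    [ -n "$not_after" ] || return 1
    days_between "$(date -u +%Y-%m-%d)" "$not_after"
}
```

Calling cert_days_left example.com prints the remaining days, which a cron job could compare against a threshold of your choosing.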

Steam game server monitoring caters to gaming communities, tracking server performance and player capacity. These diverse monitoring capabilities make Uptime Kuma suitable for everything from personal websites to complex enterprise infrastructures.

Dashboard and User Interface

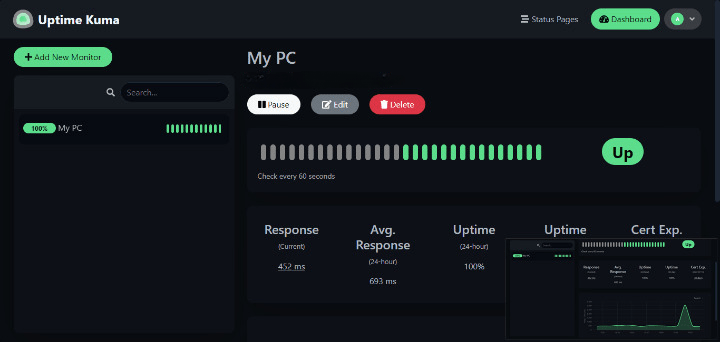

The modern, reactive web interface provides real-time status visualization with intuitive color coding and responsive design. Status dashboards display uptime percentages, response times, and service availability at a glance.

Historical response time charts reveal performance trends over configurable time periods. SSL certificate expiration warnings provide advance notice of upcoming renewals, while multi-language support accommodates international teams.

Two-factor authentication strengthens security without compromising usability. The clean interface scales effectively from single-service monitoring to complex multi-service deployments.

Notification and Alert System

Uptime Kuma ships with a large set of built-in notification providers (the project README lists more than 90) and can also hand alerts to the Apprise library, so alerts reach you through your preferred communication channels. Popular integrations include Telegram, Slack, Discord, email SMTP, webhook endpoints, and mobile push notifications.

Custom alert rules allow fine-tuned notification timing, preventing alert fatigue while ensuring critical issues receive immediate attention. Notification scheduling accommodates different time zones and maintenance windows.

Public status pages provide stakeholder communication without requiring separate status page services. These pages display real-time service status with customizable branding and messaging.

System Requirements and Prerequisites

Hardware Requirements

Minimum system specifications include 1 vCPU, 1GB RAM, and 20GB storage for basic monitoring deployments. These requirements support monitoring up to 50 services with standard check intervals.

Recommended specifications feature 2 vCPU, 2GB RAM, and faster storage for improved performance. Larger deployments benefit from additional memory for database operations and concurrent monitoring processes.

Storage requirements scale with monitoring frequency and log retention policies. High-frequency monitoring generates more data, requiring additional disk space for historical records and performance metrics.

Performance scaling depends on the number of monitoring probes and check intervals. Each monitor consumes CPU cycles and memory, with network-intensive checks requiring additional bandwidth.
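A rough way to reason about this scaling is to estimate how many checks run per minute and how many results accumulate over the retention period. The sketch below is back-of-the-envelope arithmetic only. It assumes one stored result per check and an interval that divides evenly into a day, and both function names are hypothetical.

```shell
#!/bin/sh
# checks_per_minute MONITORS INTERVAL_SECONDS
# Average number of checks started each minute.
checks_per_minute() {
    echo $(( $1 * 60 / $2 ))
}

# heartbeats_stored MONITORS INTERVAL_SECONDS RETENTION_DAYS
# Approximate number of check results kept, assuming one stored row per check.
heartbeats_stored() {
    echo $(( $1 * (86400 / $2) * $3 ))
}
```

For example, 50 monitors checked every 60 seconds produce 50 checks per minute, and keeping their results for 180 days yields roughly 13 million rows.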

Software Dependencies

Manjaro Linux compatibility stems from its Arch-based foundation, ensuring access to current packages and development tools. The rolling release model provides timely security updates and feature enhancements.

Node.js version support encompasses versions 14, 16, 18, and 20.4, with version 18 recommended for optimal performance and security. npm version 9 or higher ensures compatibility with modern package management features.
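Because Manjaro is a rolling release, the packaged Node.js may be newer than the versions listed above. The following sketch checks a version string against that list. It treats "20.4" as "20.4 or any later 20.x release", which is an assumption, and node_version_supported is a hypothetical helper name.

```shell
#!/bin/sh
# node_version_supported VERSION
# Succeeds for Node.js 14, 16, 18, or 20.4 and later 20.x (assumed reading of the list).
node_version_supported() {
    v=${1#v}            # strip the leading "v" printed by `node --version`
    major=${v%%.*}      # text before the first dot
    rest=${v#*.}        # text after the first dot
    minor=${rest%%.*}   # text between the first and second dot
    case "$major" in
        14|16|18) return 0 ;;
        20) [ "${minor:-0}" -ge 4 ] ;;
        *) return 1 ;;
    esac
}

# Example (requires node on the PATH):
#   node_version_supported "$(node --version)" || echo "Unsupported Node.js version"
```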

Git facilitates repository cloning and version control operations. Docker and Docker Compose enable containerized deployments with simplified dependency management and scalability.

pm2 process manager provides production-grade process management for native installations, offering automatic restarts, log management, and performance monitoring.

Optional reverse proxy configuration through Nginx or Apache enables SSL termination, load balancing, and enhanced security for production deployments.

Installation Method 1: Docker Installation (Recommended)

Installing Docker on Manjaro

Docker installation on Manjaro begins with updating the package database to ensure access to the latest versions:

sudo pacman -Syu

Install Docker and Docker Compose through the official repositories:

sudo pacman -S docker docker-compose

Enable the Docker service for automatic startup:

sudo systemctl enable docker
sudo systemctl start docker

Add your user account to the docker group to avoid requiring sudo for Docker commands:

sudo usermod -aG docker $USER

Log out and back in to activate group membership changes. Verify Docker installation:

docker --version
docker-compose --version

Test Docker functionality with a simple container:

docker run hello-world

Docker Run Method

The single-command Docker installation provides the fastest deployment path for testing and development environments:

docker run -d --restart=unless-stopped -p 3001:3001 -v uptime-kuma:/app/data --name uptime-kuma louislam/uptime-kuma:1

This command creates a persistent volume named uptime-kuma for data storage, maps port 3001 to the host system, and configures an automatic restart policy.

Volume persistence ensures monitoring data survives container restarts and updates. The named volume approach simplifies backup procedures and data migration between hosts.

Port configuration uses the default port 3001, which can be modified by changing the first port number in the -p parameter. For example, -p 8080:3001 exposes the service on port 8080.
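The port mapping described above can be parameterized. This small sketch only prints the docker run command for a chosen host port, so it can be reviewed before running it. kuma_run_command is a hypothetical helper name, and the container-side port stays at 3001.

```shell
#!/bin/sh
# kuma_run_command [HOST_PORT]
# Prints the docker run command with HOST_PORT mapped to container port 3001.
kuma_run_command() {
    host_port=${1:-3001}
    # Reject anything that is not a plain number in the valid port range.
    case "$host_port" in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$host_port" -ge 1 ] && [ "$host_port" -le 65535 ] || return 1
    echo "docker run -d --restart=unless-stopped -p ${host_port}:3001 -v uptime-kuma:/app/data --name uptime-kuma louislam/uptime-kuma:1"
}
```

Running kuma_run_command 8080 prints the command with -p 8080:3001, and an invalid port produces no output and a non-zero exit status.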

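Two operational points are worth noting. Uptime Kuma’s SQLite database depends on file system locking, so keep the data volume on local storage rather than on a network file system such as NFS. The unless-stopped restart policy brings the service back after system reboots while still honoring manual stops.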

Monitor container status and logs:

docker ps
docker logs uptime-kuma

Docker Compose Method

Docker Compose deployment offers enhanced configuration management and service orchestration capabilities. Create a dedicated directory for the deployment:

mkdir ~/uptime-kuma
cd ~/uptime-kuma

Create a docker-compose.yml file with the following configuration:

version: '3.3'
services:
  uptime-kuma:
    image: louislam/uptime-kuma:1
    container_name: uptime-kuma
    volumes:
      - ./uptime-kuma-data:/app/data
    ports:
      - 3001:3001
    restart: unless-stopped
    security_opt:
      - no-new-privileges:true
    environment:
      - UPTIME_KUMA_DISABLE_FRAME_SAMEORIGIN=0

The service configuration includes the no-new-privileges security option and an environment variable that controls frame embedding.

Volume mapping uses a local directory for easier backup management and direct file access when needed.

Network configuration can be extended with custom networks for complex deployments involving multiple services.

Start the services:

docker-compose up -d

Manage the containers with Docker Compose commands:

# View service status
docker-compose ps

# View logs
docker-compose logs -f

# Stop services
docker-compose down

# Update to latest version
docker-compose pull
docker-compose up -d

Compared with a single docker run command, Docker Compose offers simpler configuration management, easier service updates, and better integration with multi-service deployments.

Installation Method 2: Native Installation

Installing Dependencies on Manjaro

Native installation provides maximum performance and direct system integration. Begin by installing Node.js and npm:

sudo pacman -S nodejs npm git base-devel

Verify Node.js version compatibility:

node --version
npm --version

Update npm to the latest version:

sudo npm install -g npm@latest

Install the pm2 process manager globally for production process management:

sudo npm install -g pm2

The base-devel package group supplies the development tools needed to compile native dependencies.

Verify all dependencies:

which node npm git pm2

Cloning and Building Uptime Kuma

Repository cloning requires Git access to the official Uptime Kuma repository:

git clone https://github.com/louislam/uptime-kuma.git
cd uptime-kuma

From the project directory, examine the project structure:

ls -la
cat README.md

Run the setup command to install dependencies and prepare the application:

npm run setup

In the 1.x releases, the setup script checks out the current release tag, installs the production Node.js dependencies, and downloads a prebuilt copy of the web interface rather than building it locally. The process may take several minutes depending on network speed and system performance.

Handle deprecation warnings by reviewing npm output for security advisories. Most warnings are informational and don’t affect application functionality.

Verify successful installation by checking for the server directory and built assets:

ls -la server/
ls -la dist/

Running Uptime Kuma

Testing the installation begins with a direct Node.js execution:

node server/server.js

This command starts Uptime Kuma in the foreground with console output. Press Ctrl+C to stop the service after verifying functionality.

Production deployment with pm2 provides process management, automatic restarts, and log handling:

pm2 start server/server.js --name uptime-kuma

Configure pm2 to start the service automatically after system reboots:

pm2 startup
pm2 save

Follow the instructions that pm2 startup prints to complete the startup configuration.

Monitor processes with pm2 commands:

# View process status
pm2 status

# Monitor real-time logs
pm2 logs uptime-kuma

# Monitor system resources
pm2 monit

Log management and rotation prevent disk space issues:

pm2 install pm2-logrotate
pm2 set pm2-logrotate:max_size 10M
pm2 set pm2-logrotate:retain 7

Creating a Systemd Service

Custom systemd service provides system-level service management independent of user sessions. Create the service file:

sudo nano /etc/systemd/system/uptime-kuma.service

Add the following configuration:

[Unit]
Description=Uptime Kuma
After=network.target

[Service]
Type=simple
User=uptime-kuma
WorkingDirectory=/opt/uptime-kuma
ExecStart=/usr/bin/node server/server.js
Restart=always
RestartSec=10
Environment=NODE_ENV=production

[Install]
WantedBy=multi-user.target

This unit expects the application in /opt/uptime-kuma, so move or clone the repository there, or adjust WorkingDirectory to match your path. Create a dedicated user for security:

sudo useradd --system --shell /bin/false uptime-kuma
sudo chown -R uptime-kuma:uptime-kuma /opt/uptime-kuma

Enable and start the service:

sudo systemctl daemon-reload
sudo systemctl enable uptime-kuma
sudo systemctl start uptime-kuma

Manage the service with standard systemctl commands:

sudo systemctl status uptime-kuma
sudo systemctl restart uptime-kuma
sudo systemctl stop uptime-kuma

Installation Method 3: AUR Package

Using AUR Helper

AUR (Arch User Repository) provides community-maintained packages for Arch-based distributions. Install an AUR helper like yay:

sudo pacman -S --needed git base-devel
git clone https://aur.archlinux.org/yay.git
cd yay
makepkg -si

Search for Uptime Kuma packages:

yay -Ss uptime-kuma

Install from AUR with automatic dependency resolution:

yay -S uptime-kuma

The alternative AUR helper paru offers similar functionality:

sudo pacman -S paru
paru -S uptime-kuma

AUR package maintenance depends on community maintainers. Check package comments and votes before installation to assess package quality and maintenance status.

Updates integrate with system package management:

yay -Syu

Post-Installation Configuration

AUR package-specific configuration files typically reside in /etc/uptime-kuma/:

ls -la /etc/uptime-kuma/

The environment file configures service parameters:

sudo nano /etc/uptime-kuma/uptime-kuma.env

Add configuration variables:

NODE_ENV=production
UPTIME_KUMA_HOST=0.0.0.0
UPTIME_KUMA_PORT=3001

Service management with systemd follows standard patterns:

sudo systemctl enable uptime-kuma
sudo systemctl start uptime-kuma
sudo systemctl status uptime-kuma

Automatic backup features may be included in AUR packages. Review package documentation for backup procedures and upgrade policies.

Initial Configuration and Setup

First-Time Setup

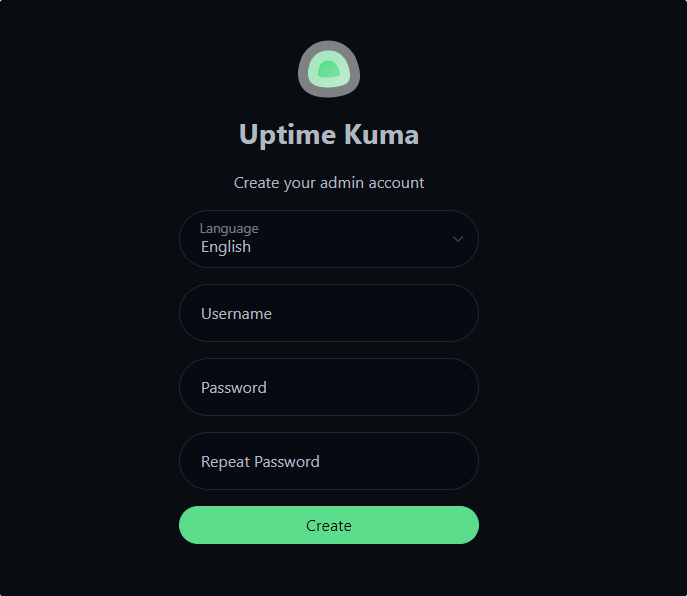

Access the web interface by opening a browser and navigating to http://localhost:3001. The initial setup wizard guides you through administrator account creation.
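Right after starting the container or service, the interface can take a few seconds to come up. The sketch below polls a URL until it answers, which is handy in provisioning scripts. It assumes curl is installed, and wait_for_http is a hypothetical helper name.

```shell
#!/bin/sh
# wait_for_http URL [TRIES] [DELAY_SECONDS]
# Succeeds as soon as URL answers without an HTTP error; fails after TRIES attempts.
wait_for_http() {
    url=$1
    tries=${2:-30}
    delay=${3:-2}
    i=0
    while [ "$i" -lt "$tries" ]; do
        # -f: treat HTTP error statuses as failures, -s: silent, -o: discard the body
        if curl -fs -o /dev/null --max-time 5 "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example:
#   wait_for_http http://localhost:3001 && echo "Uptime Kuma is reachable"
```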

Create the admin account with a strong password. Choose a username that doesn’t reveal administrative privileges to potential attackers.

Two-factor authentication setup enhances security through TOTP applications like Google Authenticator or Authy. Scan the QR code or manually enter the secret key into your authenticator app.

Basic security settings include session timeout configuration and login attempt limits. These settings balance security with usability based on your deployment environment.

Take a moment to learn the dashboard layout, including the main navigation areas, monitor status indicators, and notification panels, before adding monitors.

Basic Configuration

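Uptime Kuma 1.x, which the louislam/uptime-kuma:1 image and the steps in this guide install, stores its data in an embedded SQLite database and needs no database setup. SQLite suffices for most use cases with hundreds of monitors. At the time of writing, support for external MariaDB/MySQL servers belongs to the 2.0 release line, and PostgreSQL is not a supported backend.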

SMTP email configuration enables email notifications. Configure SMTP server details, authentication credentials, and test email delivery:

SMTP Host: smtp.gmail.com
SMTP Port: 587
Security: STARTTLS
Username: your-email@gmail.com
Password: app-password

Default monitoring intervals balance responsiveness with resource usage. Start with 60-second intervals and adjust based on performance requirements and service criticality.

Time zone configuration ensures accurate timestamps in logs and notifications. Select your local time zone from the dropdown menu in settings.

Localization settings support multiple languages for international teams. Language changes apply immediately without requiring service restarts.

Adding Your First Monitor

Create an HTTP(s) website monitor by clicking the “Add New Monitor” button. Enter the website URL, monitoring interval, and timeout values.

Monitor configuration options include:

- Friendly Name: Descriptive label for easy identification

- URL: Full URL including protocol (http:// or https://)

- Heartbeat Interval: Check frequency in seconds

- Retries: Number of retry attempts before marking as down

- Timeout: Maximum wait time for response
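These options together determine how quickly an outage turns into an alert. The sketch below estimates the worst case: the outage begins just after a successful check, the next regular check fails, and each retry then waits one retry interval. The retry interval parameter is an assumption (it is not in the list above), request timeouts are ignored, and the function name is hypothetical.

```shell
#!/bin/sh
# worst_case_detection_seconds INTERVAL RETRIES RETRY_INTERVAL
# Rough upper bound on the time between an outage starting and the monitor going down.
worst_case_detection_seconds() {
    echo $(( $1 + $2 * $3 ))
}
```

With a 60-second interval, 3 retries, and a 20-second retry interval, the estimate is 120 seconds. Lower values shorten detection time at the cost of more checks and more false alarms.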

Notification rules setup determines when and how alerts are sent. Create notification groups for different service categories or stakeholder groups.

Monitor status indicators use color coding:

- Green: Service is up and responding normally

- Red: Service is down or not responding

- Yellow: Pending state, where a check has failed and retries are still in progress

- Gray: Monitor is paused or pending first check

Test monitor functionality by temporarily blocking the monitored service or modifying the URL to trigger a down state. Verify that notifications are sent correctly and status updates appropriately.

Security Hardening and Best Practices

Network Security

Firewall configuration restricts access to the monitoring interface. Configure UFW (Uncomplicated Firewall) to allow only necessary connections:

# Allow SSH first so enabling the firewall does not cut off a remote session
sudo ufw allow 22/tcp # SSH access
sudo ufw allow 3001/tcp # Uptime Kuma (if direct access needed)
sudo ufw enable

Reverse proxy setup with Nginx provides SSL termination and enhanced security. Install and configure Nginx:

sudo pacman -S nginx
sudo systemctl enable nginx

Create an Nginx virtual host configuration:

server {
    listen 80;
    server_name monitoring.yourdomain.com;
    return 301 https://$server_name$request_uri;
}

server {
    listen 443 ssl http2;
    server_name monitoring.yourdomain.com;

    ssl_certificate /path/to/ssl/cert.pem;
    ssl_certificate_key /path/to/ssl/private.key;

    location / {
        proxy_pass http://127.0.0.1:3001;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_cache_bypass $http_upgrade;
    }
}

Let’s Encrypt provides free SSL/TLS certificates:

sudo pacman -S certbot certbot-nginx
sudo certbot --nginx -d monitoring.yourdomain.com

IP filtering restricts access to specific networks or addresses. Add allow and deny rules to the location block in the Nginx configuration:

location / {
    allow 192.168.1.0/24;
    allow 10.0.0.0/8;
    deny all;
    proxy_pass http://127.0.0.1:3001;
    # ... other proxy settings
}

Application Security

User account management follows the principle of least privilege. Create separate accounts for different administrative functions and avoid sharing credentials.

Two-factor authentication should be mandatory for all administrative accounts. Regularly rotate TOTP secrets and maintain backup codes securely.

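Regular updates keep the system protected against known vulnerabilities. On a rolling-release distribution such as Manjaro, security fixes arrive through regular system upgrades, so apply them on a schedule:

sudo pacman -Syu

The update steps for a native Uptime Kuma installation can be collected in a script, which a cron job or systemd timer can then run:

# Create update script
sudo nano /usr/local/bin/update-uptime-kuma.sh

#!/bin/bash
# Usage: update-uptime-kuma.sh <release-tag>
# Stop at the first failing step so a broken update never restarts the service
set -e
cd /path/to/uptime-kuma
# npm run setup leaves the repository on a release tag, so fetch and check out
# the requested tag instead of running git pull
git fetch --all
git checkout "$1" --force
npm install --production
npm run download-dist
pm2 restart uptime-kuma

Backup and recovery procedures protect against data loss and corruption. Create automated backups of configuration data: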

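#!/bin/bash
# Backup script
BACKUP_DIR="/backup/uptime-kuma"
# Host directory that holds the data: here, the bind mount from the Docker Compose setup.
# /app/data exists only inside the container, so it cannot be archived from the host.
DATA_DIR="$HOME/uptime-kuma/uptime-kuma-data"
DATE=$(date +%Y%m%d_%H%M%S)

mkdir -p "$BACKUP_DIR"
tar -czf "$BACKUP_DIR/uptime-kuma-$DATE.tar.gz" "$DATA_DIR"
# Remove archives older than 30 days
find "$BACKUP_DIR" -name "*.tar.gz" -mtime +30 -delete

Performance Optimization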

Database optimization for large deployments involves configuring appropriate connection pools and query optimization. Monitor database size and implement cleanup procedures for old monitoring data.

Resource monitoring helps identify performance bottlenecks. Use system monitoring tools to track CPU usage, memory consumption, and disk I/O during peak monitoring periods.
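For a lightweight record of resource usage during peak monitoring periods, the kernel’s /proc files can be sampled directly. The sketch below is Linux-only and assumes a kernel that exposes MemAvailable in /proc/meminfo. Both function names are hypothetical.

```shell
#!/bin/sh
# mem_used_percent: percentage of RAM in use, computed from /proc/meminfo.
mem_used_percent() {
    awk '/^MemTotal:/ {t=$2} /^MemAvailable:/ {a=$2}
         END { if (t > 0) printf "%d\n", (t - a) * 100 / t; else exit 1 }' /proc/meminfo
}

# load_1min: 1-minute load average, the first field of /proc/loadavg.
load_1min() {
    cut -d' ' -f1 /proc/loadavg
}

# Example log line, suitable for a cron job:
#   echo "$(date -u +%FT%TZ) mem=$(mem_used_percent)% load=$(load_1min)"
```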

Log rotation and cleanup prevents storage exhaustion:

# Configure logrotate for native installation
sudo nano /etc/logrotate.d/uptime-kuma

/var/log/uptime-kuma/*.log {
    daily
    rotate 30
    compress
    delaycompress
    missingok
    notifempty
    copytruncate
}

This configuration assumes the service writes its logs to /var/log/uptime-kuma. It uses copytruncate because the systemd unit shown earlier defines no reload action, so a postrotate step calling systemctl reload would fail.

Monitoring probe optimization balances monitoring coverage with system resources. Adjust check intervals based on service criticality and available system resources.

Troubleshooting Common Issues

Installation Problems

Node.js version compatibility issues manifest as build failures or runtime errors. Verify supported Node.js versions and use Node Version Manager (nvm) for version management:

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.0/install.sh | bash
source ~/.bashrc
nvm install 18
nvm use 18

npm permission problems occur when installing global packages. Use npm’s built-in solution:

mkdir ~/.npm-global
npm config set prefix '~/.npm-global'
echo 'export PATH=~/.npm-global/bin:$PATH' >> ~/.bashrc
source ~/.bashrc

Docker installation errors often relate to service startup or permission issues. Check Docker service status:

sudo systemctl status docker
sudo journalctl -u docker --since "1 hour ago"

AUR package build failures may result from missing dependencies or outdated PKGBUILDs. Check AUR comments for known issues and solutions:

yay -G uptime-kuma # Download PKGBUILD for inspection
cd uptime-kuma
cat PKGBUILD
cat .SRCINFO

Runtime Issues

Port conflicts prevent service startup when other applications use port 3001. Identify conflicting processes:

sudo netstat -tulpn | grep :3001
sudo lsof -i :3001

Change the port in configuration files or stop conflicting services.

Database corruption requires restoration from backups or database repair procedures. For SQLite databases:

sqlite3 /path/to/database.db ".backup backup.db"
sqlite3 backup.db "PRAGMA integrity_check;"

Memory and performance issues may require increasing system resources or optimizing monitoring configurations. Monitor system resources:

htop
free -h
df -h

SSL certificate problems affect HTTPS monitoring. Verify certificate validity and trust chains:

openssl s_client -connect example.com:443 -showcerts
curl -I https://example.com

Monitoring False Positives

Network timeout issues with Cloudflare and other CDNs require adjusted timeout values and retry counts. Increase timeout settings for services behind CDNs or with variable response times.
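To see how timeout and retry values behave against a slow or CDN-fronted endpoint before changing the monitor, the same idea can be tried with curl from the command line. This is a sketch outside Uptime Kuma, not its internal check logic. probe_url is a hypothetical helper name, and curl retries only errors it considers transient.

```shell
#!/bin/sh
# probe_url URL TIMEOUT_SECONDS RETRIES
# Prints "<http_code> <total_seconds>"; the exit status is non-zero when the request fails.
probe_url() {
    curl -s -o /dev/null \
        --max-time "$2" \
        --retry "$3" \
        -w '%{http_code} %{time_total}\n' \
        "$1"
}

# Example: allow 10 seconds per attempt and retry twice on transient errors
#   probe_url https://example.com 10 2
```

Comparing the reported total time with the monitor’s timeout shows whether the endpoint is simply slow or actually failing.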

Docker network misconfiguration prevents containers from reaching external services. Verify Docker networking:

docker network ls
docker inspect bridge

Container debugging tools help diagnose networking issues:

# Execute commands inside running container
docker exec -it uptime-kuma /bin/sh

# Test network connectivity from container (-c 4 stops after four packets)
docker exec uptime-kuma ping -c 4 google.com
docker exec uptime-kuma nslookup example.com

DNS resolution problems affect hostname-based monitoring. Configure reliable DNS servers:

# Test DNS resolution
nslookup example.com
dig example.com

# Configure DNS in Docker Compose
services:
  uptime-kuma:
    dns:
      - 8.8.8.8
      - 1.1.1.1

Comparison with Alternatives and When to Use Uptime Kuma

Uptime Kuma vs. Alternatives

Uptime Kuma vs. HertzBeat reveals different strengths in monitoring approaches. HertzBeat focuses on infrastructure monitoring with extensive metric collection, while Uptime Kuma excels at service availability monitoring with superior notification systems.

Feature comparison with Gatus shows complementary capabilities. Gatus provides advanced health check definitions with Kubernetes integration, while Uptime Kuma offers more user-friendly interfaces and broader notification support.

Statping-ng comparison highlights similar target audiences with different implementation approaches. Both serve small to medium deployments, but Uptime Kuma provides more active development and community support.

When Uptime Kuma is the right choice:

- Simple deployment requirements with minimal configuration

- Strong notification system needs with diverse communication channels

- Active development community and regular updates

- Docker-first deployment approach

- Budget-conscious deployments requiring robust functionality

Limitations and alternatives:

- Large-scale enterprise monitoring may require specialized solutions like Zabbix or Nagios

- Infrastructure monitoring needs complex metric collection systems

- Compliance requirements may mandate specific monitoring platforms

Use Cases and Scenarios

Small to medium-scale monitoring represents Uptime Kuma’s primary strength. Deployments monitoring 50-500 services benefit from the balance of simplicity and functionality.

Internal service monitoring for development teams provides visibility into application dependencies and service health without external SaaS costs.

Cost-effective SaaS alternatives make Uptime Kuma attractive for organizations seeking to reduce monitoring expenses while maintaining service quality.

Integration possibilities with existing infrastructure through webhooks, APIs, and notification systems enable seamless workflow integration without major architectural changes.

Congratulations! You have successfully installed Uptime Kuma. Thanks for using this tutorial to install the latest version of the Uptime Kuma monitoring tool on Manjaro Linux. For additional help or useful information, we recommend you check the official Uptime Kuma website.